Exploring the Magic of Surround Sound with Zylia Microphone in "Hunting for Witches" Podcast

2/9/2023

Podcasts are usually associated with two people sitting in a soundproofed room, talking into a microphone. But what if we want to add an extra layer of sound that can tell its own captivating story? That's when a regular podcast becomes a mesmerizing radio play that ignites the imagination and emotions of its listeners.

One such podcast is "Hunting for Witches" (Polish title "Polowanie na Wiedźmy") by Michał Matus, available on Audioteka. It's a documentary audio series that takes us on a journey to explore the magic of our time. We had the privilege of speaking with the chief reporter, Michał Matus, and Katia Sochaczewska, the audio producer, about the creation of "Hunting for Witches" and the role that surround sound recording played in the production. The ZYLIA ZM-1 microphone was a key tool in capturing the unique sounds that make this podcast truly special.

For the past year, Michał has been traveling throughout Europe, visiting places where magic is practiced, meeting people who have dedicated their lives to studying various forms of magic, and documenting the secret rituals that are performed to change the course of things. The ZYLIA microphone was with him every step of the way, capturing the essence of these magical places.

“You can hear the sounds of nature during a meeting with the Danish witch Vølve, in her garden, or the summer solstice celebration at the stone circle of Stonehenge, where thousands of pagan followers, druids, and party-goers were drumming, dancing, and singing.”

“I also used Zylia when I knew a scene was going to be spectacular in terms of sound, with many different sound sources around,” Michał recalls. “So, through Zylia, I recorded the summer solstice celebration at the stone circle of Stonehenge, where thousands of followers of pagan beliefs, druids and ordinary party people had fun drumming, dancing and singing. It was similar when visiting Roma witches who allowed their ritual to be recorded. All these places and events have a spatial sound that was worth preserving so that we could later recall them in the sound story.”

Want to learn more about 3D audio podcasts?

Dolby Atmos and Atmos Music are among the hottest topics in immersive audio these days. Through this new format, consumers can enjoy immersive audio over various playback solutions like multichannel home theatre setups, sound bars, or as binaural renderings through headphones, some of which even offer integrated head tracking. The other audio format playing an important role in creating immersive experiences in VR, AR, and XR is Higher-order Ambisonics (HOA). The ZYLIA ZM-1 offers a convenient, practical and affordable solution for recording sound in HOA with high spatial resolution. The question now is, how can HOA be combined with Dolby Atmos?

Higher-order Ambisonics (HOA) overview

It is important to know that the raw output of an Ambisonics microphone array, the A-format, needs to be converted into what is known as the B-format before using it in an Ambisonics production workflow. This is sometimes a bit of an obstacle for the novice. Unlike in a channel-based approach, the audio channels of the B-format are not associated in a direct way with spatial directions or positions, like the positions of speakers in the playback system, for instance. However, the more abstract representation of the sound scene in the B-format makes sound field operations like rotations fairly straightforward.
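To make the remark about rotations concrete, here is a minimal sketch of a rotation around the vertical axis. It assumes first-order B-format in ACN channel order (W, Y, Z, X) with SN3D normalization, which is only one of several conventions in use, and the function names are illustrative rather than taken from any plugin. Higher orders need larger rotation matrices, but the principle is the same.

```python
import math


def encode_first_order(azimuth_deg, elevation_deg=0.0):
    """Encode a unit-amplitude source direction as first-order B-format.

    Assumed convention: ACN channel order (W, Y, Z, X), SN3D normalization,
    azimuth counted counter-clockwise from the front.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = 1.0
    y = math.sin(az) * math.cos(el)
    z = math.sin(el)
    x = math.cos(az) * math.cos(el)
    return [w, y, z, x]


def rotate_yaw(bformat, angle_deg):
    """Rotate the whole sound field around the vertical axis.

    A positive angle moves every source counter-clockwise (towards the left).
    W and Z are unaffected; only X and Y are mixed.
    """
    w, y, z, x = bformat
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    return [w, x * s + y * c, z, x * c - y * s]


front = encode_first_order(0.0)   # source straight ahead
left = rotate_yaw(front, 90.0)    # same source after turning the scene by 90 degrees
print([round(v, 6) for v in left])
```

Note that the rotation only mixes two channels with a sine and a cosine; no knowledge of the individual sources in the scene is needed.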

Although multichannel audio is at its base, the B-format is only an intermediary step and requires decoding before you can listen to a meaningful output. This decoding process can either yield speaker feeds ranging from classic stereo to multichannel surround sound setups for a conventional channel-based output, or it can result in a binaural experience over headphones. This is just one of the reasons why one would like to deliver immersive audio recorded through Ambisonics over playback solutions that support Dolby Atmos. For delivering a mobile and immersive listener experience with comparably small hardware requirements, the capabilities of headphones with integrated head-tracking are particularly attractive.

For first-order Ambisonics (FOA) recordings, the A- and the B-format both have 4 channels. The spatial resolution is limited, and sound sources usually appear as if they are all at the same remote distance. For full-sphere HOA solutions, the A-format typically has more channels than the B-format. As an example, the ZYLIA ZM-1 has 19 channels as raw A-format output and 16 channels after converting it to 3rd order Ambisonics. It is important to remember these channel counts when planning your workflow with respect to the capacities of the DAW in question.
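The B-format channel counts quoted above follow from the fact that a full-sphere Ambisonic signal of order N has (N + 1)² channels. The short sketch below just evaluates that formula; the function name is made up for illustration, and the 19-channel A-format figure is the one given in the text.

```python
def ambisonic_channel_count(order):
    """Number of B-format channels for full-sphere Ambisonics of a given order."""
    if order < 0:
        raise ValueError("Ambisonic order must be non-negative")
    return (order + 1) ** 2


ZM1_A_FORMAT_CHANNELS = 19  # raw ZYLIA ZM-1 output, as stated in the article

for order in (1, 2, 3):
    print(f"order {order}: {ambisonic_channel_count(order)} B-format channels")
```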

Dolby Atmos overview

In order to represent rich 360° audio content, Dolby Atmos can handle internally up to 128 channels of audio. The Audio Definition Model (ADM) ensures a proper representation of all the metadata related to these channels. Dolby Atmos files are distributed through the Broadcast Wave Format (BWF) [4]. From a mixing point of view, and also for combining it with Ambisonics, it is important to be aware of two main concepts in Dolby Atmos: beds and audio objects.

You can think of beds in two ways:

- Beds are a channel-based-inspired representation of audio content following surround sound speaker layouts plus up to 4 additional height channels.

- In terms of audio content that you would send to beds, this can be for instance music that you mix for a specific surround setting. You can also think of it in general terms as soundscapes that provide a background or atmosphere for other audio elements. This background sound is spatially resolved.

If you think for instance of a nature soundscape, this could be trees with rustling leaves and a creek with running water, all sound sources with more or less distinct positions. Take an urban soundscape as another example and think of traffic with various moving cars. These are sound sources that change their positions, but you would want to use the scene as is and not touch the sources individually in your mix. These are all examples of immersive audio content that you would send to beds.

Dolby Atmos also allows for sounds to be interpreted as objects with positions in three dimensions. In a mix, these are objects that allow for control of their position in x, y, and z independently of the position of designated speaker channels. See [5] for more on beds and objects.

HOA recordings in Dolby Atmos

While beds are channel-based in their conception, they may be rendered differently, depending on the speaker count and layout of your system. Think of beds as your main mix bus and let’s think of input for beds as surround configurations (2.0, 3.0, 5.0, 5.1, 7.0, 7.1, 7.0.2, or 7.1.2). In order to take advantage of the high resolution of 3rd order recordings made with the ZYLIA ZM-1, we will pick the 7.0.2 configuration with 7 horizontal and two elevated frontal speakers and we will decode the Ambisonic B-format to a virtual 7.0.2 speaker configuration. This results in a proper input for a Dolby Atmos bed.
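As a rough illustration of what "decoding to a virtual speaker configuration" means, the sketch below projects a B-format sample onto nine virtual speaker directions. Several things here are assumptions made for brevity: it uses first order only (a ZM-1 recording would use 3rd order with 16 channels), ACN/SN3D conventions, a simple projection ("sampling") decoder, and speaker angles that were chosen for illustration and are not an official 7.0.2 specification. The decoders in the plugin suites use more refined designs.

```python
import math

# Hypothetical (azimuth, elevation) angles in degrees for a virtual 7.0.2 layout.
# Illustrative values only, not taken from a Dolby specification.
LAYOUT_7_0_2 = {
    "L": (30, 0), "R": (-30, 0), "C": (0, 0),
    "Lss": (90, 0), "Rss": (-90, 0),
    "Lrs": (135, 0), "Rrs": (-135, 0),
    "Ltf": (45, 45), "Rtf": (-45, 45),
}


def sh_first_order(az_deg, el_deg):
    """First-order spherical harmonics for one direction (ACN order W, Y, Z, X; SN3D)."""
    az, el = math.radians(az_deg), math.radians(el_deg)
    return [1.0,
            math.sin(az) * math.cos(el),
            math.sin(el),
            math.cos(az) * math.cos(el)]


def decode_sample(bformat_sample, layout=LAYOUT_7_0_2):
    """Project one B-format sample onto every virtual speaker direction."""
    feeds = {}
    for name, (az, el) in layout.items():
        gains = sh_first_order(az, el)
        feeds[name] = sum(g * b for g, b in zip(gains, bformat_sample)) / len(layout)
    return feeds


# Encode a source almost directly to the left, then decode it to the bed layout.
source = sh_first_order(85, 0)
feeds = decode_sample(source)
loudest = max(feeds, key=feeds.get)
print(loudest, {name: round(value, 3) for name, value in feeds.items()})
```

The speaker closest to the source direction receives the strongest feed, while the others receive progressively less. At first order this spread is wide, which is one reason the higher resolution of 3rd order recordings is attractive.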

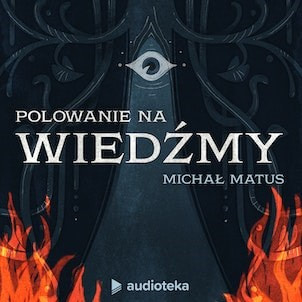

Starting with a raw recording made with the ZYLIA ZM-1 we will then have the following signal chain:

Step one is the raw output of the microphone array, the A-format. For the ZYLIA ZM-1 this is an audio file with 19 channels. From a post-production and mixing perspective, all that matters here is where you placed your microphone with respect to the sound sources. If you want your work environment to include this step of the signal chain, the tracks of your DAW need to be able to accommodate 19 channels. But this is not strictly necessary: you can also start with step two.

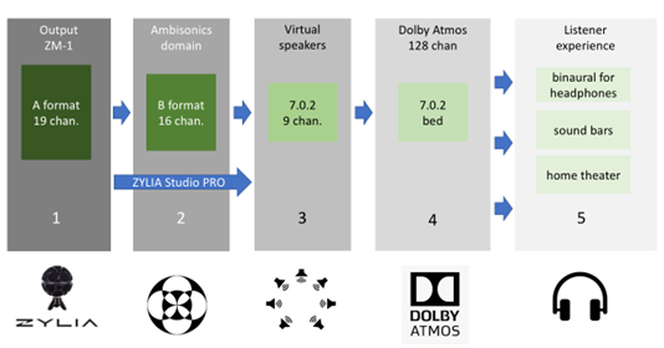

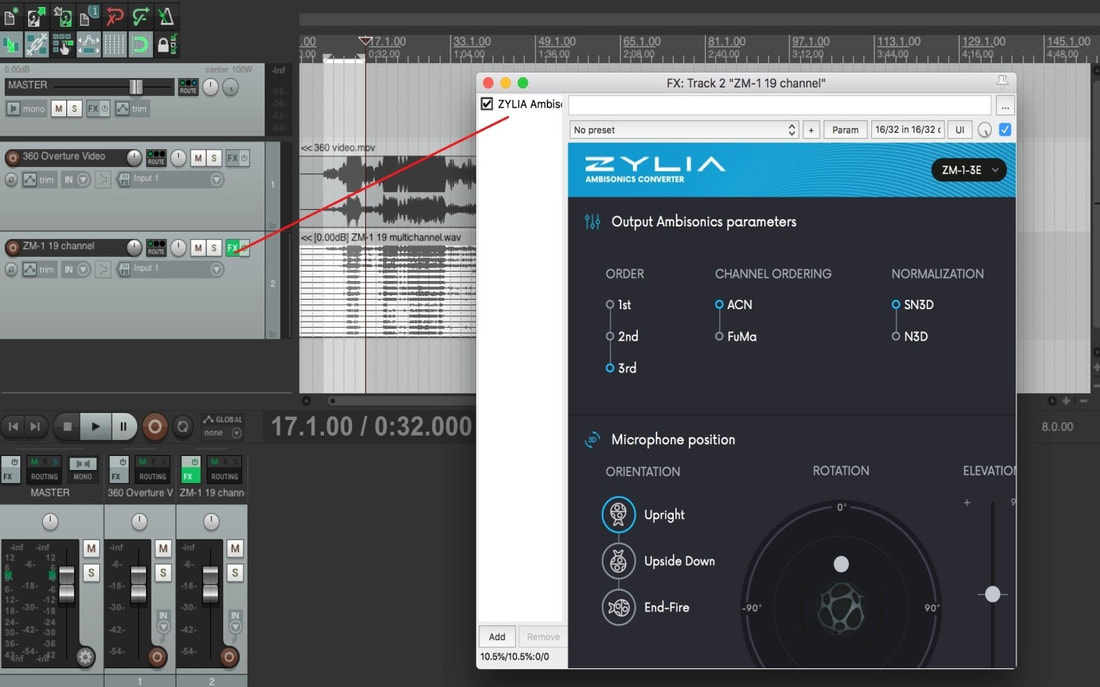

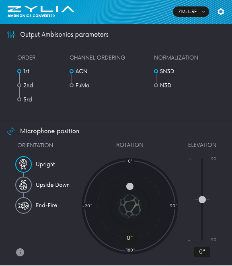

The 3rd order Ambisonic B-format contains 16 channels. For the conversion from step one to step two, you can use the Ambisonic Converter plugin from Zylia [6]. If your DAW cannot accommodate the necessary 19 channels for step one you can also convert offline with the ZYLIA Ambisonics Converter application, which also offers you batch conversion for multiple assets [7]. In many situations, it is advisable to start the signal chain with step 2, in order to save the CPU resources used by the A to B conversion for other effects. From a mixing perspective, operations that you apply here are mostly rotations, and global filtering, limiting or compression of the immersive sound scene that you want to send to Atmos beds. You will apply these operations based on how the immersive bed interacts with the objects that you may want to add to your scene later. There are various recognized free tools available to manipulate HOA B-format, for instance, the IEM [8] or SPARTA [9] plugin suites.

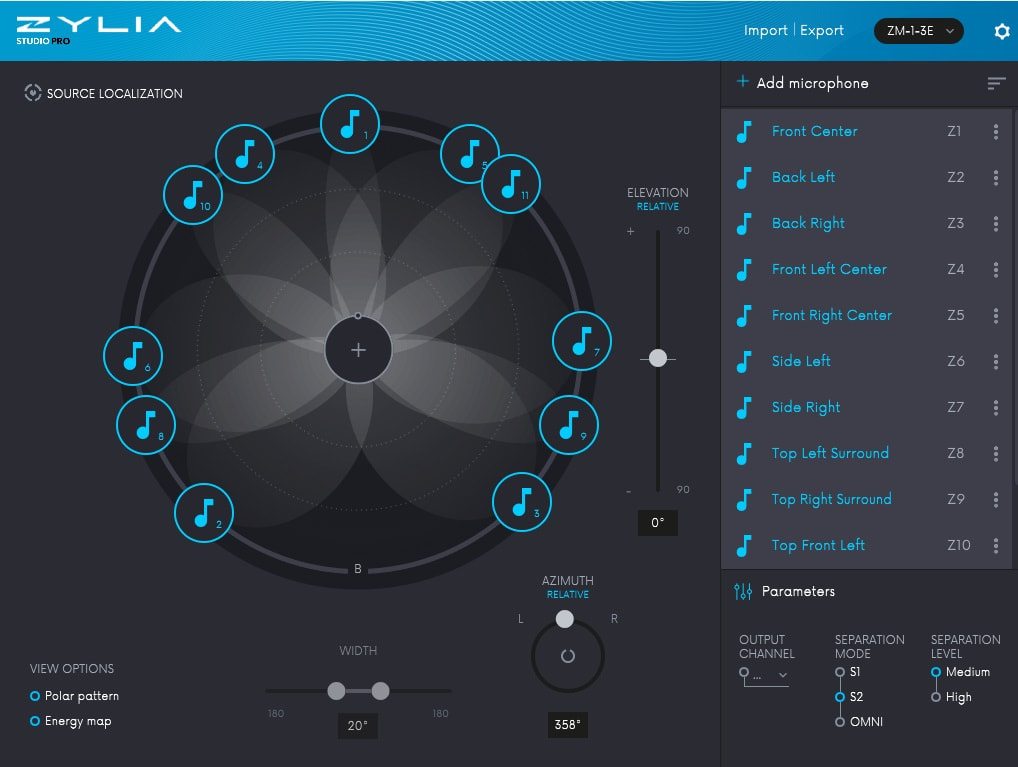

Then the Ambisonic B-format needs to be decoded to a virtual surround speaker configuration. For this conversion from the B-format, you can use various decoders that are again available from multiple plugin suites like IEM and SPARTA. ZYLIA Studio Pro [10] allows you to decode to a virtual surround layout directly from step one, the raw A-format recordings, which means that you can bypass step 2. For some background audio content, this may be a perfectly suitable choice. Part of the roadmap for ZYLIA Studio Pro is to also offer B-format input, making it a versatile high-quality decoder. From a mixing perspective and depending on the content of your bed input, you may want to choose different virtual surround configurations to decode to. Some content might be good on a smaller, more frontal bed, e.g. 3.1, and other content will need to be more enveloping. If your DAW has a channel count per track that is limited to surround sound setups, you will need to premix these beds as stems.

This bed then needs to be routed to Dolby Atmos. The details are beyond the scope of this article, and there are many excellent tutorials available that describe this process in detail. Here I want to mention that some DAWs have Dolby Atmos renderers built in, and you can study everything you practically need to know within these DAWs. With other DAWs, you will need to use the external Dolby Bridge [11]. This has a steeper learning curve, but there are also many excellent tutorials out there that cover these topics [12]. There are also hardware solutions for Dolby Atmos renderings which interface with your speaker setup, but we will not cover them here. In Dolby Atmos, you will likely also integrate additional sources as objects, and you will control their 3D pan position with the Dolby Atmos Music Panner plug-in in your DAW. From a mixing perspective, the sonic interaction between the bed and the objects will probably make you revisit steps 2 and 3 in order to rebalance, compress or limit your bed to optimise your mix.

You will need to monitor your mix to make sure that the end user experience is as intended. Only very few of us will have access to a Dolby Atmos studio for our work. Bedroom studio owners can always listen to their mix over headphones as a binaural rendering, on some recent macOS platforms over the built-in Atmos speakers, and with AirPods even over headphones with built-in head tracking. These solutions might be options depending on what you are producing for. Regarding the highly debated question of whether you can mix and master over headphones, I found the following article very insightful [13]. It elaborates on the pros and cons and also points out that the overwhelming majority of end users will listen to music over headphones. With regard to an Ambisonic mix, using headphones means that the listener will always be in the sweet spot of the spatial reproduction.

The workflow in selected DAWs

Reaper is amongst the first choices when it comes to higher-order Ambisonics, due to its 64-channel count per track. Hence for the HOA aspect of the workflow sketched above, there are no limitations. However, you will need to familiarize yourself with the Dolby Bridge and the Dolby Atmos Music Panner plug-in.

In regular Pro Tools, you will also use the Dolby Atmos Music Panner plug-in and the Dolby Bridge. Since Pro Tools has a limitation of 16 channels per track, you will need to convert all your Ambisonic assets to B-format before you can start mixing. Upgrading to Pro Tools Studio or Flex [15] adds Dolby Atmos ADM BWF import/export, native immersive panning, I/O set-up integration with the Dolby Atmos Renderer, and a number of other Dolby Atmos workflow features as well as Ambisonics tracks.

In the most recent versions of Logic, Dolby Atmos is completely integrated, so no need to use the Dolby Bridge. For the monitoring of your mix, Logic will play nicely with all Atmos-ready features from Apple hardware. However, the channel count per track is limited to beds with 7.1.4. In theory, this means that you would have to premix all the beds as multichannel stems. While you can import ADM BWF files, as the Dolby Atmos project is ready for mixing, it is less obvious how to import a bed input as discussed above. In any case, once you have a premixed bed, the only modifications available to you in the mixing process are multi-mono plugins (e.g., filters), so you cannot rotate the Ambisonic sound field anymore at this point. To summarize for Logic, while Dolby Atmos is very well integrated, the HOA part of the signal chain is more difficult to realize.

Nuendo also has Dolby Atmos integrated and it also features dedicated Ambisonic tracks up to 3rd order which can be decoded to surround tracks. This means you have a complete environment for the steps of the workflow described above.

While mostly known as a video editing environment, DaVinci Resolve features a native Dolby Atmos renderer that can import and export master files. This allows for a self-contained Dolby Atmos workflow in Resolve without the need for the Dolby Atmos Production or Mastering Suite. Its tracks can also host multichannel audio assets and effects.
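The per-track channel limits quoted above for Reaper (64) and Pro Tools (16) determine at which step of the signal chain you can start. The sketch below only encodes that reasoning, using the ZM-1 channel counts from this article. The function and dictionary names are hypothetical, and the limits should be checked against the DAW version you actually use.

```python
A_FORMAT_CHANNELS = 19            # raw ZM-1 output (step 1)
B_FORMAT_3RD_ORDER_CHANNELS = 16  # after conversion to 3rd order (step 2)

# Per-track channel limits as quoted in the article; verify for your DAW version.
TRACK_CHANNEL_LIMITS = {"Reaper": 64, "Pro Tools": 16}


def first_usable_step(daw):
    """Return the earliest step of the signal chain that fits on one track."""
    limit = TRACK_CHANNEL_LIMITS[daw]
    if limit >= A_FORMAT_CHANNELS:
        return "step 1 (A-format)"
    if limit >= B_FORMAT_3RD_ORDER_CHANNELS:
        return "step 2 (B-format)"
    return "step 3 (premixed bed stems)"


for daw in TRACK_CHANNEL_LIMITS:
    print(f"{daw}: start at {first_usable_step(daw)}")
```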

Summary

If you like this article, please let us know in the comments what we should describe in more detail in future articles.

References:

- M. Gerzon, "Periphony: With Height Sound Reproduction," J. Audio Eng. Soc., vol. 21, no. 1, pp. 2-10, (1973 February.)

- J. Daniel, J. Rault, and J. Polack, "Ambisonics Encoding of Other Audio Formats for Multiple Listening Conditions," Paper 4795, (1998 September).

- R. Nicol, and M. Emerit, "3D-Sound Reproduction Over an Extensive Listening Area: A Hybrid Method Derived from Holophony and Ambisonic," Paper 16-039, (1999 March).

- An article by Jérôme Daniel about the HOA from the Ambisonics symposium 2009: LINK

- F. Zotter, M. Frank, "Ambisonics: A practical 3D audio theory for recording, studio production, sound reinforcement, and virtual reality", Springer Nature; 2019: LINK

- Here, you find more information about the broadcast Wave format BWF: LINK

[6] The Zylia Ambisonics Converter plugin: LINK

[7] The Zylia Ambisonics Converter: LINK

[8] The IEM plugin suite: LINK

[9] The SPARTA plugin suite: LINK

[10] Zylia Studio Pro plugin: LINK

[11] A video tutorial for using the Dolby Bridge with Pro Tools: LINK

[12] A video tutorial for using the Dolby Bridge with Reaper: LINK

[13] A blog post about the limits and possibilities of mixing Dolby Atmos via headphones by Edgar Rothermich: LINK

[14] Information about Dolby support for various DAWs: LINK

[15] Here you can compare Pro Tools versions and their Dolby and HOA support: LINK

#zylia #dolbyatmos #ambisonics

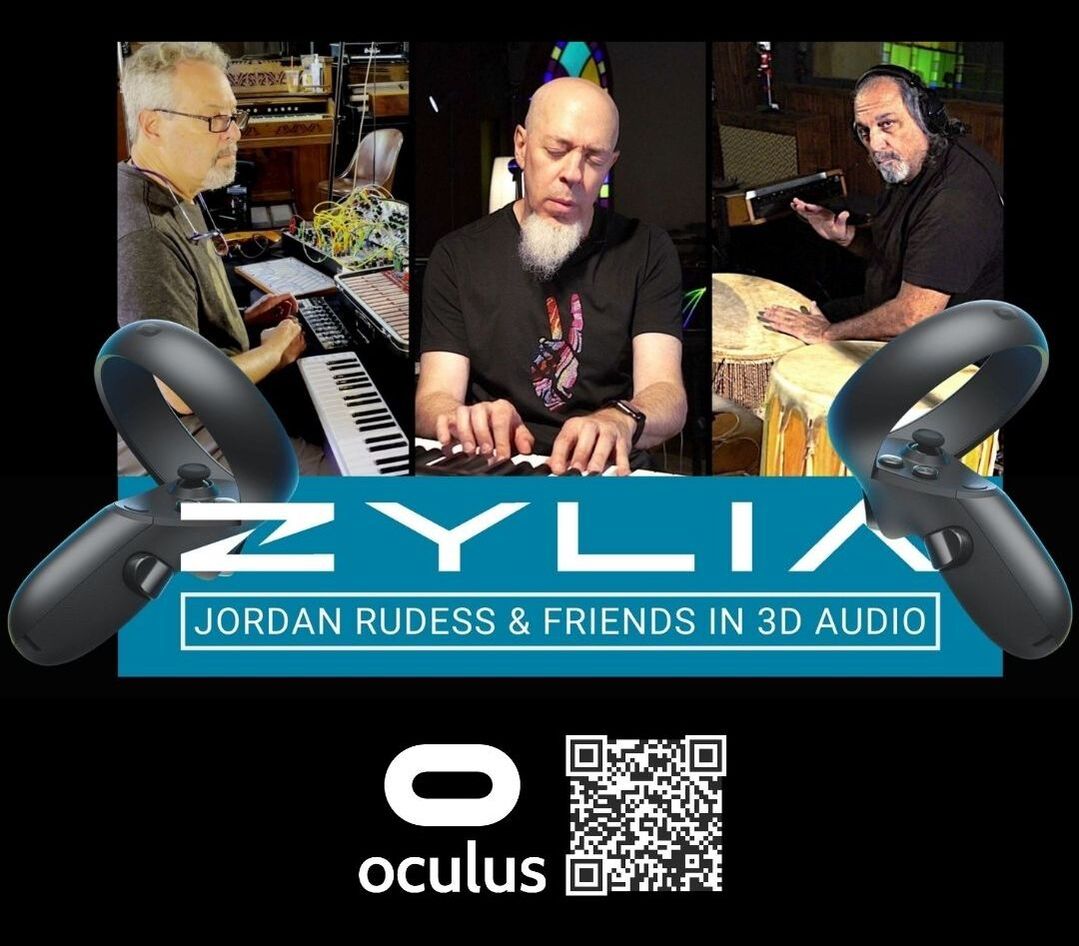

The idea

Zylia’s 3D Audio recording solution gives artists a unique opportunity to connect intimately with the audience. Thanks to the Oculus VR application, the viewer can experience each uniquely developed element of the performance at home as vividly as it would be by a live audience member. Using Oculus VR goggles and headphones, viewers have the opportunity to:

- watch the concert from several different perspectives each with a 360° view,

- listen to the music in 360° spatial audio at every vantage point,

- tilt and turn their head and witness how 3D audio follows their movement perfectly,

- hear the unique acoustics of the venue,

- feel the fantastic atmosphere of the event.

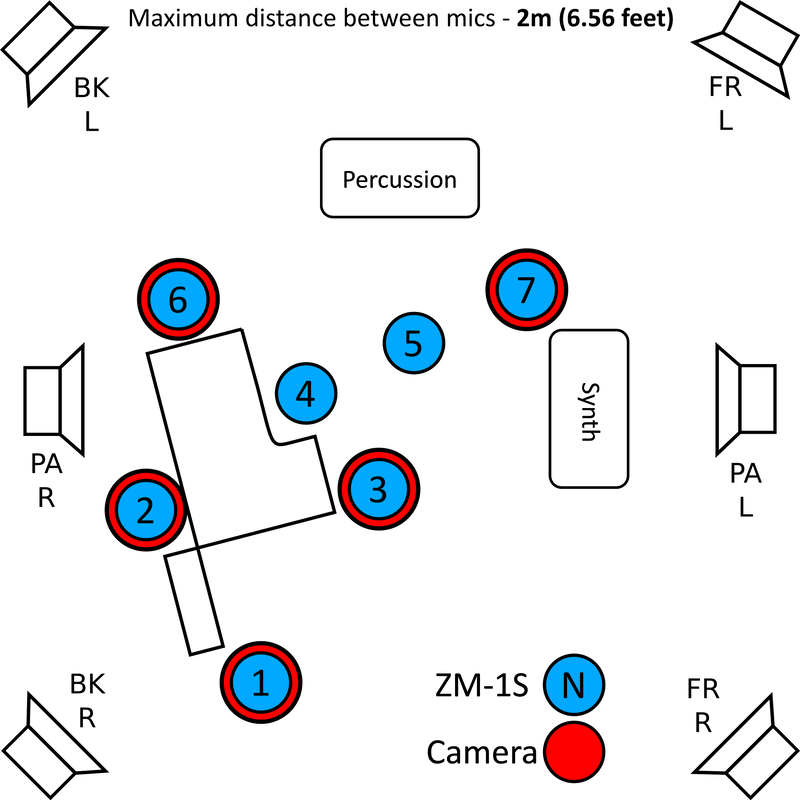

Technical information

The equipment used for the recording of the “Jordan Rudess & Friends” concert

- One Mac Mini used for recording

- 6 ZM-1S microphones with synchronization

- 5 Qoocam 8K cameras

The 360° videos from the Qoocam 8K were stitched and converted to an equirectangular video format.

For the purpose of distributing the concert, a VR application was created in Unity3D and Wwise engines for Oculus Quest VR goggles.

Want to learn more about multi-point 360 audio and video productions? Contact our Sales team:

Example: Astor Piazzolla’s compositions performed by Students of State Music School in Konin

- macOS system (connected to both Ethernet and Wi-Fi)

- Insta360 One R camera

- Insta360 mobile app installed on your smartphone

- ZM-1 microphone

- OBS (https://obsproject.com/)

- ZYLIA Streaming application (https://www.zylia.co/zylia-streaming-application-download.html)

- MacOS RTMP Local host application (https://github.com/sallar/mac-local-rtmp-server)

- Facebook profile

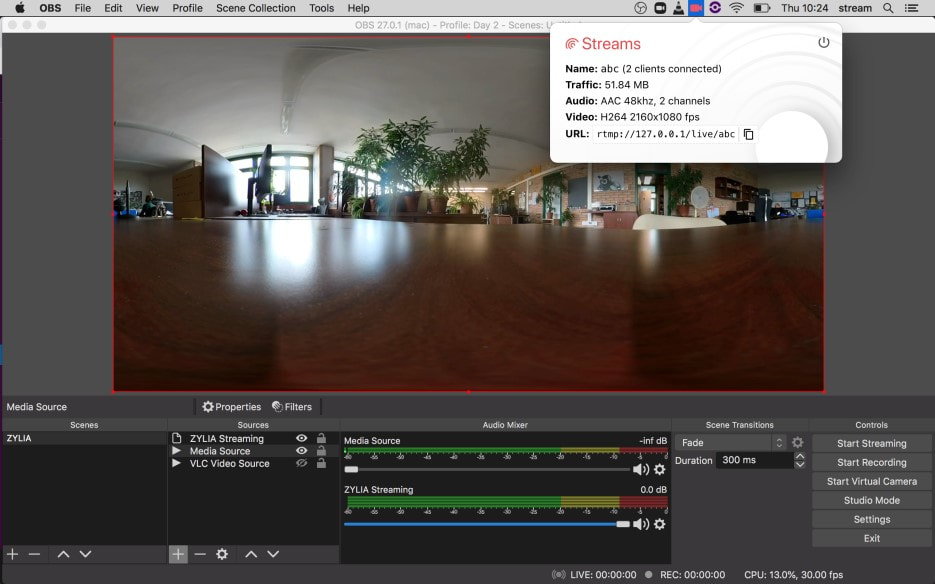

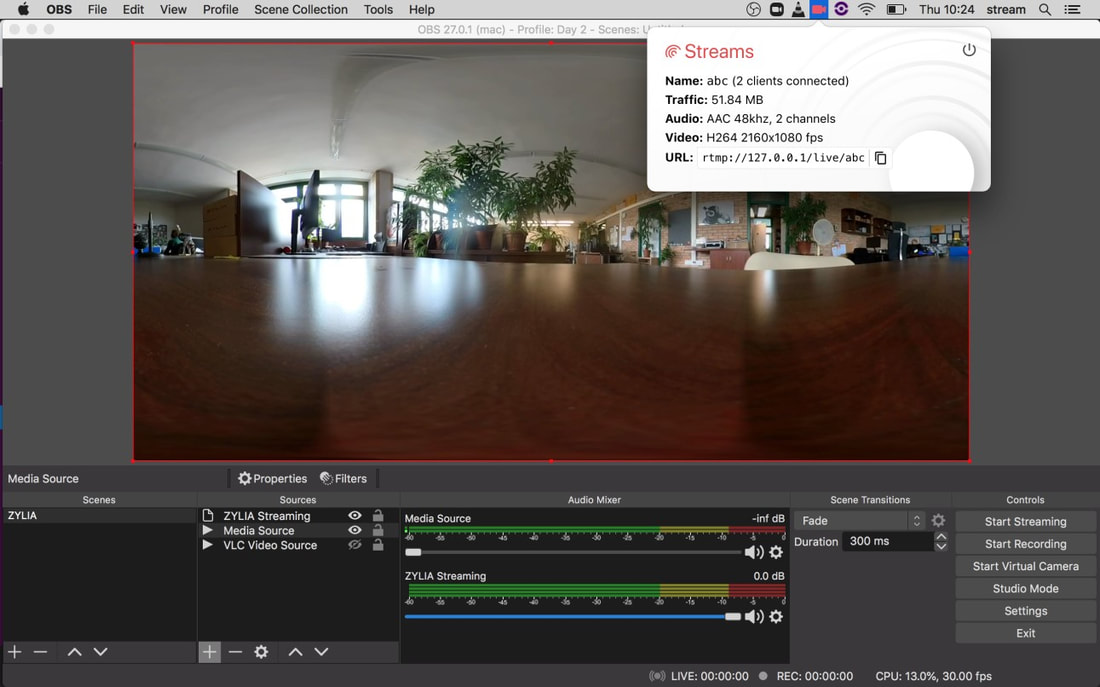

A. Receiving a live 360° video from the Insta360 to OBS

B. Receiving Ambisonics audio from the ZM-1 microphone to OBS.

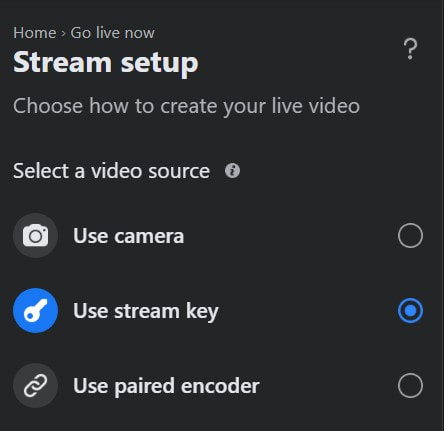

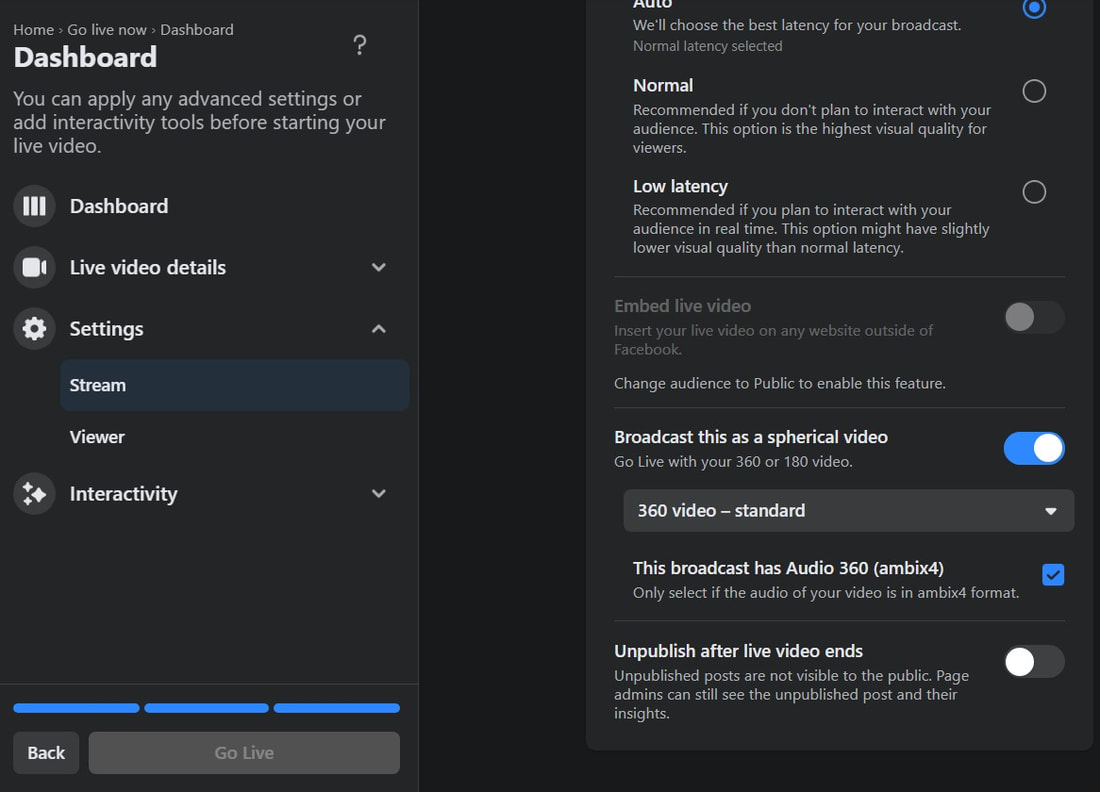

C. Configuring Facebook for live stream of 360° video with Ambisonics audio

1. Turn on the Insta360 camera and connect your computer Wi-Fi to the corresponding Insta360 Wi-Fi network.

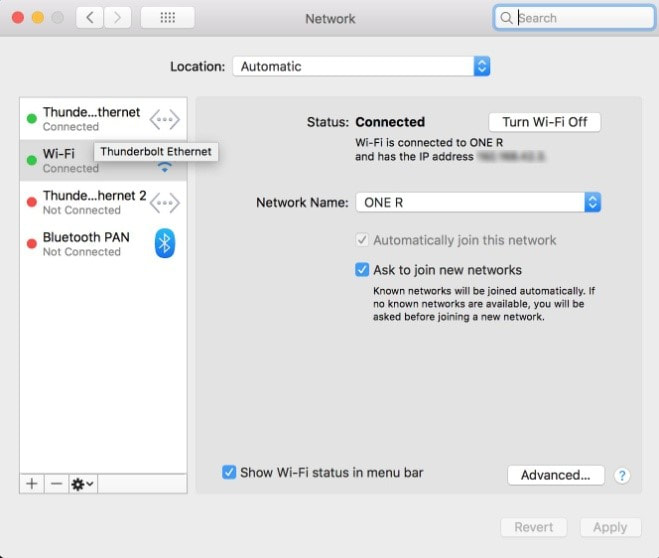

2. At the same time, connect your computer to your local network with an Ethernet cable. Your network preferences should now show both Ethernet and Wi-Fi as connected.

In step 4 you will need the IP address of the Insta360 Wi-Fi connection shown in your network preferences.

The local RTMP server application listed above is used to receive a live stream from the Insta360 camera. It also provides the localhost address for OBS.

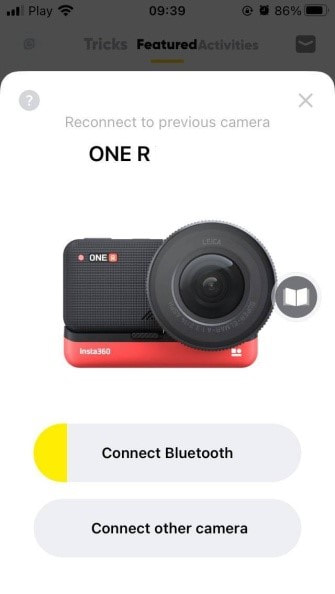

4. Using your smartphone, connect to the Insta360 camera Wi-Fi and Bluetooth using the Insta360 mobile application. Scroll to the option “Live” and select RTMP server. Here you should add the IP address of the Insta360 Wi-Fi connection mentioned in step 2. At the end of the address, add a unique key (it can be anything):

rtmp://Insta360IPaddress/live/abc

rtmp://192.168.42.3/live/abc (example)
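If you prefer to assemble the address programmatically, for instance to avoid typos when the IP address changes, a small helper like the one below can build it. This is only a convenience sketch: the function name is made up, and the "live" path segment simply mirrors the example address above.

```python
import ipaddress


def build_rtmp_url(ip, stream_key, app="live"):
    """Build an RTMP address of the form rtmp://<ip>/<app>/<key>."""
    ipaddress.ip_address(ip)  # raises ValueError if the address is malformed
    if not stream_key or "/" in stream_key or " " in stream_key:
        raise ValueError("stream key must be non-empty and contain no '/' or spaces")
    return f"rtmp://{ip}/{app}/{stream_key}"


print(build_rtmp_url("192.168.42.3", "abc"))
```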

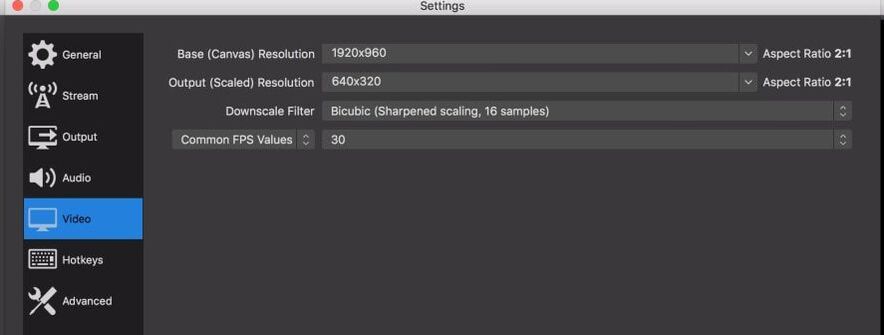

- On the video resolution you require a 2:1 format such as 1280x640

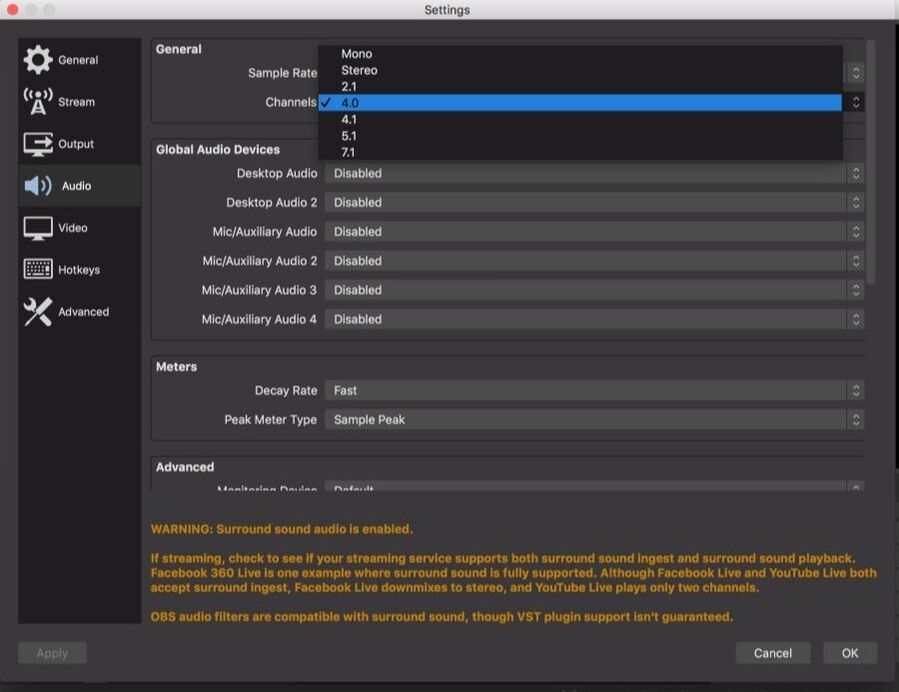

- Also, select your audio to 4.0 to receive 1st order Ambisonics audio later.

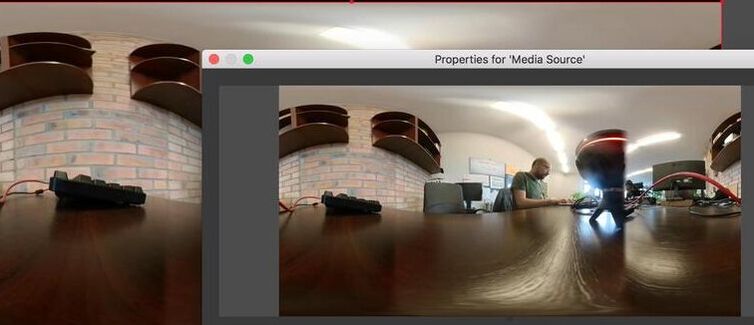

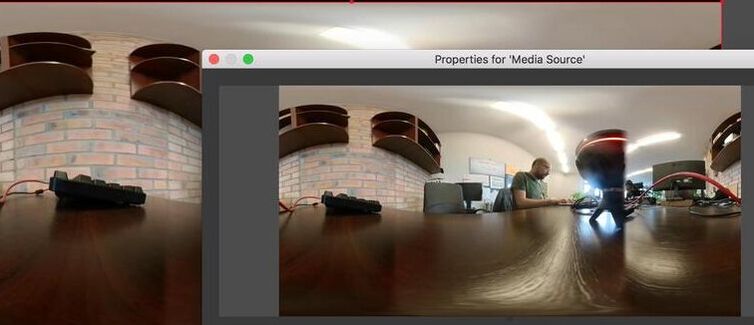

- On the Sources, click the plus sign and add “Media Source”

- Disable “Local File”

- In the Input field, add the same address you entered for the Insta360 camera. You may also use:

- rtmp://localhost/live/abc

After adding the source, you should be receiving a 360° video from the Insta360 into OBS.

7. Connect your ZM-1 microphone to your computer.

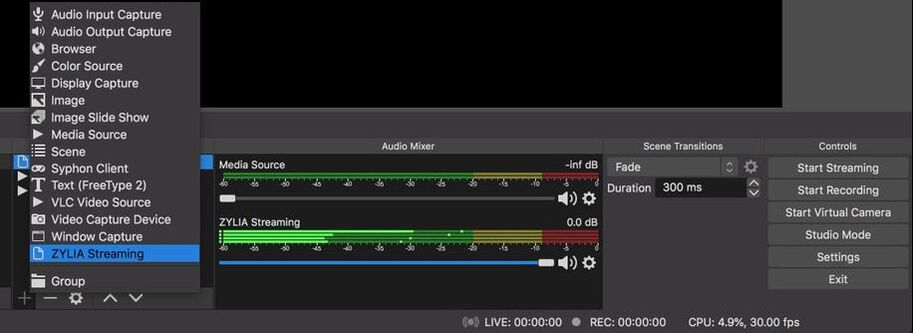

Open ZYLIA Streaming application.

As an input select your ZM-1 microphone. As an output select ZYLIA Streamer OBS plugin. (As an output you may also use the BlackHole virtual audio device 16 channel as long as you select it as an audio input in OBS.) Select 1st Ambisonics Order and press Start streaming.

Intuitively, we may understand it by analogy to 3D space, which as we know is made up of three dimensions: width, depth, and height. In real life, any position in space is characterized by these dimensions.

Now imagine that you are outside, for example in a park, full of various sounds. You can hear children laughing on the playground behind you, a dog is barking on your left, and a couple of people are talking while sitting on a bench in front of you. You can hear their voices more and more clearly when you move towards them. We may say that you are in a 3-dimensional sound space. When you move, the sound you hear changes as well, corresponding to the position of your ears (its intensity, direction, height and even timbre).

Reproducing this natural human way of hearing in a recording isn’t easy. However, we already have the technology that allows us to do it: binaural and Ambisonics sound.

Binaural sound reflects the way we hear the world through our ears. It means that when we listen to the audio recording through the headphones it is possible for us to locate sounds coming from left, right, front, behind, above or below. Moreover, the binaural sound is very realistic and spatial and gives an impression of being at the place of recording.


Ambisonics sound is also a representation of natural spatial listening. However, when we record with an Ambisonics microphone, we record a 360° sphere around the device, so the whole 3D sound space. This kind of Ambisonics audio can be further described by ‘orders’. The higher the order, the better the accuracy of the reproduced sound and the greater the depth or, in other words, the higher the resolution of the recorded sound sphere.

Below, you may find some great recordings that will allow you to hear the difference between these different formats. We have also summarized their characteristics in the bullet points, to present the information most clearly.

Mono

- 1 audio channel

- no option to localize individual sound sources

- the same, flat sound coming from all headphones or speakers

Stereo

- 2 audio channels (left and right)

- headphones or at least 2 speakers required

- possibility to recognize if the sound comes from the left or right

- no option to recognize if the sound comes from above, behind or below

- differences in the sound coming from each speaker

- no requirement of using headphones for accurate stereo playback

Binaural

- 2 audio channels

- sound captured identically to the way we hear the world

- possibility to localize if the sound comes from left, right, front, behind, above or below

- very realistic sound

- an impression of being at the place of recording

- impression of sound source proximity

- immersive sound impression

- headphones necessary for accurate binaural playback

- customizable headphones playback

Ambisonics

- 4 or more audio channels (at least 16, for high quality and spatial resolution)

- possibility to rotate like in 360 movies

- compatible with YouTube360 or Facebook360

- the representation of natural spatial listening

- immersive sound impression

- an impression of being at the place of recording

- possibility to localize the sound coming from any direction

- possibility to decode the sound to any array of speakers

- possibility to convert the sound for playback on headphones (recommended)

- possibility of moving smoothly across 3-dimensional space both with video and audio

#zylia #binaural #ambisonics #3Daudio #surround #spatial #sound

- macOS

- ZYLIA ZM-1 microphone

- ZYLIA Ambisonics Converter plugin

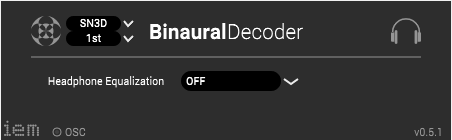

- IEM Binaural Decoder

- Reaper

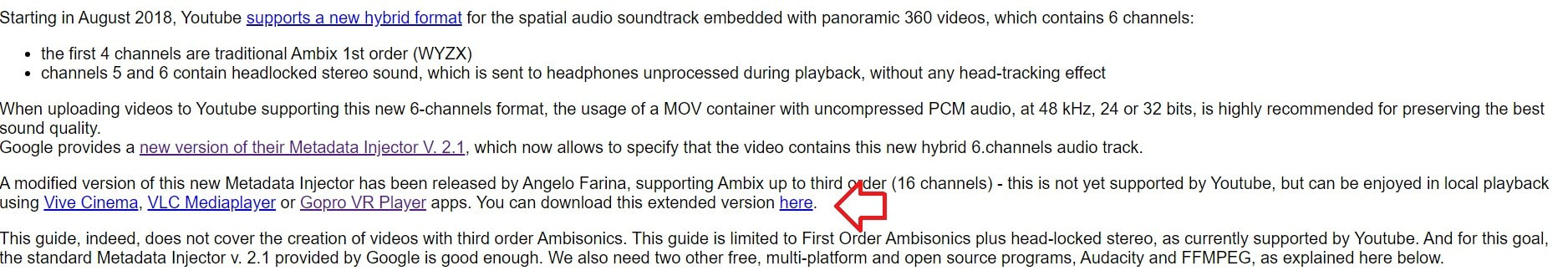

- Modified version of Google Spatial Media Metadata Injector created by professor Angelo Farina.

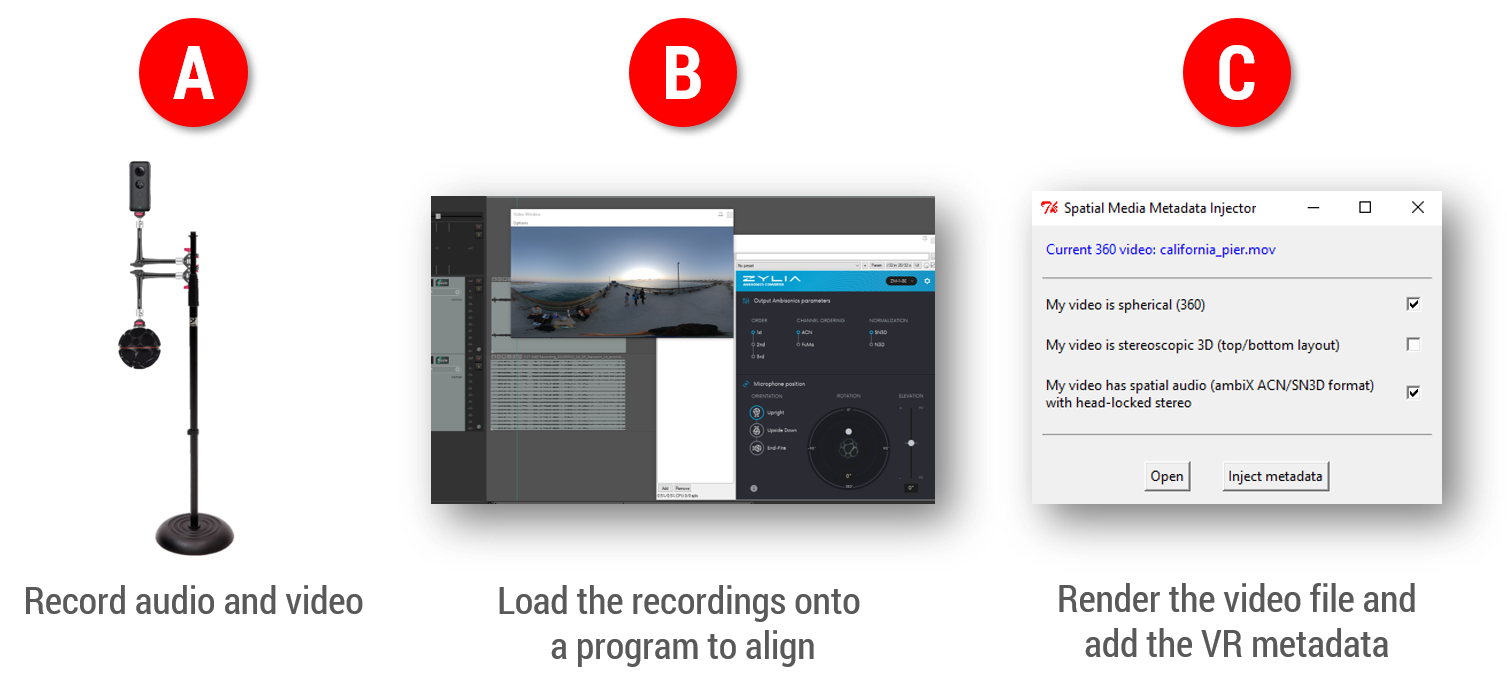

A: Preparing the 360 content with 16 channels

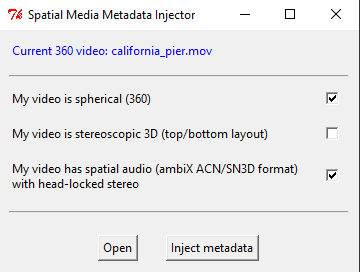

B: Injecting metadata using Spatial Media Injector version, modified by Angelo Farina.

At the moment, the HOAST library ( https://hoast.iem.at/ ) is the only platform that allows online video playback of 3rd order Ambisonics, so the content created in this tutorial is meant to be watched locally using VLC player.

For this tutorial, basic Python knowledge is advised.

For preparing a 360 video with 1st order Ambisonics, visit the link:

https://www.zylia.co/blog/how-to-prepare-a-360-video-with-spatial-audio

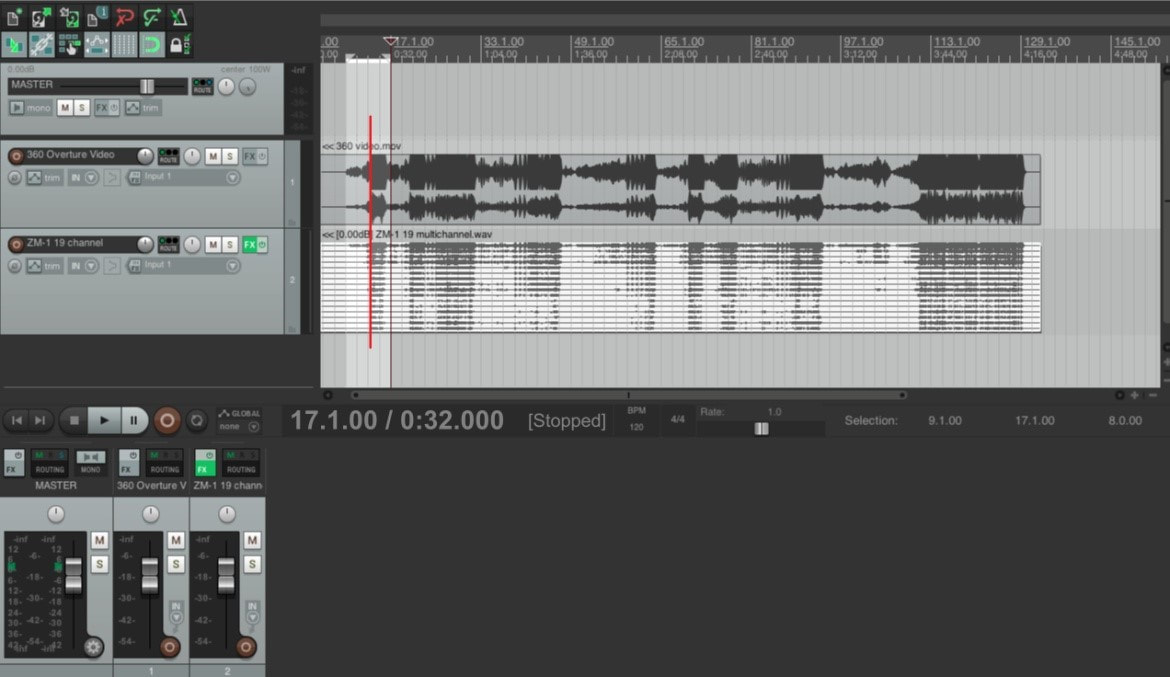

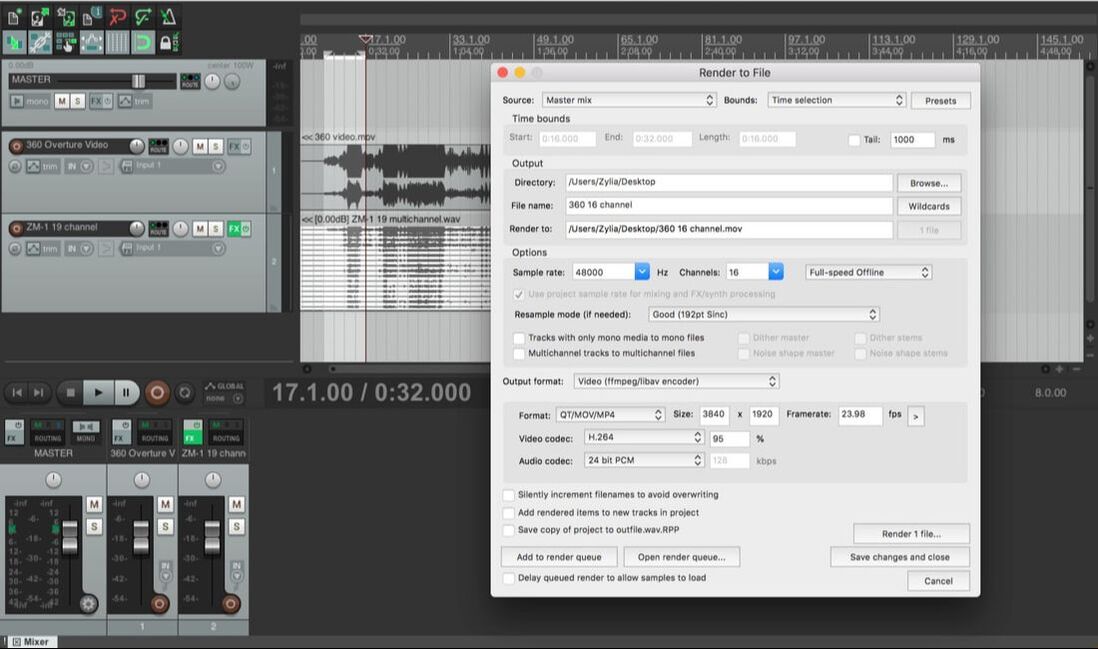

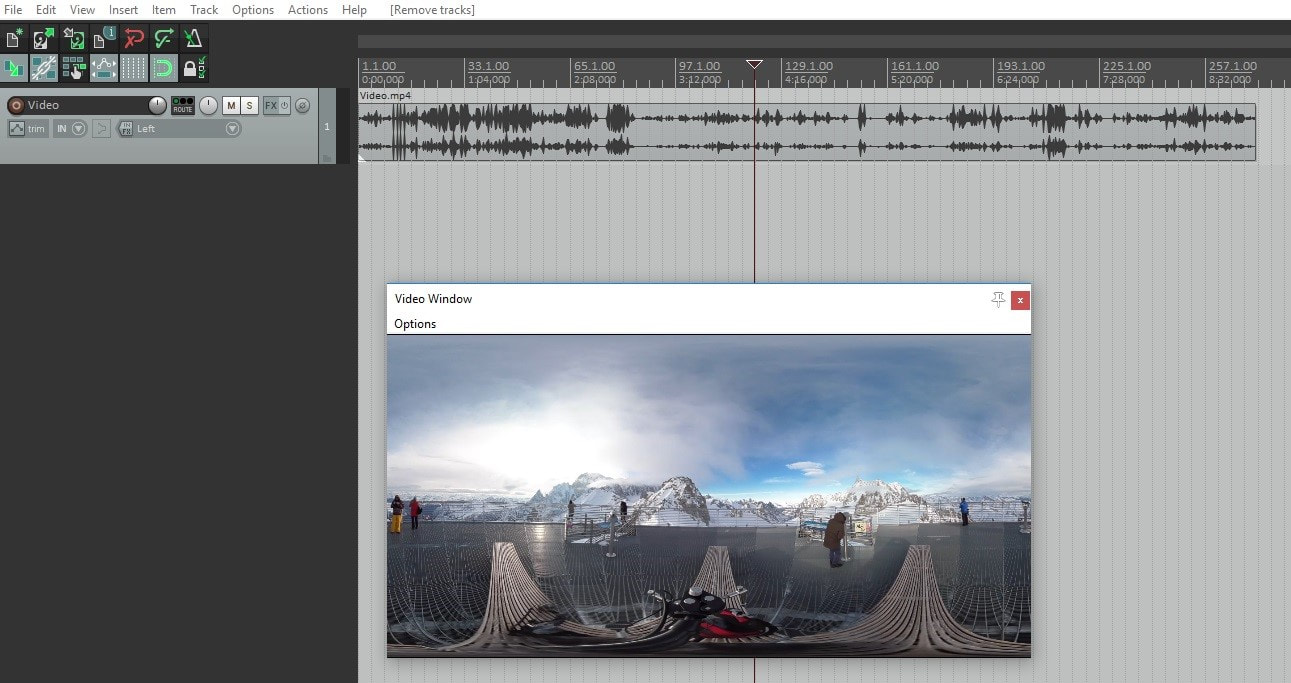

2. After recording, import the 360 video and the 19-channel audio file into Reaper.

Synchronize the audio and video.

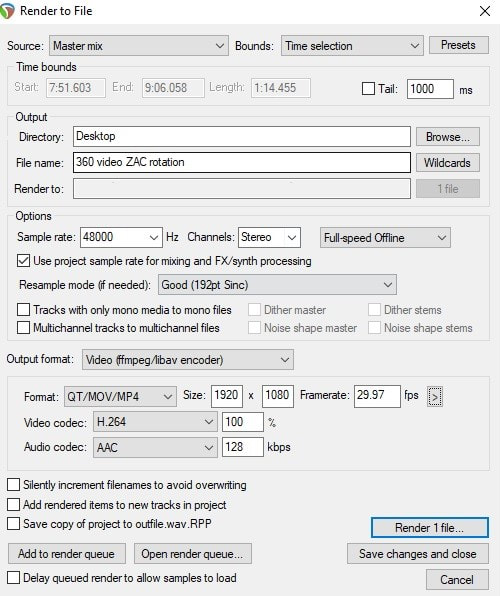

As for the settings:

Sample rate: 48000

Channels: 16 (click on the space and manually type 16)

Output format: Video (ffmpeg/libav encoder)

Size: 3840 x 1920 (or Get width/height/framerate from current video item)

Format: QT/Mov/MP4

Video Codec: H.264

Audio Codec: 24 bit PCM

Render the video.

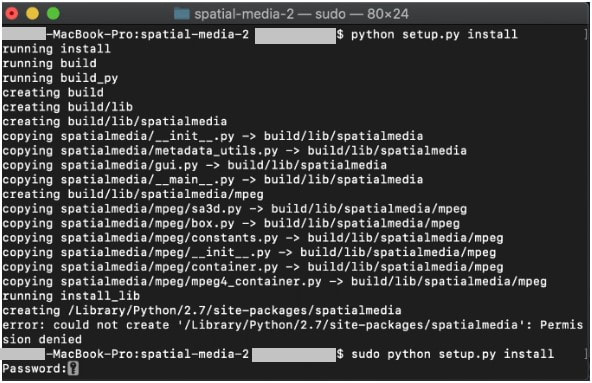

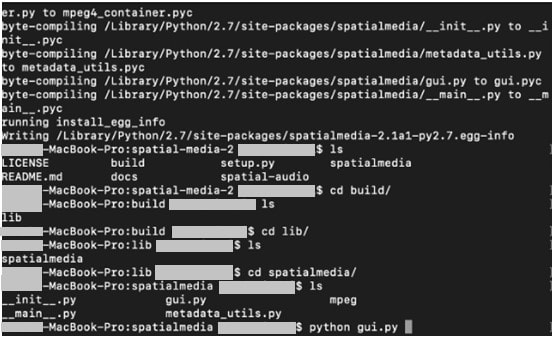

In order to do this, Python is required. Python is preinstalled in macOS, but you can download Python 2.7 here: https://www.python.org/download/releases/2.7/

Afterward, download Angelo Farina’s modified version of Spatial Media Metadata Injector, located at:

http://www.angelofarina.it/Ambix+HL.htm

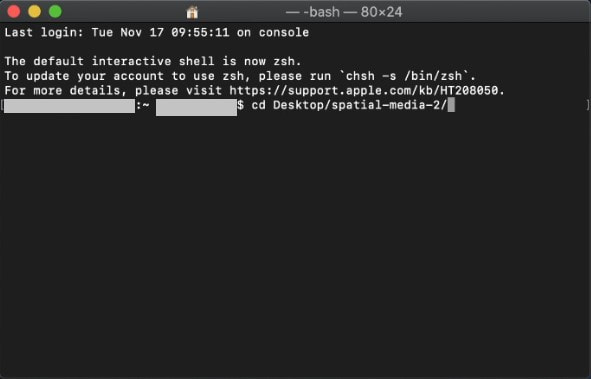

1. With the downloaded file located on your Desktop, open the macOS Terminal application.

2. Using the “cd” command, go to the folder where you have Spatial Media Injector (e.g. “cd ~/Desktop/spatial-media-2/”)

6. Enter “python gui.py” and the application should run.

This allows us to prepare a standard 2D video while keeping the focus on the action from the video and audio perspective.

It also allows us to control the video and audio rotation in real time using a single controller.

Reaper DAW was used to create automated rotation of 360 audio and video.

Audio recorded with ZYLIA ZM-1 microphone array.

Below you will find our video and text tutorial which demonstrate the setup process.

Thank you Red Bull Media House for providing us with the Ambisonics audio and 360 video for this project.

Ambisonics audio and 360 video is Copyrighted by Red Bull Media House Chief Innovation Office and Projekt Spielberg, contact: cino (@) redbull.com

Created by Zylia Inc. / sp. z o.o. https://www.zylia.co

- Video and audio recorded with 360 video camera and ZM-1 microphone

- ZYLIA Ambisonics Converter plugin

- IEM Binaural Decoder

- Reaper

We will use Reaper as a DAW and video editor, as it supports video and multichannel audio from the ZM-1 microphone.

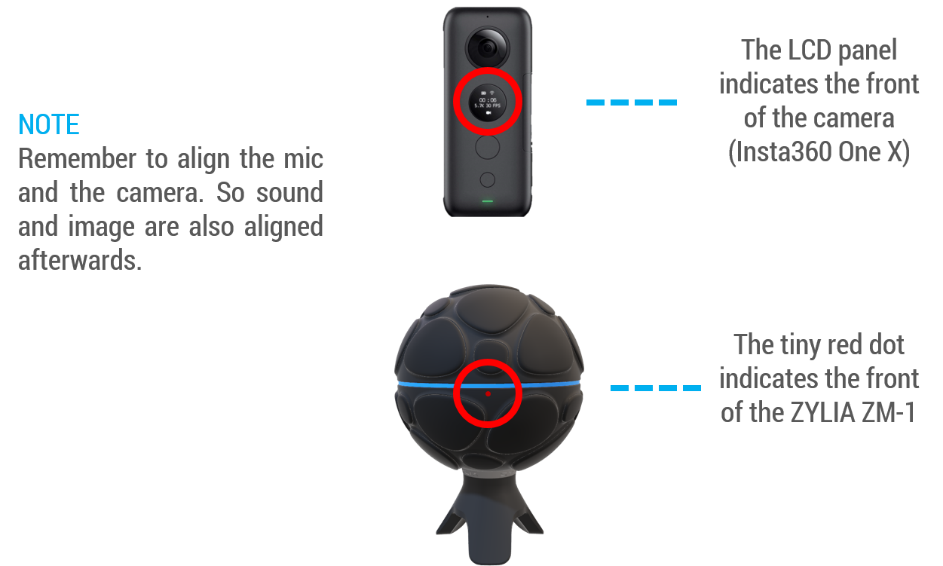

Before recording the 360 video with the ZM-1 microphone, make sure the front of the camera points in the same direction as the front of the ZM-1 (the red dot on the equator marks the front of the ZM-1 microphone). This prevents problems later and tells you in which direction to rotate the audio and video.

The video file format may be .mov .mp4 .avi or other.

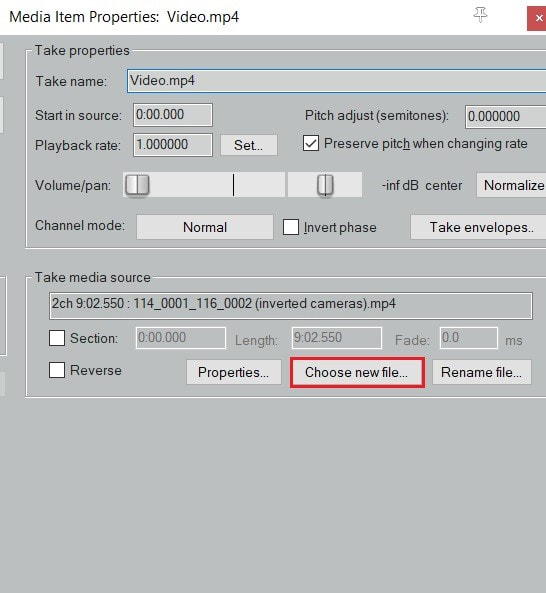

From our experience, we recommend working on a compressed version of the video and replacing this media file later for rendering (step 14).

To open the Video window click on View – VIDEO or press Control + Shift + V to show the video.

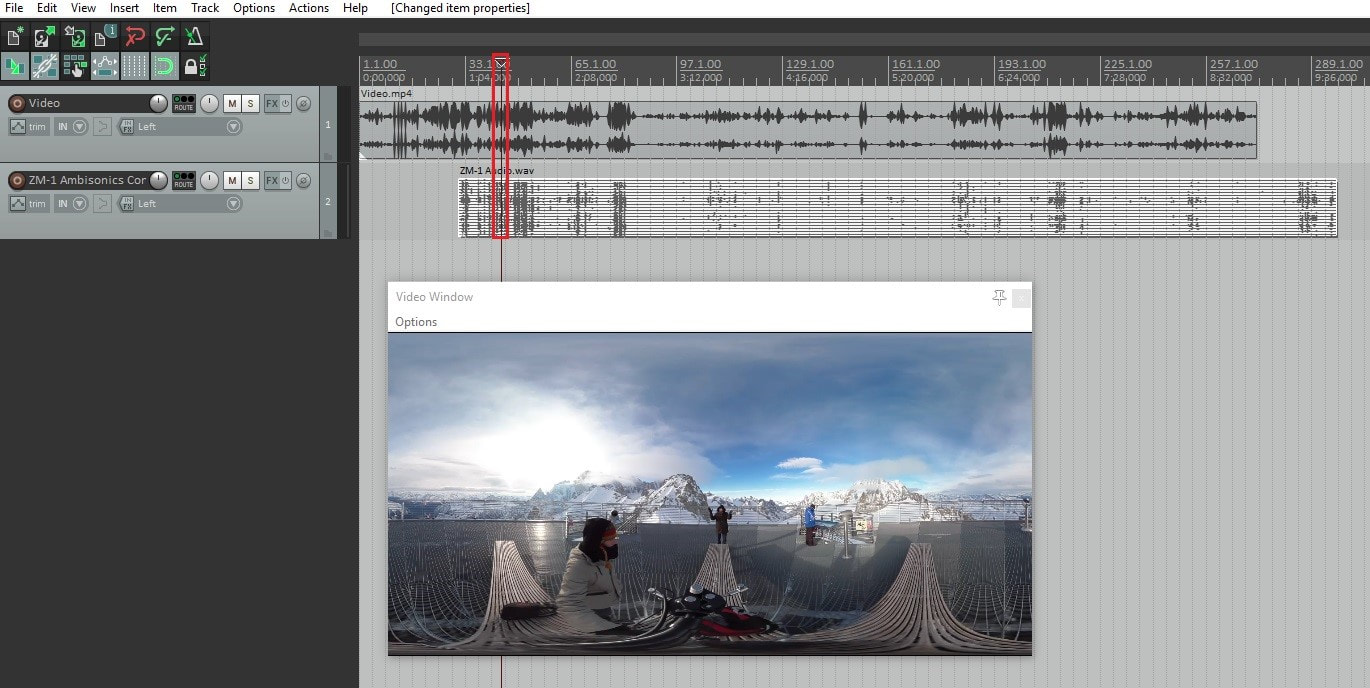

Import the 19 channel file from your ZM-1 and sync it with the video file.

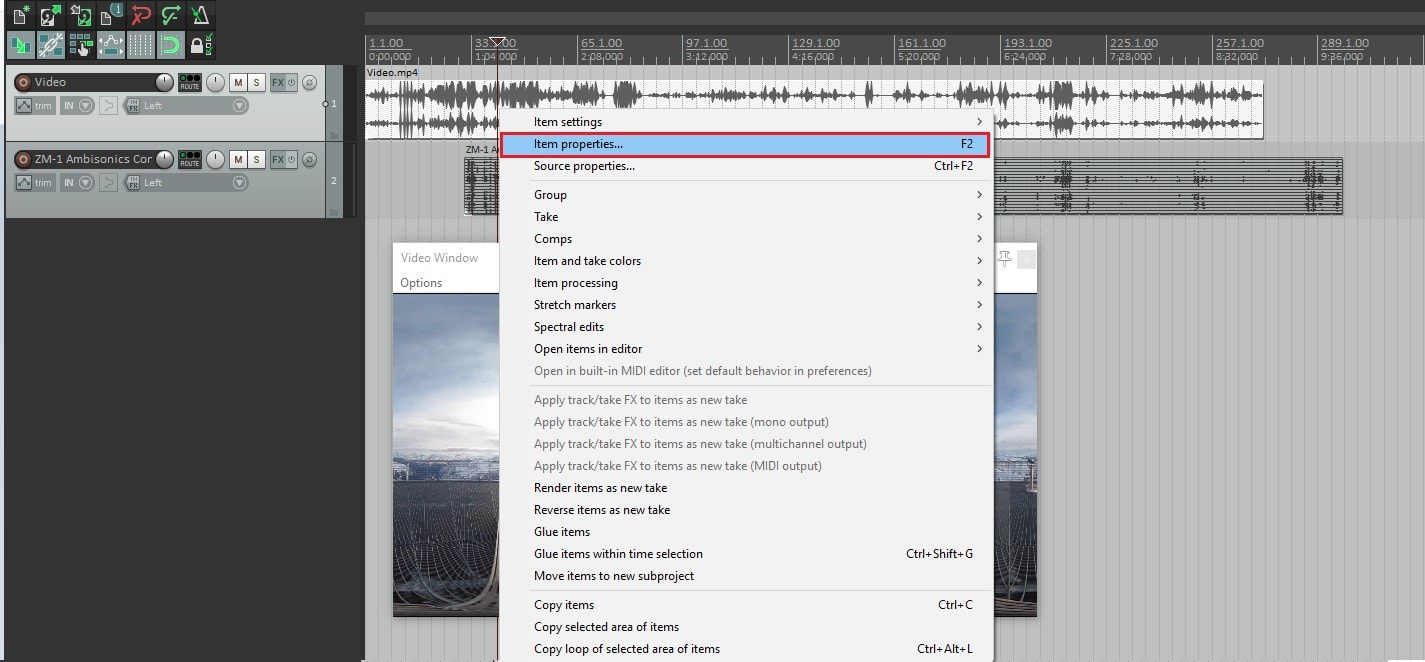

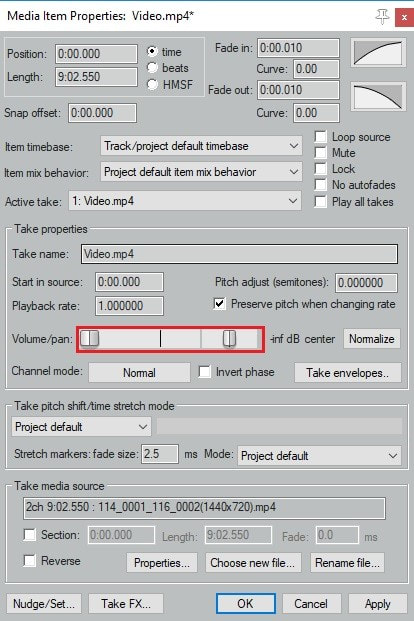

Since we will not use the audio from the video track, we need to either remove it or set its volume to the minimum value.

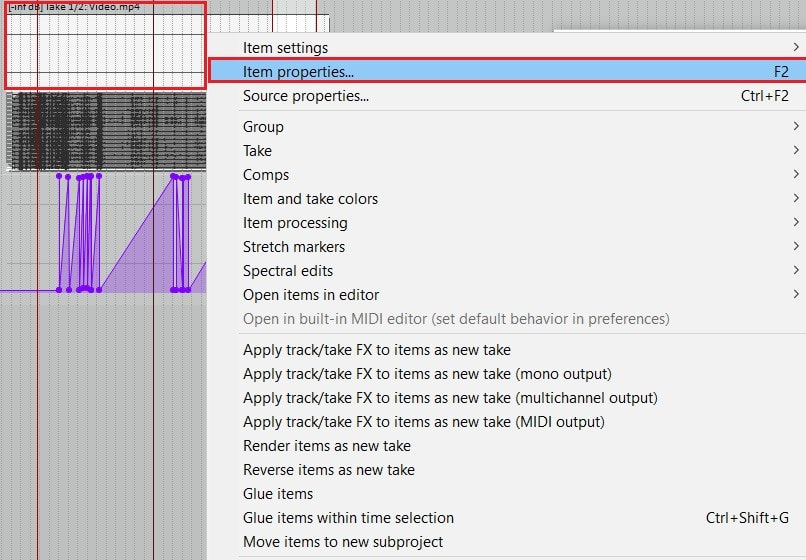

To do so, right click on the Video track – Item properties – move the volume slider to the minimum.

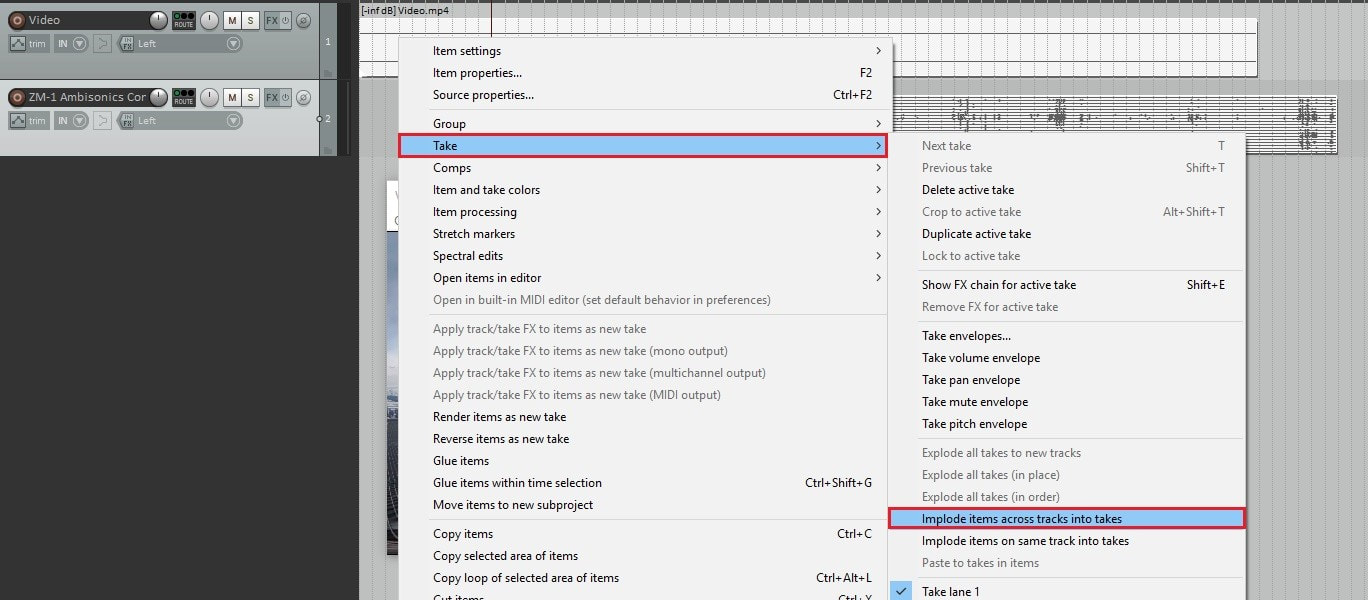

Select both the video and audio track and right click – Take – implode items across tracks into takes

This will merge video and audio to the same track but as different takes.

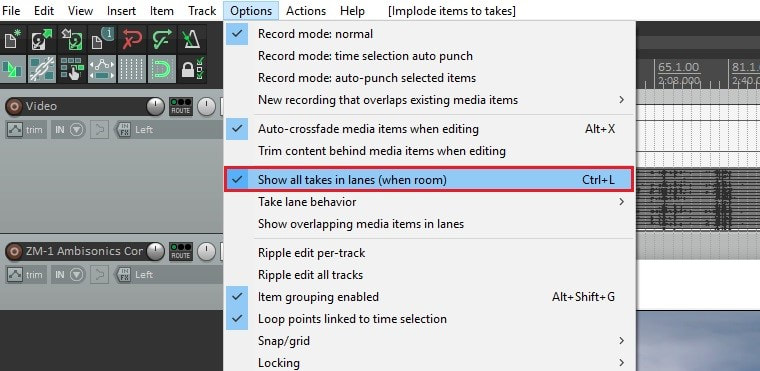

To show both takes, click on Options – Show all takes in lanes (when room) or press Ctrl + L

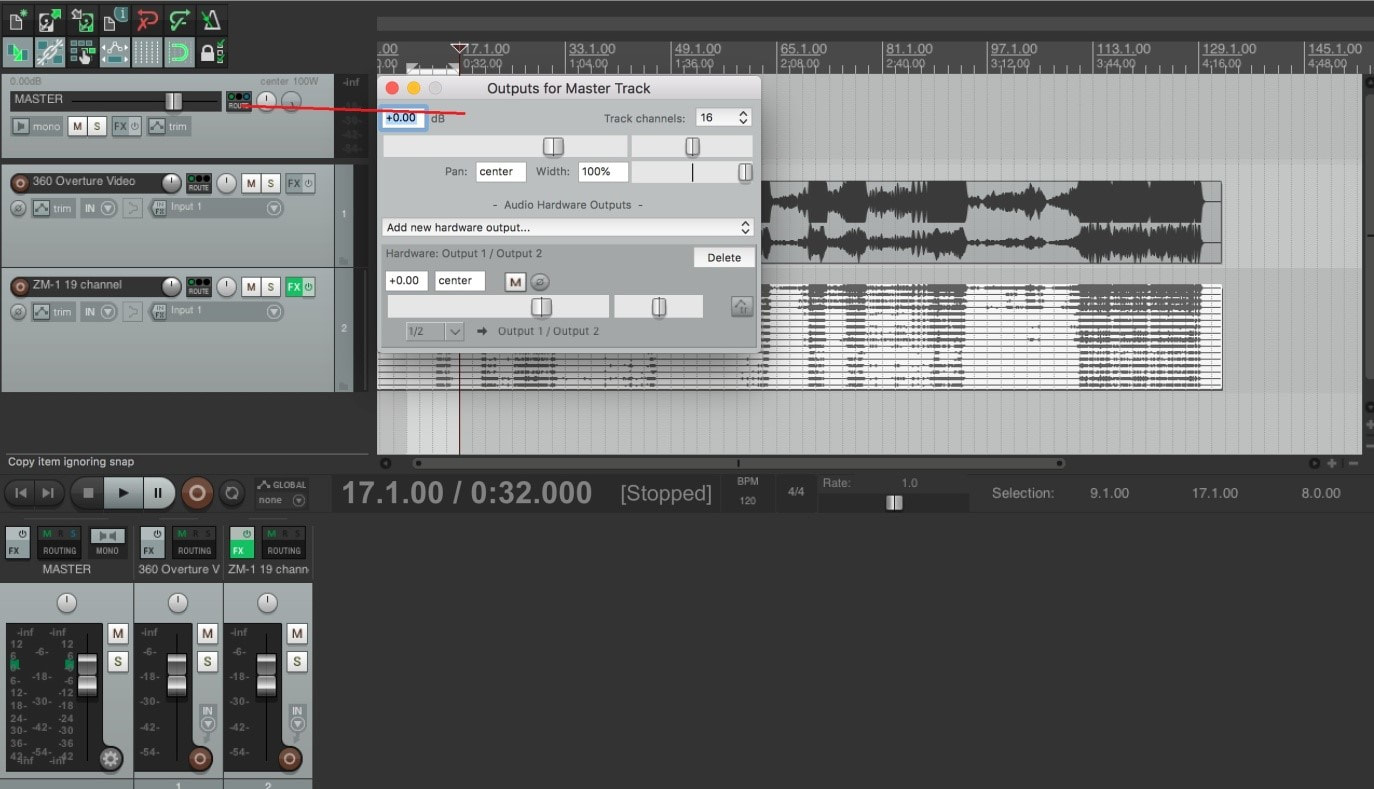

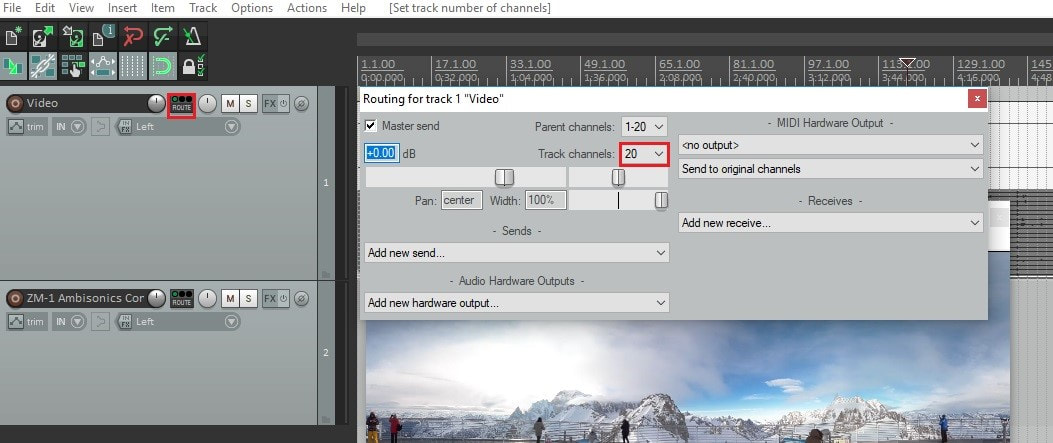

Click on the Route button and change the number of track channels from 2 to 20. This is required to use all 19 channels of the ZM-1.

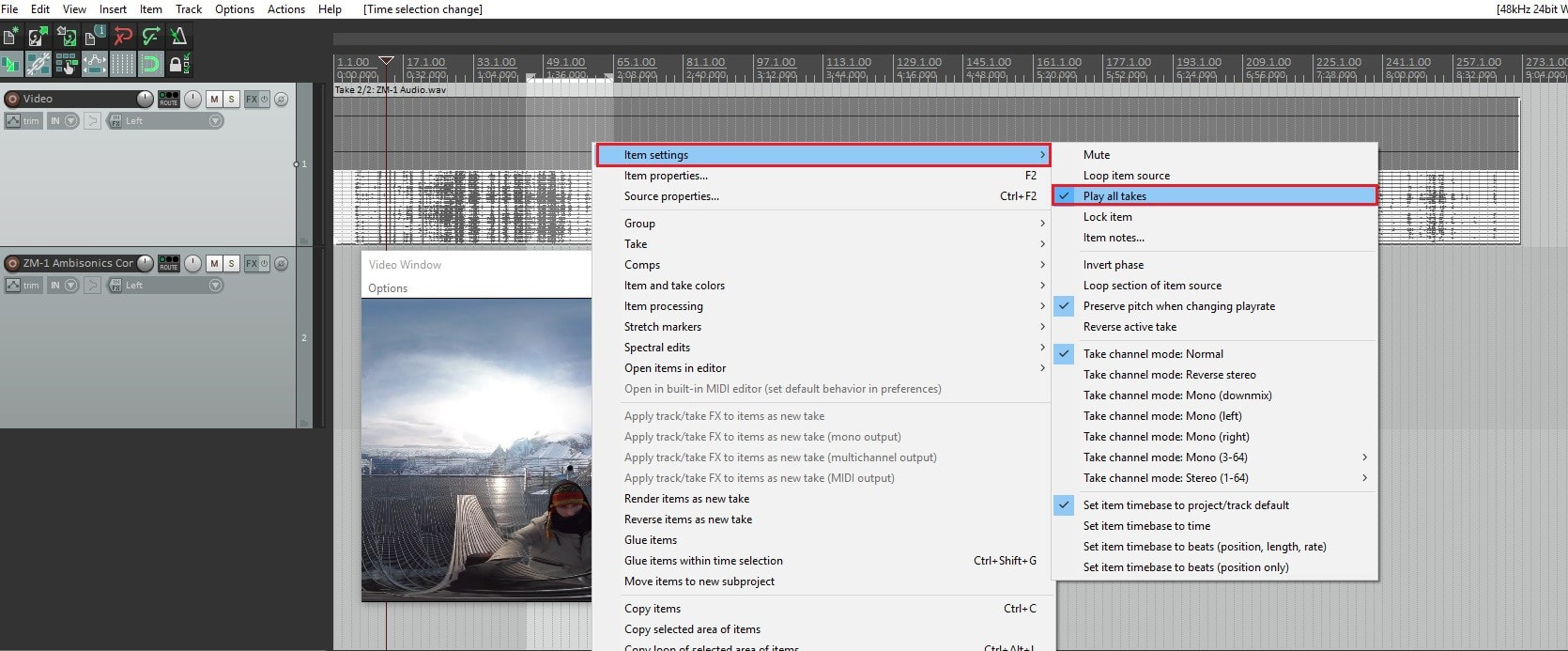

If we press play right now, only the selected take will play. To play both takes simultaneously:

Right click on the track – Item settings – Play all takes.

Next we will need to convert the 360 video to equirectangular video to visualize and control the rotation of the camera.

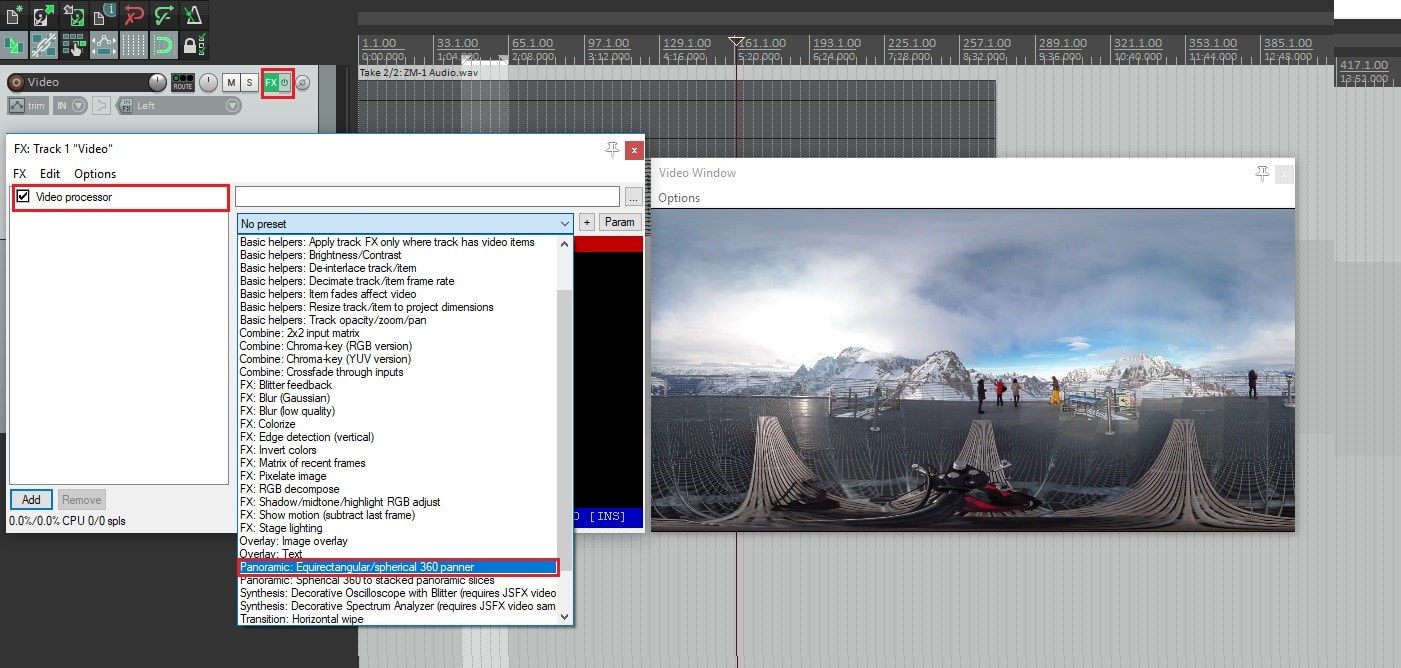

To do so, open the FX window on our main track and search for Video processor.

On the FX window add as well:

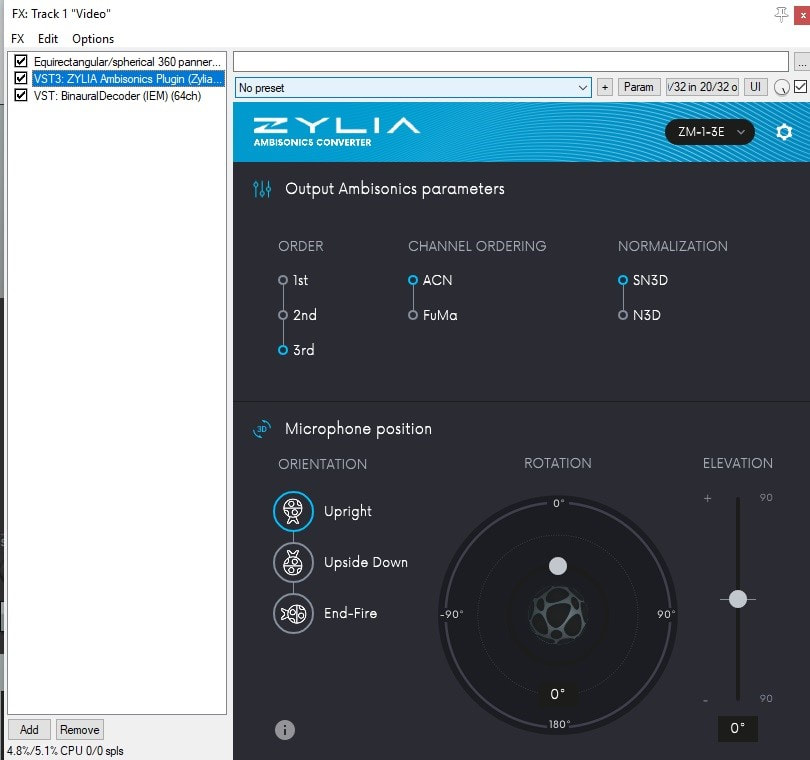

- ZYLIA Ambisonics Converter plugin, set to 3rd Ambisonics Order. Make sure to set the microphone orientation to match how you positioned the microphone during recording.

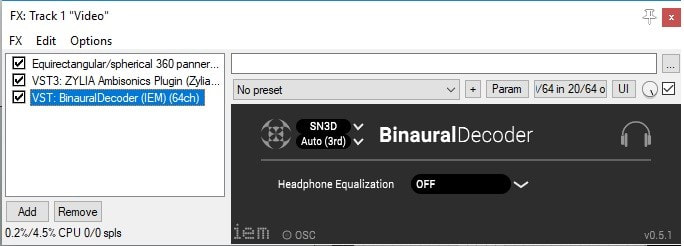

- IEM Binaural Decoder. Here you can choose headphone equalization to your liking.

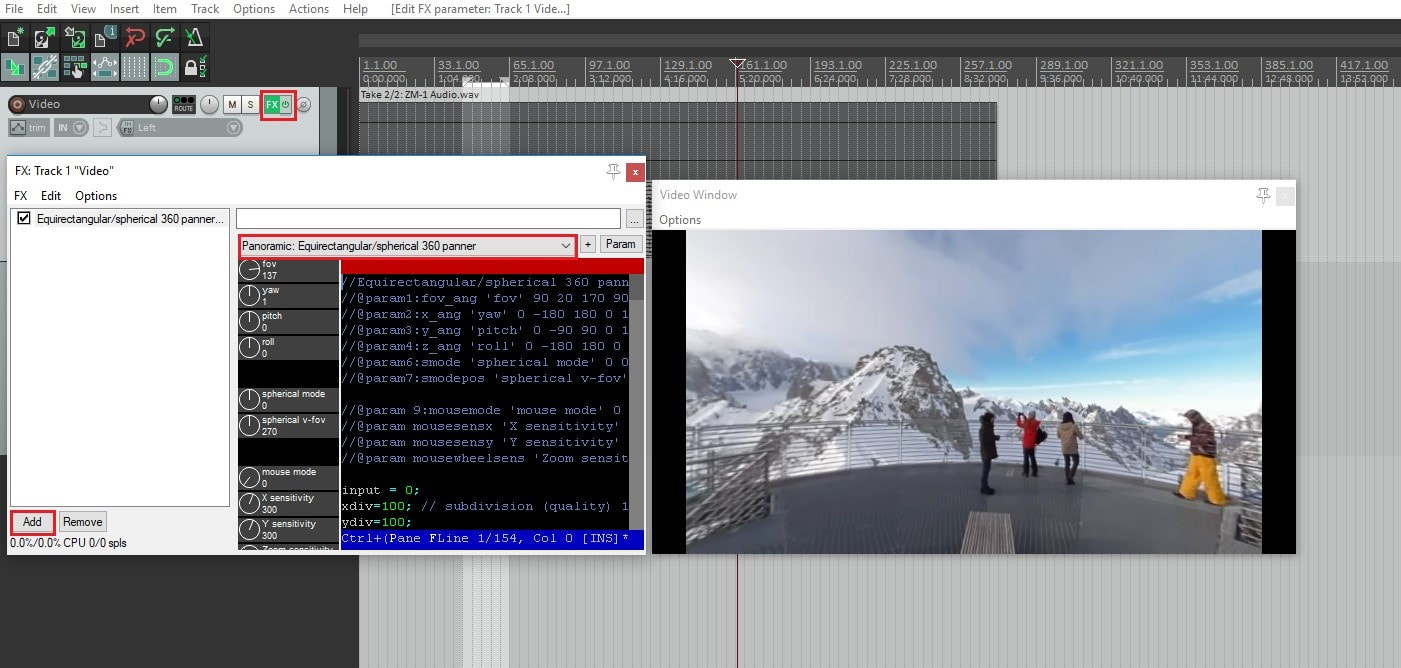

The next steps will be dedicated to linking the Rotation of the ZYLIA Ambisonics Converter and the YAW parameter from the Video Processor.

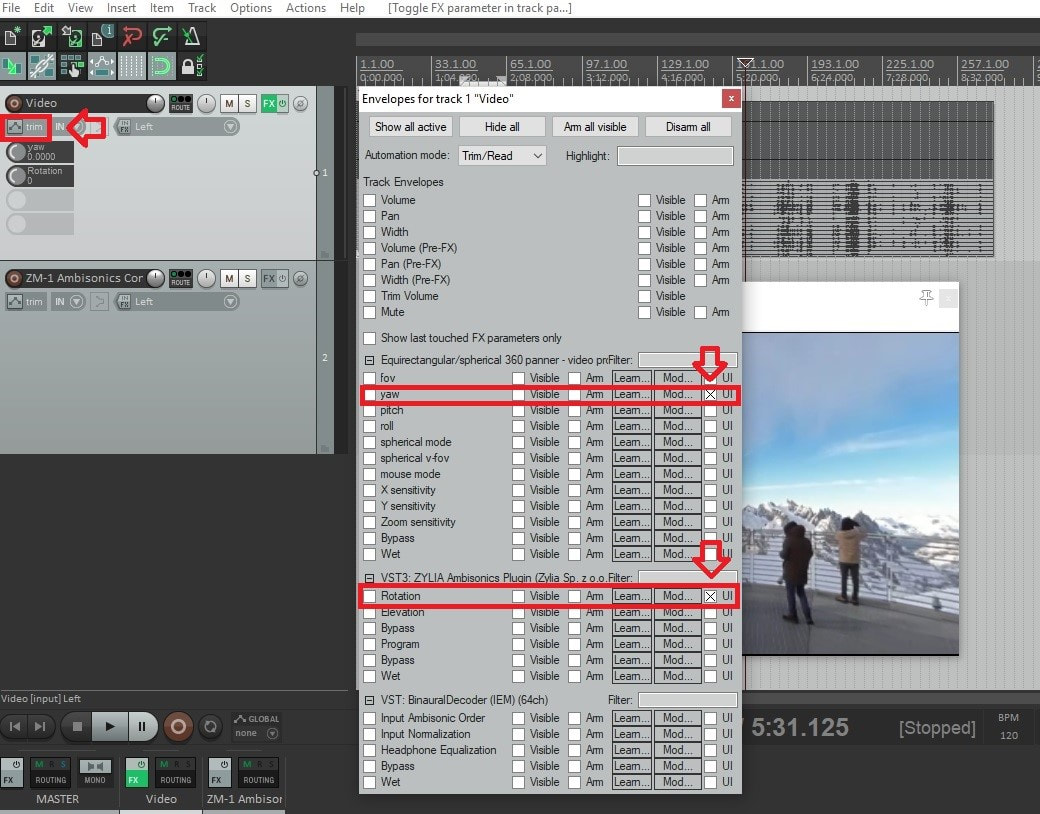

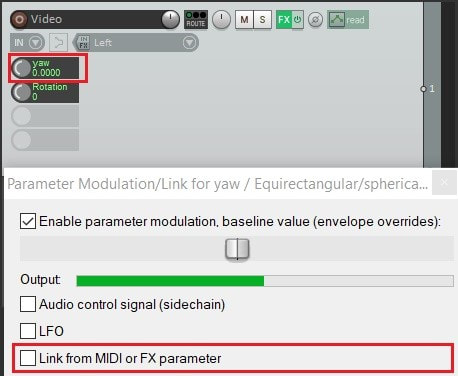

On the main track, click on the Track Envelopes/Automation button and enable the UI for the YAW (in Equirectangular/spherical 360 panner) and Rotation (in ZYLIA Ambisonics Converter plugin).

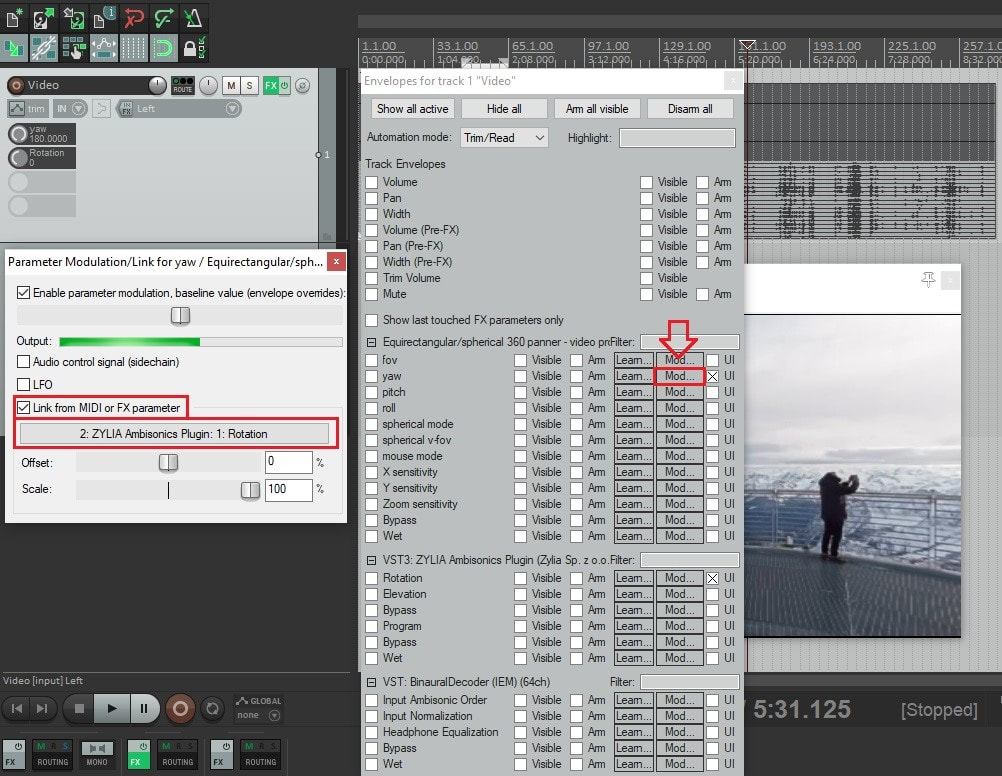

On the same window, on the YAW parameters click on Mod… (Parameter Modulation/Link for YAW) and check the box Link from MIDI or FX parameter.

Select ZYLIA Ambisonics plugin: Rotation

On the Parameter Modulation window you are able to fine-tune the rotation of the audio with the video.

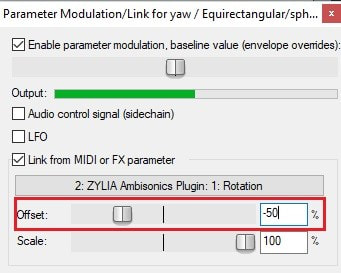

Here we changed the ZYLIA Ambisonics plugin Rotation Offset to -50 % so that the front of the video matches the front of the ZM-1 microphone.

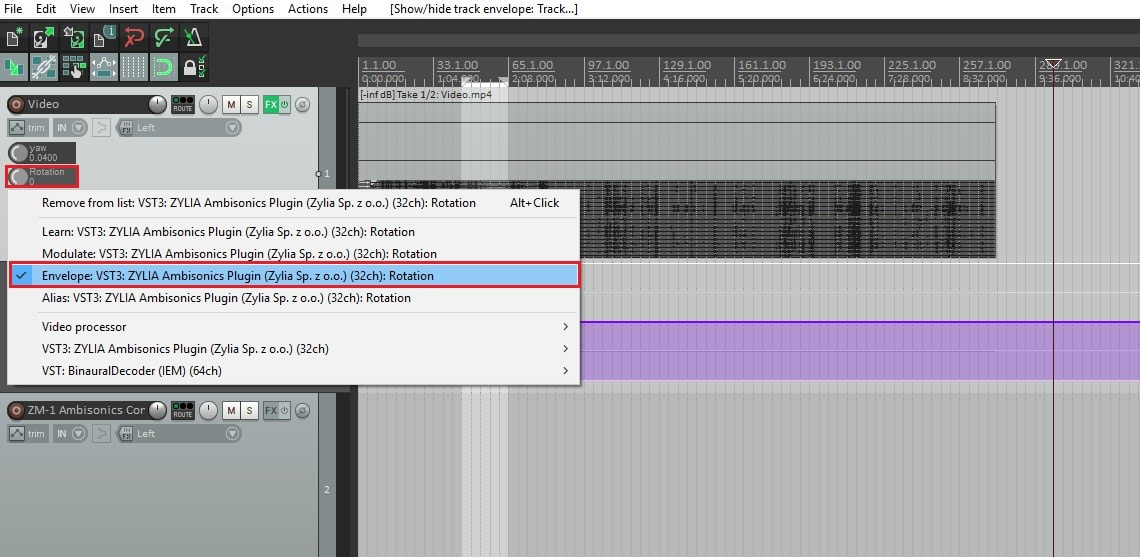

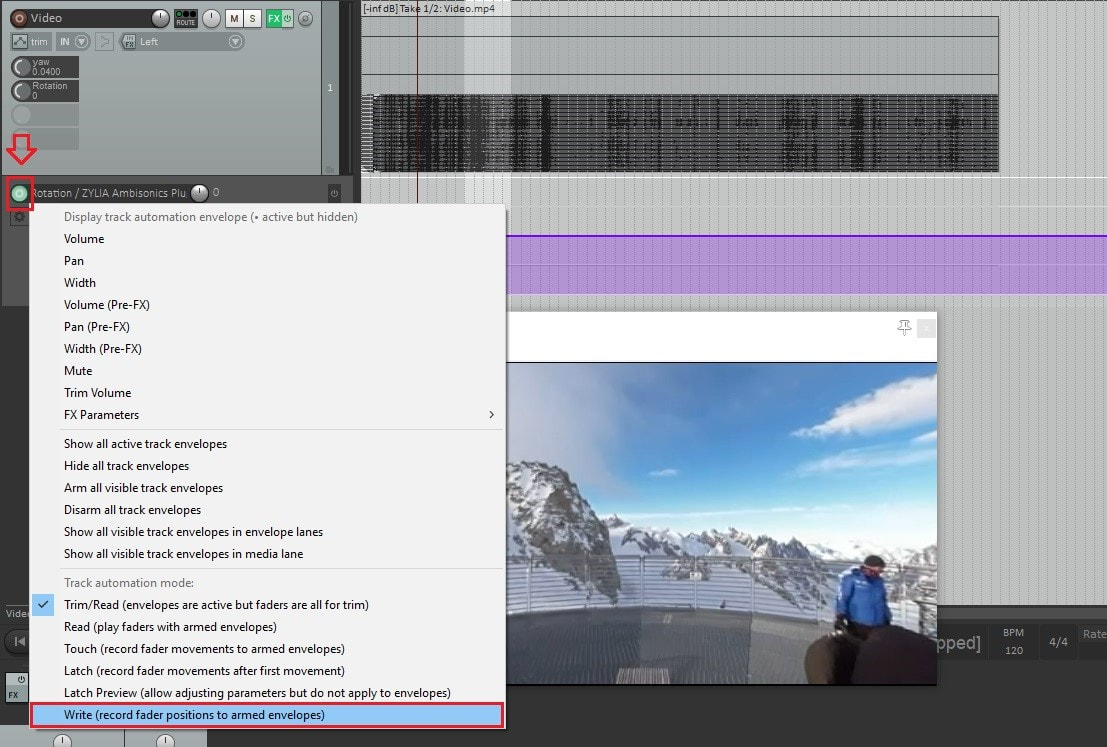

To record the automation of this rotation effect, right-click on the Rotation parameter and select Envelope to make the envelope visible.

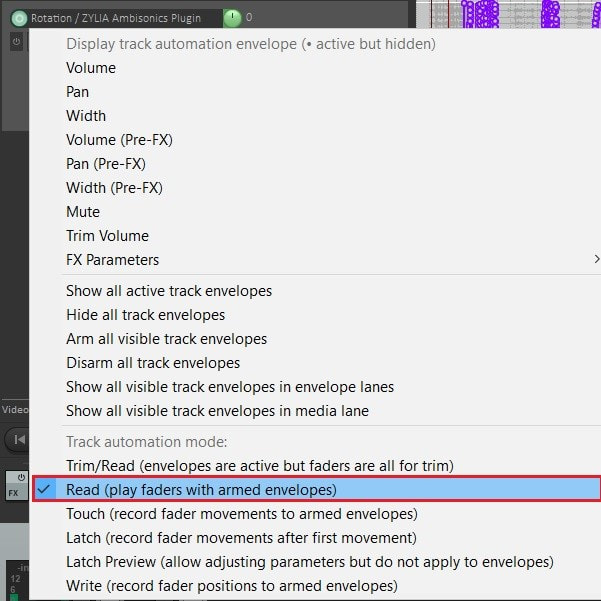

After writing the automation, change the envelope to Read mode instead of Write mode.

Right click on Yaw and uncheck “Link from MIDI or FX parameter”

If you have been working with a compressed video file, this is the time to replace it with the original media file. To do this, right click on the video track and select item properties.

Then select your original uncompressed video file.

You should now have your project ready for Rendering.

Click on File – Render and set Channels to Stereo.

On the Output format choose your preferred Video format.

We exported our clip in .mov file with video codec H.264 and 24bit PCM for the Audio Codec.

Simply download the zip package, extract files and import the appropriate surround preset into your Reaper session.

- ZYLIA ZM-1 microphone array;

- A 360 camera (e.g. Insta 360 One X);

- A computer.

After following steps A, B and C, you’ll have a video file with 1st order Ambisonics spatial audio that can be played on your computer with compatible video players (e.g. VLC) or uploaded to YouTube.

There are many ways to achieve this, depending on the available gear. A simple, flexible and sturdy way to do it is to use a standard microphone stand and 2 articulated “magic arms” like this one: Amazon.com

- Bring your files (the 19-channel audio from the ZM-1 and the video one from your camera) onto the DAW;

- Lower the video volume;

- Align the clap position;

- Trim the ends;

- Adjust the volume of the audio file;

- Add ZYLIA Ambisonics Converter Plugin to the audio track and adjust the settings;

- Change the master output to 4 channels;

- Render!

Here’s a video showing all the sub-steps in Reaper:

If you need to check how the recording sounds, add a binaural decoder plugin (e.g. IEM Binaural decoder) to the audio track, after ZYLIA Ambisonics Converter.

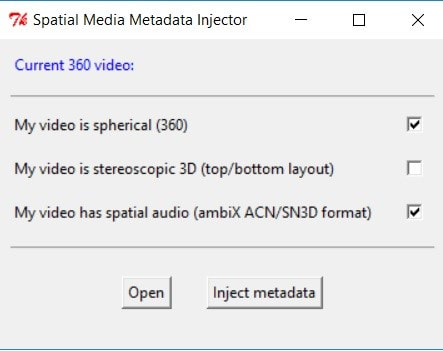

This is the last step. Just load the file rendered / exported from Reaper into Google’s Spatial Media Metadata Injector, check the appropriate box for your kind of 360 video and check the bottom option: “My video has spatial audio”. Click on “Inject metadata” and save the new injected file.

Now you can enjoy the spatial audio

- By playing it on your computer, using VLC player, for example (very few players will handle 360 video + Ambisonics correctly); or…

- By uploading it to YouTube.

IEM Binaural decoder, part of a suite of Ambisonics plugins

https://www.amazon.com/Stage-MY550-Microphone-Extension-Attachment/dp/B0002ZO3LK/ref=sxbs_sxwds-stvp?keywords=microphone+clamp+arm&pd_rd_i=B0002ZO3LK&pd_rd_r=6860690f-2adc-4b00-a80e-de436939ed2b&pd_rd_w=GlE2J&pd_rd_wg=qrTTx&pf_rd_p=a6d018ad-f20b-46c9-8920-433972c7d9b7&pf_rd_r=GGS60M0DGQ5DQF44594V&qid=1575529629

https://www.amazon.com/Aluminum-Microphone-Swivel-Camera-Monitor/dp/B07Q2V6CBC/ref=sr_1_186?keywords=microphone+boom+clamp&qid=1575529342&sr=8-186

Amazon:

https://www.amazon.com/Neewer-Adjustable-Articulating-Mirrorless-Camcorders/dp/B07SV6NVDS/ref=sr_1_205?keywords=microphone+clamp+arm&qid=1575531393&sr=8-205

Allegro generic alternative for us to test: https://allegro.pl/oferta/ramie-przegubowe-11-magic-arm-do-kamery-8505530470

- Automation of plug-in parameters for Digital Audio Workstations.

This allows for programmed and automatic adjustment of Rotation and Elevation parameters directly from your DAW. Users can now emulate different movements of ZYLIA ZM-1, such as rotation. This adds new creative possibilities to the sound design and post-production processes.

- ZYLIA Ambisonics Converter plugin now properly loads the microphone array version saved in personal presets.

- ZYLIA Ambisonics Converter plugin now ignores typed-in incorrect values of Rotation and Elevation parameters. Values of Rotation out of range of [-180, 180] and Elevation out of range of [-90, 90] do not trigger any changes.
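The behaviour described in the last item can be pictured with the following sketch. It is not the plugin's actual code, just an illustration of "out-of-range input leaves the parameter unchanged" using the ranges given above; the function name is hypothetical.

```python
ROTATION_RANGE = (-180.0, 180.0)   # degrees, as stated in the release notes
ELEVATION_RANGE = (-90.0, 90.0)    # degrees, as stated in the release notes


def apply_typed_value(current, typed, valid_range):
    """Return the new parameter value, or the current one if the input is invalid."""
    low, high = valid_range
    try:
        value = float(typed)
    except ValueError:
        return current          # not a number: ignore the input
    if low <= value <= high:
        return value            # valid: accept the typed value
    return current              # out of range: do not trigger any change


rotation = 10.0
rotation = apply_typed_value(rotation, "200", ROTATION_RANGE)   # ignored
rotation = apply_typed_value(rotation, "-45", ROTATION_RANGE)   # accepted
print(rotation)
```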
