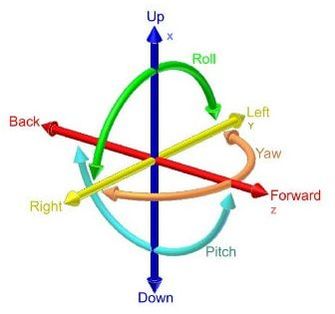

Recordings made with 6DOF Development Kit by Zylia

What would happen if, on a rainy and cloudy day during a walk along a forest path, you could move to a completely different place thousands of kilometers away? Putting on the goggles would take you into a virtual reality world: you would find yourself on a sunny island in the Pacific Ocean, on the beach, admiring the scenery and walking among the palm trees, listening to the sound of the waves and the colorful parrots screeching over your head. It sounds unrealistic, but such goals are driving the latest trends in the development of Augmented / Virtual Reality technology (AR / VR). Technology and content for full VR, or 6DoF (6 Degrees-of-Freedom), rendered in real time will give users the opportunity to interact with and navigate through virtual worlds. To experience the feeling of "full immersion" in a virtual world, high-quality images must be accompanied by equally realistic sound. Only when each individual sound source in the virtual audio landscape is delivered to the user as a separate object signal can the sound reliably reflect both the environment and the way the user interacts with it.

What are Six Degrees of Freedom (6DOF)?

"Six degrees of freedom" (6DoF) is the number of independent ways an object can move in three-dimensional space, such as the real world. It means that six parameters describe the object's motion: three translations (forward/back, left/right, up/down) and three rotations (yaw, pitch and roll).

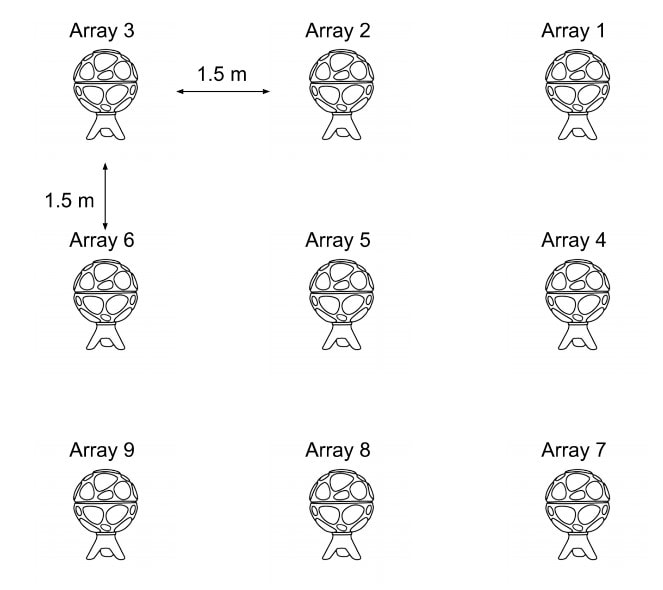

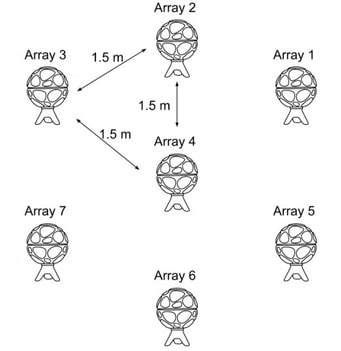
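In a 360 video, only the viewer's head rotation is tracked (often called 3DoF); 6DoF adds the listener's position. The six parameters can be written down as a simple listener pose. A minimal Python sketch, assuming metres and degrees; `Pose6DoF` is a hypothetical type for illustration, not part of any Zylia tool:

```python
from dataclasses import dataclass, fields


@dataclass
class Pose6DoF:
    """Hypothetical listener pose: three translations plus three rotations."""
    x: float = 0.0      # left/right position (metres, assumed unit)
    y: float = 0.0      # forward/back position (metres)
    z: float = 0.0      # up/down position (metres)
    yaw: float = 0.0    # turning the head left/right (degrees, assumed unit)
    pitch: float = 0.0  # tilting the head up/down (degrees)
    roll: float = 0.0   # tilting the head towards a shoulder (degrees)

    def rotation_only(self) -> "Pose6DoF":
        """The 3DoF subset used by 360 video: keep rotation, reset position."""
        return Pose6DoF(yaw=self.yaw, pitch=self.pitch, roll=self.roll)


# The six independent parameters, in declaration order.
DOF_NAMES = tuple(f.name for f in fields(Pose6DoF))

listener = Pose6DoF(x=1.5, y=-2.0, z=0.0, yaw=30.0, pitch=-5.0, roll=0.0)
head_only = listener.rotation_only()
```

In a 6DoF scene, a renderer needs all six values to update the distance and direction to every sound source; a 360 player only uses the rotation part.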

There are many possible uses of 6DoF VR technology. Imagine exploring a movie set at your own pace: you could stroll between the actors, look at the action from different sides, listen in on any conversation and pay attention only to what interests you. Such technology would provide truly unique experiences. A wide spectrum of virtual reality applications drives the development of technology in the audio-visual industry. Until now, image-related technologies have been developing much faster, leaving sound far behind. We have made the first attempts to show that 6DoF for sound is also achievable.

How to record audio in 6DoF?

It is extremely challenging to record high-quality sound from many sources present in a sound scene at the same time. We managed to do this using nine ZYLIA ZM-1 multi-track microphone arrays evenly spaced in the room. In our experiment, the sound field was captured using two different spatial arrangements of ZYLIA ZM-1 microphones placed within and around the recorded sound scenes. In the first arrangement, nine ZYLIA ZM-1 microphones were placed on a rectangular grid. The second configuration consisted of seven microphones placed on a grid composed of equilateral triangles.

Fig. Setup of 9 and 7 ZYLIA ZM-1 microphone arrays

Microphone signals were captured using a personal computer running the GNU/Linux operating system. Signals from the individual ZM-1 arrays were recorded with specially designed software. We recorded a few takes of a musical performance with instruments such as an Irish bouzouki (a stringed instrument similar to the mandolin), a tabla (Indian drums), acoustic guitars and a cajon.

Unity and 3D audio

To present the interesting possibilities of audio recorded with multiple microphone arrays, we created a Unity project with 7 Ambisonics sources. In this simulated environment, you will find three sound sources (our musicians) represented by bonfires, and you can move around among them. The immersive audio is so fluid and natural that you can actually feel you are inside the scene.
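The post describes only the shape of the two microphone layouts, not the spacing or room size. To make the geometry concrete, here is a small Python sketch that generates 2D positions for a 3 x 3 rectangular grid and for one seven-point layout built from equilateral triangles (a centre array surrounded by a hexagon). The `spacing` value and the hexagonal interpretation are assumptions for illustration, not the exact setup used in the recordings:

```python
import math


def rectangular_grid(rows: int = 3, cols: int = 3, spacing: float = 1.0):
    """(x, y) positions of rows*cols arrays on a square grid centred on the origin."""
    x0 = (cols - 1) * spacing / 2
    y0 = (rows - 1) * spacing / 2
    return [(c * spacing - x0, r * spacing - y0)
            for r in range(rows) for c in range(cols)]


def triangular_grid_7(spacing: float = 1.0):
    """A centre array plus six neighbours at 60-degree steps (assumed layout).

    Each neighbour is `spacing` from the centre and from its two adjacent
    neighbours, so the layout is built from six equilateral triangles.
    """
    ring = [(spacing * math.cos(math.radians(60 * k)),
             spacing * math.sin(math.radians(60 * k))) for k in range(6)]
    return [(0.0, 0.0)] + ring


nine = rectangular_grid()      # 3 x 3 layout, 9 arrays
seven = triangular_grid_7()    # hexagonal layout, 7 arrays
```

A triangular layout keeps every array at the same distance from its nearest neighbours, whereas on a rectangular grid the diagonal neighbours are further away than the horizontal and vertical ones.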


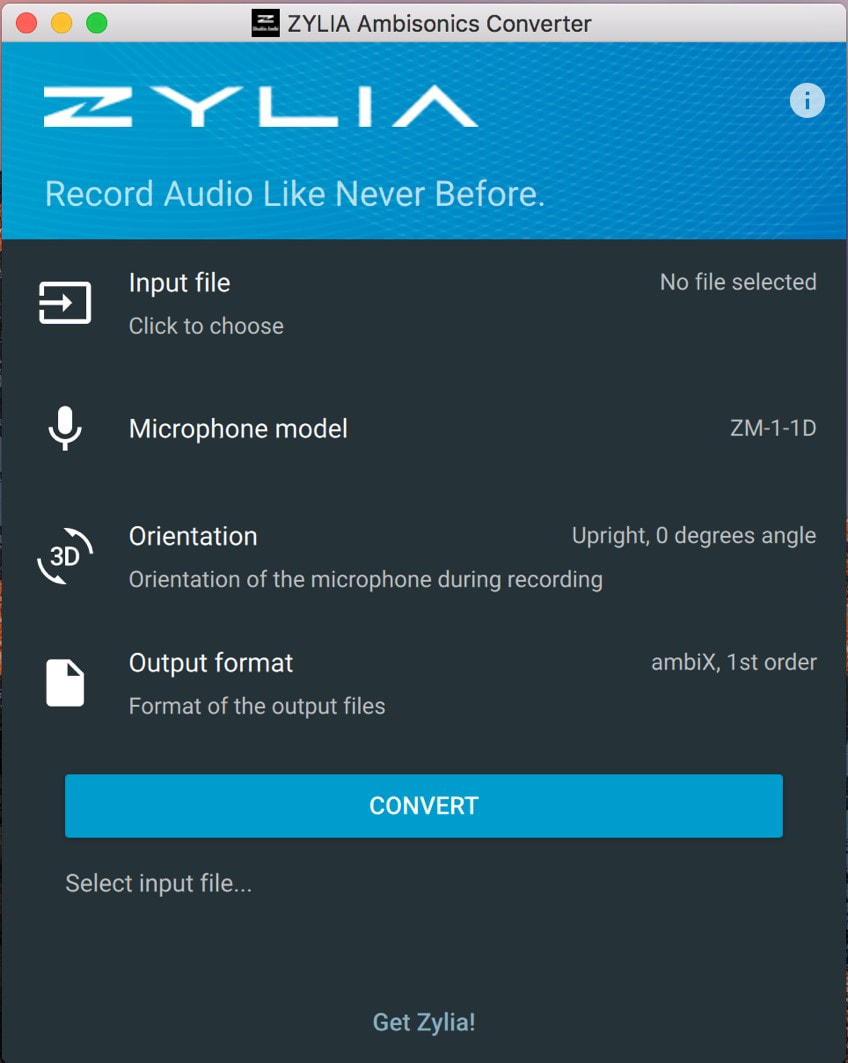

For scenario A, we used the regular stitched video from the Gear 360 and a 1st order Ambisonics audio file.

Scenario A - Basic steps taken:

Here are the detailed steps taken for the conversion to Ambisonics:

*The standard currently (August 2018) used on YouTube.

Since the source material is the same as in scenario A, we list here only the steps that differ.

Scenario B steps:

- Process the stereoscopic video from the Gear 360 in Insta360 Studio to get the ‘tiny planet’ effect.

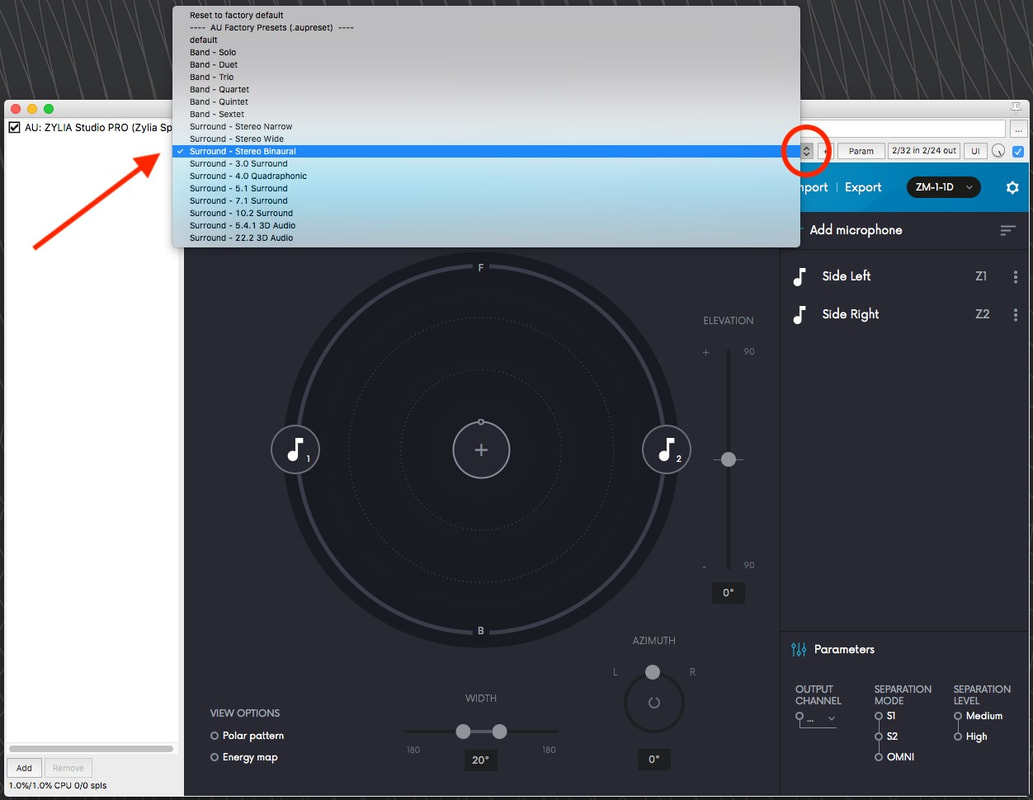

- Convert the raw 19-channel file from ZYLIA Studio to binaural, using ZYLIA Studio PRO running in REAPER.

- Edit the 360-degree video and Ambisonics audio in Adobe Premiere.

We had the great pleasure of meeting Yao Wang during our visit to Berklee College of Music. A few days ago, Yao published her project 'Unraveled', a phenomenal immersive 360 audio and visual experience. As a listener, you find yourself at the center of all elements, surrounded by choir, strings, synths, and imagery. You can experience being in the middle of the music scene. Get to know more about this project and read our interview with Yao Wang.

Artwork by @cdelcastillo.art

Yao: Last spring, I was 9 months away from graduating from Berklee College of Music, and the panic of post-graduation uncertainty was becoming unbearable. I was struggling to plan my career and I wanted to do something different. I spent a whole summer researching the ins and outs of spatial audio and decided to base my Senior Portfolio Project on my research. What I found is that spatial audio is often used in VR games and films to recreate a 3D environment. It is rarely used as a tool for music composition and production. I saw my opportunity.

With the help and hard work of my team (around 60 students involved), we succeeded in creating ‘Unraveled’, an immersive 360 audio and visual experience, where the audience would find themselves at the center of all elements, being surrounded by choir, strings, synths and imagery. My role was the project leader, composer, and executive producer. I found a most talented team of friends to work on this together: Gareth Wong and Deniz Turan as co-producers, Carlos Del Castillo as visual designer, Ben Knorr as music contractor, Paden Osburn as music contractor and conductor, Jeffrey Millonig as lead engineer and Sherry Li as lead vocalist and lyricist. Not to mention the wonderful musicians and choir members. I am truly grateful for their hard work, dedication and focus.

‘Unraveled’ also officially kickstarts my company ICTUS, a company that provides music and sound design content specializing in spatial audio solutions. For immersive experiences such as VR, AR and MR, we are your one-stop audio shop for a soundscape that completes the reality. We provide music composition, sound design, 360 recording, mixing, mastering, post-production, spatialization and visualizing services tailored to your unique project.

We are incredibly humbled that 'Unraveled' has been officially selected for the upcoming 44th Seattle International Film Festival, which runs May 17 to June 10, and to have been accepted for the Art and Technology Exhibition at the Boston Cyberarts Gallery, from Saturday May 26 to Sunday July 1.

‘Unraveled’ has been officially selected for the upcoming 44th Seattle International Film Festival, which runs May 17 - June 10, with more than 400 films from 80 countries, running 25 days, and with over 155,000 attendees!

Yao: I worked very closely with Paden Osburn, the conductor and music contractor, to schedule, revise, coordinate and plan the session. Paden is a delight to work with; she basically allowed me to focus on the music while she coordinated with the rest of the amazing choir members. We developed a great workflow.

I also had many meetings with the team of engineers as well as with many professors to figure out the simplest, most efficient way to record. It was indeed very challenging and stressful to pull off, but it was also one of the most magical nights of my life.

Yao: On October 27, 2017, we had a recording session of the choir parts with 40 students from Berklee College of Music. The recording was done using three ambisonic microphones (Zylia, Ambeo, TetraMic). We tried to create a 320-piece choir by asking the 40 students to shift their positions around the microphones for every overdub. We also recorded 12 close-miked singers to have some freedom in spatializing individual mono sources.

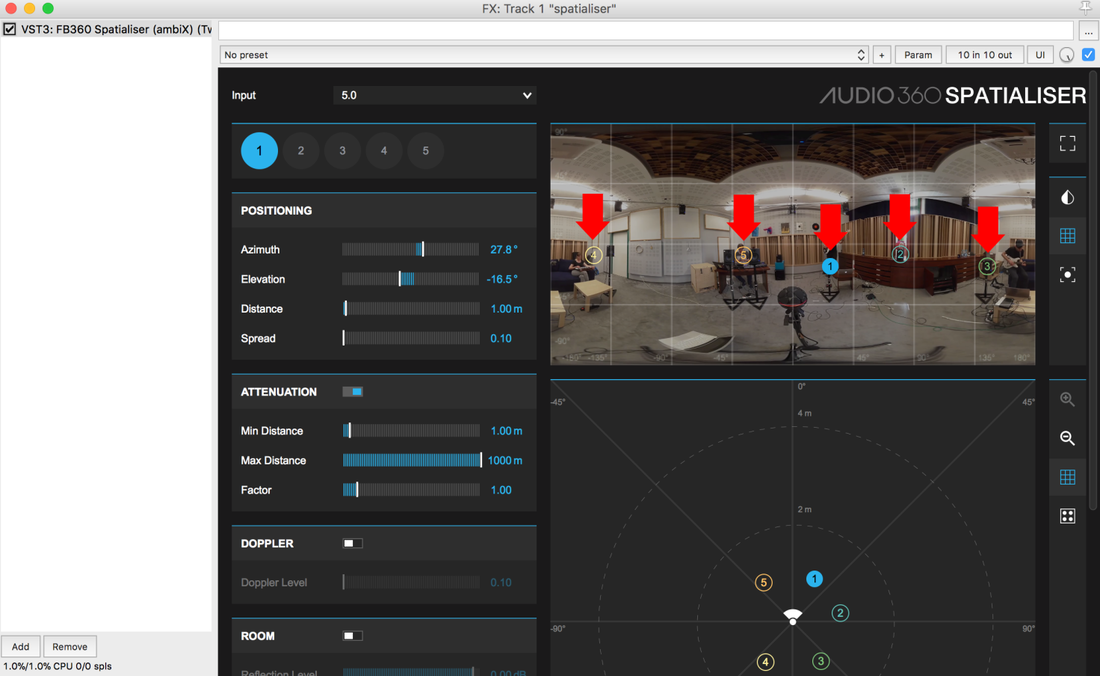

The spatialization was achieved with the Facebook 360 Spatial Workstation in REAPER. Many sound design elements were created in Ableton and REAPER. The visuals were done in Unity. We basically created a VR game and recorded a 360 video of the performance. Carlos Del Castillo did an outstanding job creating an abstract world with many moments synced to the musical cues.

Zylia: What do you think about using the ZYLIA ZM-1 mic for recording immersive 360 audio?

Yao: I clearly remember meeting Tomasz Zernicki on the Sunday prior to our choir session. The Zylia team came to Berklee and demonstrated the capabilities of their awesome microphone, and I thought I had nothing to lose, so I asked for a potential (and super last-minute!) collaboration that has proven to be fruitful. It has also brought me a great friendship with Edward C. Wersocki, who operated the microphone at our session. Unfortunately, he couldn't stay for the whole session, so only some of the lines were recorded with the ZYLIA. He also guided me through the A to B format conversion, which was extremely easy and user-friendly. I loved the collaboration and will keep pursuing and exploring more possibilities with spatial audio. Hopefully, this will be the first of many collaborations.

Behind the scenes, ZYLIA ZM-1, photo by @jamiexu0528.

Yao: My long-term goal would be to establish my company ICTUS as one of the leading experts in the field of spatial audio. We are currently working on an interactive VR music experience called ‘Flow’ with an ethnic ensemble, GAIA, and the visuals are influenced by Chinese water paintings. The organic nature of this project will be a nice contrast to the futuristic space vibe of ‘Unraveled’.

Another segment of the company is focused on creating high-quality, cinematic spatial audio for VR films and games. We are producing a 3D audio series featuring short horror/thriller stories with music, descriptive narration, dialogue, SFX and soundscapes. Empathy is truly at the heart of this project: some of our stories will have a humanitarian purpose, and we will be associated with many organizations that are fighting to end domestic abuse, human trafficking, rape, abuse and other violent crimes. We hope to bring more awareness and traffic to these causes with our art. Spatial audio is incredibly powerful: it really allows you to be in the shoes of the victims, and without the visuals, I swear your imagination will go crazy!

Yao Wang

Yao is a composer, sound designer, producer and artist. She recently graduated from Berklee College of Music with a Bachelor of Music Degree in Electronic Production & Design and Film Scoring. Passionate about immersive worlds and storytelling, Yao has made it her mission to pursue a career combining her love for music, sound and technology. With this mission in mind, she is now the CEO and founder of ICTUS, a company that provides spatial audio solutions for multimedia.


There are also quality improvements and bug fixes for 2nd and 3rd order HOA. This update significantly improves the perceived effect of rotation in the HOA domain and corrects the spatial resolution for 2nd and 3rd order. We recommend updating to this new version.
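Rotation in the Ambisonics domain is what lets a recorded sound scene follow head movements. For first order, a rotation about the vertical (yaw) axis only mixes the X and Y channels; W and Z are unchanged. The Python sketch below shows that simplest case, assuming AmbiX channel order (ACN: W, Y, Z, X) and a counter-clockwise-positive azimuth convention. It is not the rotation code used in ZYLIA software; 2nd and 3rd order signals need larger per-order rotation matrices, which are not shown here.

```python
import math


def rotate_foa_yaw(w: float, y: float, z: float, x: float, angle_deg: float):
    """Rotate one first-order AmbiX frame (ACN order W, Y, Z, X) about the vertical axis.

    A source at azimuth phi moves to phi + angle_deg (counter-clockwise positive).
    To compensate a listener's head yaw of theta, rotate the scene by -theta.
    """
    a = math.radians(angle_deg)
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    return w, y_rot, z, x_rot


# A source straight ahead (azimuth 0) in SN3D: W = 1, Y = 0, Z = 0, X = 1.
front = (1.0, 0.0, 0.0, 1.0)
turned = rotate_foa_yaw(*front, angle_deg=90.0)  # source now at azimuth +90 (left)
```

Sign conventions differ between tools, so when comparing against a plug-in it is worth checking which direction counts as positive yaw.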

Our previous blog post “2nd order Ambisonics Demo VR” described the process of combining audio with the corresponding 360 video into a 360 movie on Facebook. The approach presented there uses the 8-channel TBE signal from ZYLIA Ambisonics Converter, which converts the audio into the Ambisonics domain. As a result, we get a nice 3D sound image that rotates and adapts along with our virtual movements. However, it is still not possible to adjust parameters (gain, EQ correction, etc.) or change the relative position of the individual sound sources present in the recorded sound scene.
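The channel count grows quickly with the Ambisonics order: a full-sphere signal of order N has (N + 1)^2 channels. A tiny Python helper makes the arithmetic explicit; note that the 8-channel TBE format mentioned above has one channel fewer than a full 2nd order signal. The helper name is hypothetical.

```python
def ambisonic_channels(order: int) -> int:
    """Channel count of a full-sphere (periphonic) Ambisonics signal of the given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


# 1st, 2nd and 3rd order, as discussed in this post.
counts = {n: ambisonic_channels(n) for n in (1, 2, 3)}
print(counts)  # {1: 4, 2: 9, 3: 16}

TBE_CHANNELS = 8  # from the text above
print(ambisonic_channels(2) - TBE_CHANNELS)  # 1
```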

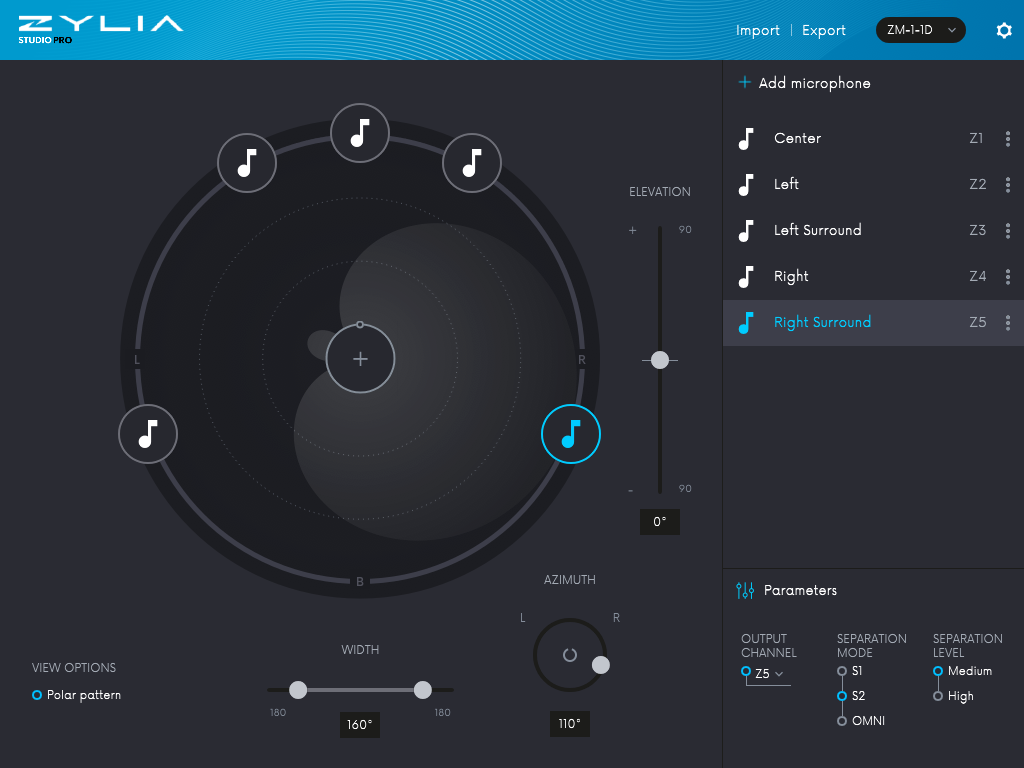

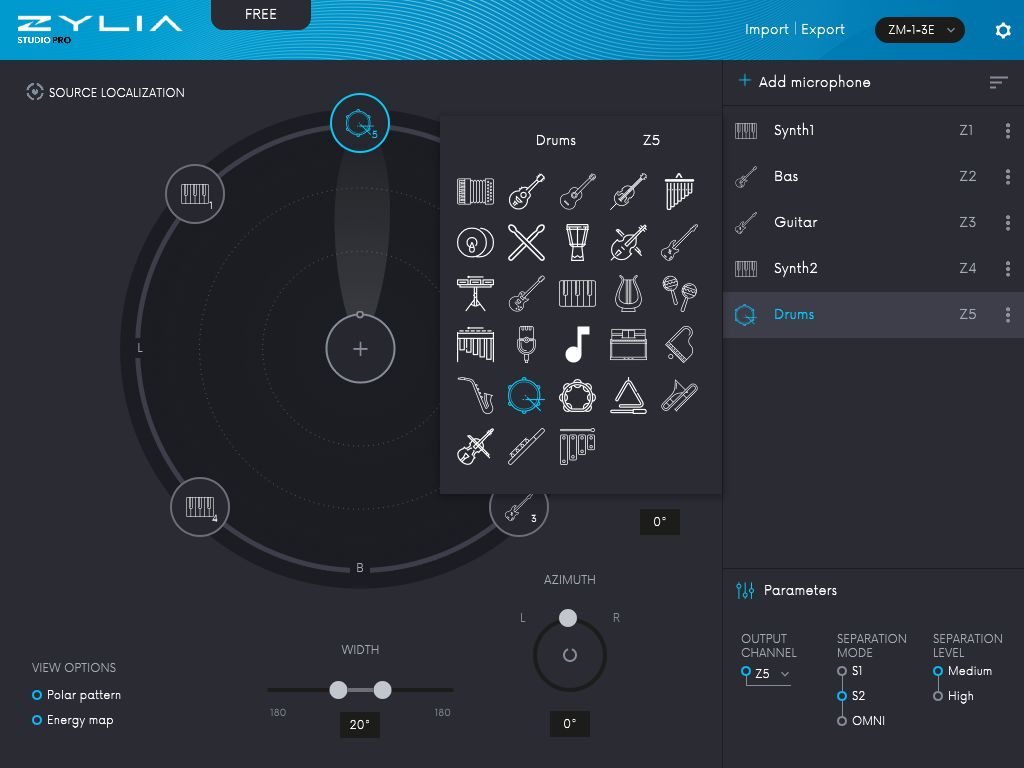

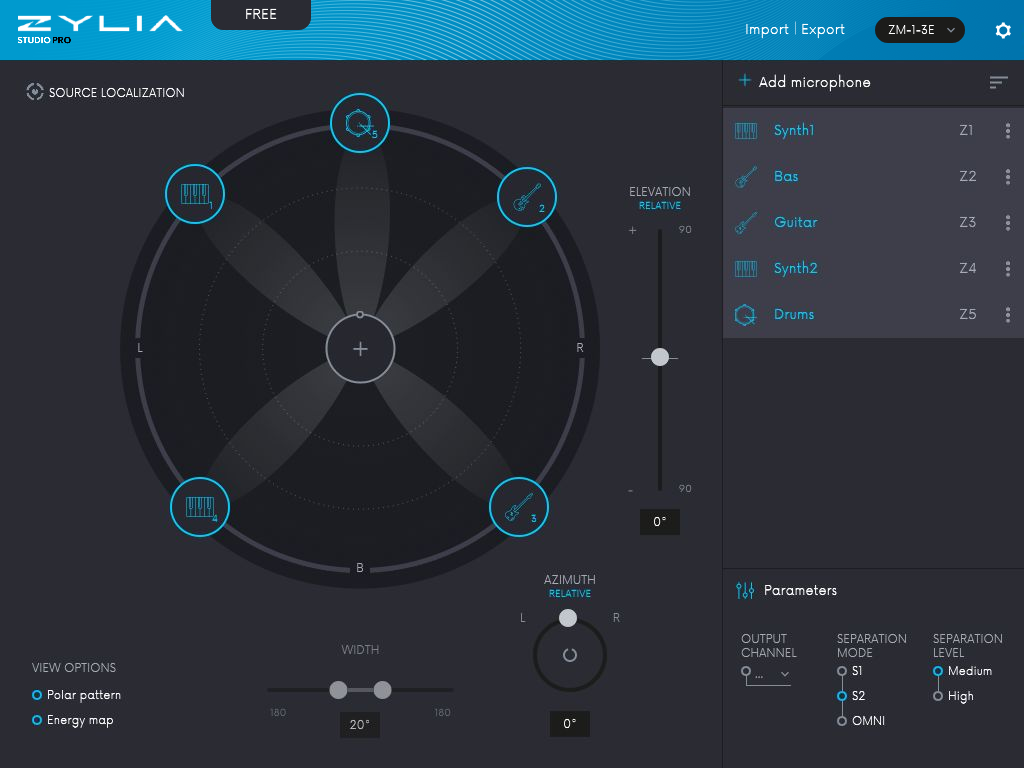

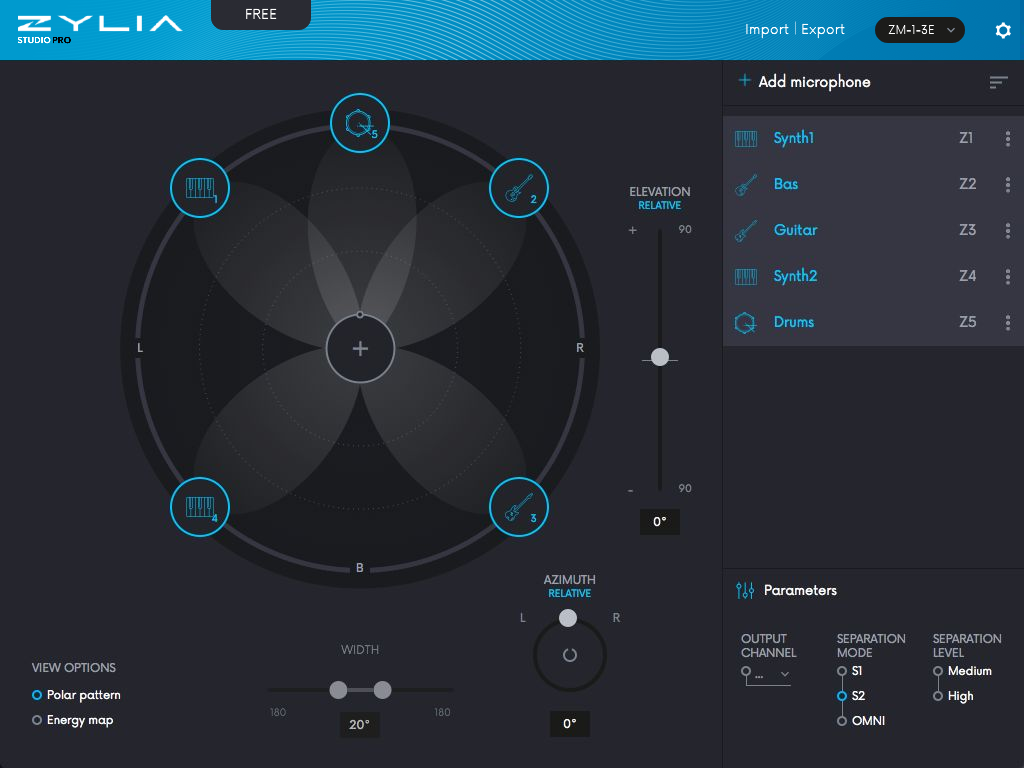

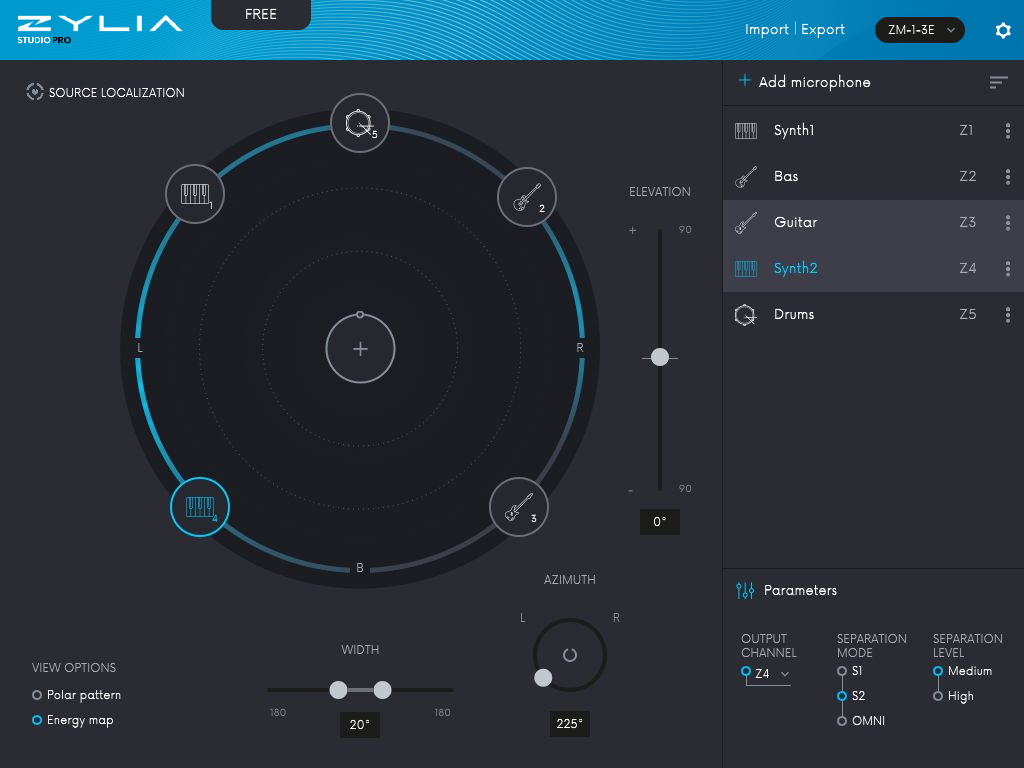

In this tutorial we introduce another approach to using the ZYLIA ZM-1 to create a 3D sound recording, one that gives much more flexibility in manipulating sound sources. It allows us not only to adjust the position of instruments in the recorded 3D space around the ZYLIA microphone, but also to control their gain or to apply additional effects (EQ, compression, etc.). In this way, we are able to create a fancy spatial mix using only one microphone instead of several spot mics!
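Once each instrument is available as its own signal (for example from a virtual microphone in ZYLIA Studio PRO), placing it in the 3D scene is a matter of Ambisonics encoding: the signal is weighted by direction-dependent gains and added to the mix. The FB360 Spatialiser used in this tutorial outputs its own TBE format; the Python sketch below instead uses plain first-order AmbiX (ACN order, SN3D normalisation) to illustrate the principle. The function names and example positions are hypothetical.

```python
import math


def encode_foa_ambix(sample: float, azimuth_deg: float, elevation_deg: float,
                     gain: float = 1.0):
    """Encode one mono sample into first-order AmbiX (ACN order, SN3D).

    Azimuth is counter-clockwise from the front (positive = left), elevation
    is upwards from the horizontal plane. Returns (W, Y, Z, X).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    s = sample * gain                      # per-source gain, as in a mixer
    w = s
    y = s * math.sin(az) * math.cos(el)
    z = s * math.sin(el)
    x = s * math.cos(az) * math.cos(el)
    return (w, y, z, x)


def mix(frames):
    """Sum several encoded sources channel by channel (Ambisonics is linear)."""
    return tuple(sum(ch) for ch in zip(*frames))


# Two hypothetical instruments placed at different directions.
bass = encode_foa_ambix(0.5, azimuth_deg=-30.0, elevation_deg=0.0)
guitar = encode_foa_ambix(0.3, azimuth_deg=45.0, elevation_deg=10.0, gain=0.8)
frame = mix([bass, guitar])
```

Because encoding is linear, EQ or compression can be applied to each instrument before encoding without affecting the others.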

Spatial Encoding of Sound Sources – Tutorial

“Spatial Encoding of Sound Sources” - a step-by-step description

- Download and install REAPER software. The evaluation version is fully functional and sufficient for running our demo.

- Download and install Facebook 360 Spatial Workstation software. It is a free bundle of spatial audio VST plug‑ins. In the session we used version 3.1 beta1.

- Download and install ZYLIA Studio Pro (VST3 Mac, Windows). It’s possible to run the demo in trial mode. In the session we used the VST3 plug-in.

- Download the REAPER session prepared by the Zylia team. It is already configured with our audio tracks and all required effects. Unzip it.

- Download the 360 movie. Two versions are available: high quality [3840 x 1920] and medium quality [1920 x 960]. The high-quality version sometimes pauses in FB360 Video Player on slower CPUs.

- Run REAPER and open the session (ZYLIA-Ambisonics-sources-enc.rpp).

After opening the session, you will see several tracks:

1. Very important! Please ensure that REAPER is working at a sample rate of 48 kHz.

2. ZS_pro track – contains the 19-channel WAVE file recorded with the ZYLIA ZM-1. Click the FX button on the ZS_pro track. If everything is correct, you will see the ZYLIA Studio PRO VST plug-in. By default, there are 5 virtual microphones, each already assigned to one of the instruments: bass, drums, guitar, synth and pad. By clicking a specific virtual microphone, you can adjust its azimuth, elevation, width, and separation mode. Master send in the track's routing panel should be unchecked.

a) Click on FX and choose FB360 Spatialiser.

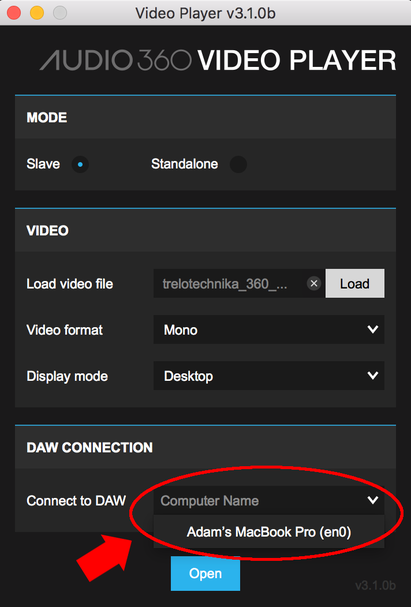

b) Click the Load button on the video grid. Choose Slave mode and load the provided video clip. A message box saying “H264 is not a recommended codec” will appear; click X.

c) Set the video format to Mono and the display mode to Desktop. In Connect to DAW, you should be able to choose your computer’s name. If not, try restarting REAPER and repeating the steps. You are then ready to click the Open button, and the video box will appear.

5. Control track – receives the multichannel signal from the Spatialiser. Control provides the connection with the Video Player, rotates the audio scene and applies binauralization.

a) Click on FX and choose FB360 Control.

b) Ensure that Listener Roll, Listener Pitch and Listener Yaw are properly received from the video; the controls should be darkened. Open the video box and try to rotate the image: the Pitch and Yaw sliders should follow the rotation.

c) JS: Master Limiter boosts the volume and protects against clipping and distortion.

d) Master send in the track's routing panel should be checked.

7. It is good practice to play the video from the beginning of the file to keep audio and video synchronized. In some cases, it is necessary to close the VideoClient and VideoPlayer and load the 360 video again to restore synchronization.

8. Now you can rotate the video along the pitch and yaw axes. Your demo is ready to run.
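Steps 1 and 2 assume that the ZS_pro track holds a 19-channel recording at 48 kHz. If the session misbehaves, it can help to confirm this outside REAPER. The following Python sketch reads the channel count and sample rate straight from the WAVE header using only the standard library. The function names are hypothetical, the sketch assumes the `fmt ` chunk lies within the first 1 MiB of the file, and it does not handle Sony Wave64 (W64) files.

```python
import struct


def parse_wav_format(header: bytes):
    """Return (channels, sample_rate) from the 'fmt ' chunk of a WAVE header.

    Accepts RIFF and RF64 files; both use the same chunk layout after the
    12-byte preamble, and the fields read here are shared by plain PCM and
    WAVE_FORMAT_EXTENSIBLE headers.
    """
    magic, _size, form = struct.unpack_from("<4sI4s", header, 0)
    if magic not in (b"RIFF", b"RF64") or form != b"WAVE":
        raise ValueError("not a RIFF/RF64 WAVE file")
    offset = 12
    while offset + 8 <= len(header):
        chunk_id, chunk_size = struct.unpack_from("<4sI", header, offset)
        if chunk_id == b"fmt ":
            _format_tag, channels, sample_rate = struct.unpack_from(
                "<HHI", header, offset + 8)
            return channels, sample_rate
        # Skip this chunk's body; chunks are padded to an even length.
        offset += 8 + chunk_size + (chunk_size & 1)
    raise ValueError("'fmt ' chunk not found in the bytes provided")


def check_session_audio(path: str, channels: int = 19, sample_rate: int = 48_000):
    """List mismatches against a 19-channel, 48 kHz ZM-1 recording."""
    with open(path, "rb") as f:
        found_channels, found_rate = parse_wav_format(f.read(1 << 20))
    problems = []
    if found_channels != channels:
        problems.append(f"expected {channels} channels, found {found_channels}")
    if found_rate != sample_rate:
        problems.append(f"expected {sample_rate} Hz, found {found_rate} Hz")
    return problems
```

An empty list from `check_session_audio(...)` means the file matches what the session expects; the REAPER project sample rate still has to be set separately, as described in step 1.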

Some time ago we had the chance to record a big performance at the Grand Theatre in Poznan: a Christmas concert by the Choir and Chamber Orchestra of the Grand Theatre, conducted by Mariusz Otto, with a program of Christmas carols. We decided to make a 360 movie with 3D sound recorded with ZYLIA. With 360 movies, there is always the problem of making the sound scene follow your eyes. We took up the challenge of solving this problem.

Step One - Preparations

Step two - On The Stage

- Microphone placement.

The main ZM-1 microphone was positioned approximately 20 cm below the camera.

Backup ZM-1s were positioned to the right and to the left of the main ZM-1, at a distance of 4-5 meters. There was also one mic right in front of the choir.

- The final setup


Step Three – Recording our perfect 360 movie

Step Four - Video And Audio Post-processing

*At this moment YouTube 360 supports only 1st order Ambisonics audio.
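If a mix exists at a higher order, one straightforward way to produce a first-order file is to drop the higher-order channels. This works directly only when the mix is in AmbiX (ACN ordering, SN3D normalisation), which is an assumption here: in that format the first four channels already form a valid first-order signal. Other formats (FuMa ordering, N3D normalisation, or Facebook's TBE) need re-ordering, re-scaling or a dedicated converter. A minimal Python sketch operating on one sample frame, with a hypothetical function name:

```python
import math


def truncate_to_first_order(frame):
    """Keep only the order-0 and order-1 channels of one AmbiX sample frame.

    Assumes ACN channel ordering with SN3D normalisation, where the first four
    channels (W, Y, Z, X) are already a valid first-order AmbiX signal.
    """
    n = len(frame)
    order = math.isqrt(n) - 1
    if order < 1 or (order + 1) ** 2 != n:
        raise ValueError(f"{n} channels is not a full-sphere AmbiX signal of order >= 1")
    return list(frame[:4])


third_order_frame = [0.1 * i for i in range(16)]  # dummy 16-channel frame
first_order_frame = truncate_to_first_order(third_order_frame)
```

Truncation discards the spatial detail carried by the higher orders, so the first-order result is blurrier than the original mix.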

360 movie with 3D sound - The final effect

Video will be available soon.

