Introducing Łukasz Szałankiewicz: A Fusion of Art and Technology in the Immersive Arts Landscape

8/14/2023

In this exclusive interview, we have the privilege of introducing Łukasz Szałankiewicz, a visionary artist and the newest Brand Ambassador for Zylia. Łukasz's artistic journey began in the early 1990s in the vibrant world of the Demoscene, where his fascination with pushing hardware to its limits laid the foundation for his boundary-breaking approach to art. Over the years, he has blended sound and visuals to craft immersive experiences that challenge conventional artistic norms. Now, as a Zylia Brand Ambassador, Łukasz is helping to shape the audio landscape, bridging the gap between art and technology. With a rich background in the immersive arts and the game industry, he brings expertise and insight into why 3D audio matters to younger students. In our conversation, Łukasz discusses the exciting prospects for the future of 3D audio recording and how Zylia's solutions are positioned to redefine audio production.

Zylia: What is your background?

Łukasz Szałankiewicz: My journey as an artist began in the early 1990s, when I immersed myself in the fascinating world of the Demoscene, a dynamic computer community focused on pushing hardware beyond its limits. Those formative years were truly transformative, and the multimedia nature of the Demoscene had a profound impact on my artistic vision. It laid the foundation for my solo work, where I love to combine sound with visuals and weave them into a conceptual framework to create meaningful, immersive experiences. As my art practice has grown, I have discovered a passion for music, audiovisual performances and interactive installations. These allow me to combine art and technology in fascinating ways and encourage audiences to engage more deeply.
The fusion of creativity and innovation has become a defining aspect of my work, and I continue to push the boundaries of artistic expression.

Zylia: How close are you to the immersive arts and game industry?

Łukasz: For many years I have taught the history of video games and audio production at the private university "Collegium da Vinci" in Poznan. It's a rewarding journey, sharing industry insights with aspiring interactive media enthusiasts. Exploring how games evolve and the nuances of audio design is fun and satisfying. The blend of theory and real-world practice helps students understand the complexities of this dynamic industry. It is exciting to see the younger generation of game professionals becoming more passionate and creative.

Zylia: How important is 3D audio to the young generation of students?

Łukasz: Well, when it comes to the importance of 3D audio for younger students, it's a bit mixed. It depends on their specific interests, resources, and field of study. Some students love the idea of 3D sound as an immersive experience. They understand that it can create a whole new level of impact, whether in gaming, virtual reality, filmmaking, or even music production. On the other hand, not every student has the same access to the technology needed for 3D sound. It can be more resource-intensive, requiring specialized tools and software. So, while some students are up to date with the technology, others have not yet had the opportunity to fully explore its potential. Interestingly, today's younger generation is very familiar with technology in general. They follow advances in audio technology and often understand the difference between traditional stereo sound and 3D sound. They see how 3D sound adds depth and realism, making you feel like you are right in the middle of the action. In conclusion, the importance of 3D sound for students varies.
But one thing is for sure: as they venture into this fascinating realm of audio, their passion and their demonstrations show what is possible.

Zylia: What do you think is the future of 3D audio recording? What technological changes may occur, or are already taking place, in the industry?

Łukasz: The future of 3D audio recording holds exciting possibilities. Immersive experiences are becoming increasingly important, driven by virtual reality and augmented reality. Audio technology is advancing and producing ever more life-like sound. Advances in AI and machine learning will automate tasks such as audio normalization and transform sound processing. Expect improved microphone technology and software algorithms for more accurate sound capture. Overall, the future is about blending art and technology to create engaging, immersive audio experiences.

Zylia: Where do you see Zylia's solutions in this global change?

Łukasz: Zylia has established itself worldwide as a leading provider of 3D audio solutions, offering advanced hardware and software, especially microphones. Amid constant international change, Zylia's services remain available in an organized way. With the growing demand for audio reviews, their expertise in 3D audio technology makes them a key player. With strong performance in areas such as digital reality and personalized audio, Zylia's solutions are ready to shape the future of audio recording and production. Their revolutionary technology can elevate the quality of audio recordings and set new standards for sound, with a significant impact on entertainment, art and communications. As a recognized leader in 3D audio, Zylia aims to innovate and change the global audio landscape.

Zylia: What are your next career plans? What would you like to do this year?

Łukasz: The coming months will be quite dynamic, with a lot going on. I'm currently working on a lecture about the history of communication in the Polish demoscene, while also doing the sound design for a project that showcases Poznan on the Fortnite platform. Autumn is a season of presentations and educational work, and in December I will most likely travel to Sydney for the SIGGRAPH Asia conference, where a demoscene event is taking place. And this is just the beginning.
You can follow Łukasz on TikTok: https://www.tiktok.com/@zeniallo or LinkedIn: https://www.linkedin.com/in/zeniallo


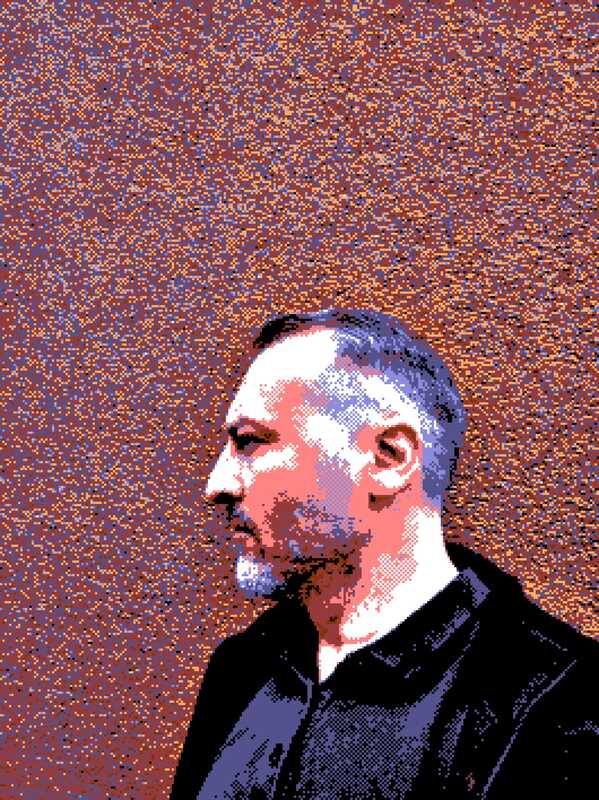

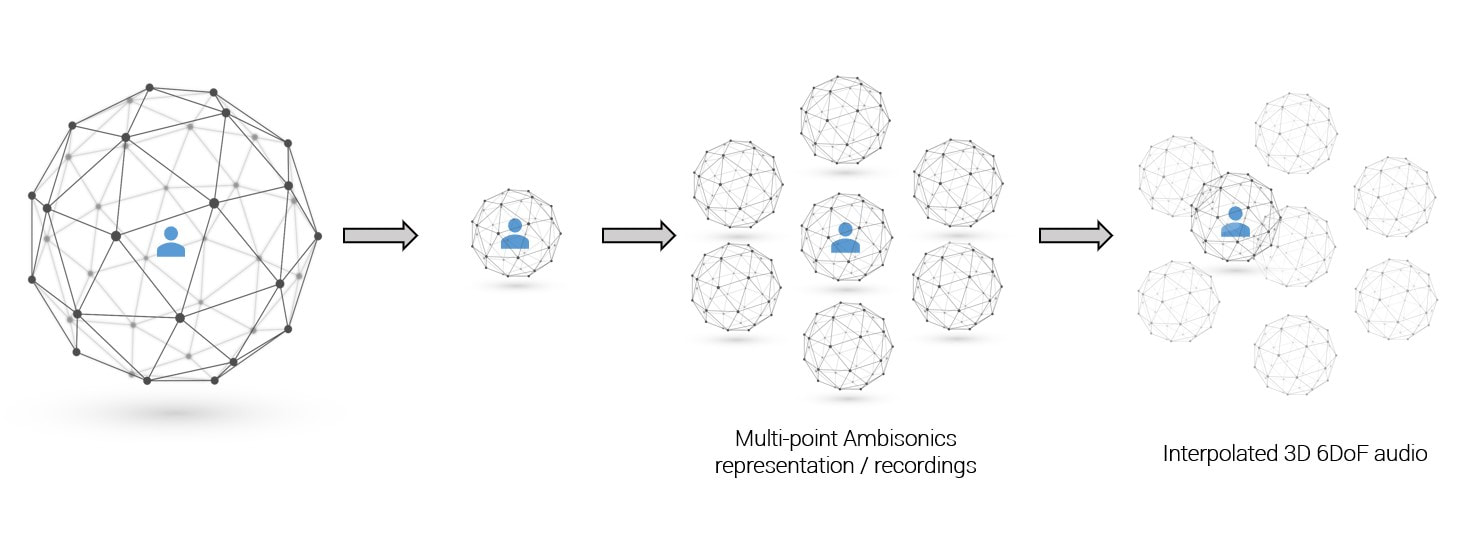

Volumetric audio recording is a technique that captures sound in a way that mimics how we perceive sound in the real world. It captures not only the level (loudness) of sounds but also the location and movement of sound sources in a room or environment. This allows for a more immersive listening experience, as listeners perceive the sounds as if they were in the room where the recording was made. There are several methods for volumetric audio recording. One of them is to use a grid of multiple Ambisonics microphone arrays to capture sound from different angles and positions. Combined with a six-degrees-of-freedom (6DoF) higher-order Ambisonics (HOA) rendering approach, such recordings let the listener move freely around the recorded space, experiencing the sound as if they were actually present in the environment.
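As a rough illustration of how a grid of arrays can serve a moving listener, the sketch below blends the Ambisonics signals of several arrays using inverse-distance weights computed from the listener's position. This deliberately naive interpolation scheme was chosen for clarity. It is not the rendering method Zylia uses, and practical 6DoF renderers are considerably more sophisticated. The function names, grid positions and placeholder signals are hypothetical, and NumPy is assumed.

```python
import numpy as np

def idw_weights(listener_pos, array_positions, power=2.0, eps=1e-9):
    """Inverse-distance weights (summing to 1) for each microphone array."""
    d = np.linalg.norm(array_positions - listener_pos, axis=1)
    if np.any(d < eps):
        # Listener stands on an array position: use that array alone.
        w = (d < eps).astype(float)
    else:
        w = 1.0 / d**power
    return w / w.sum()

def interpolate_hoa(listener_pos, array_positions, array_signals, power=2.0):
    """Blend HOA signals shaped (n_arrays, n_channels, n_samples) for one listener position."""
    w = idw_weights(np.asarray(listener_pos, dtype=float),
                    np.asarray(array_positions, dtype=float), power)
    # Weighted sum over the array axis -> (n_channels, n_samples)
    return np.tensordot(w, np.asarray(array_signals, dtype=float), axes=(0, 0))

# Example: a 2 x 2 grid of 3rd-order (16-channel) arrays, listener in the middle.
grid = np.array([[0, 0, 0], [2, 0, 0], [0, 2, 0], [2, 2, 0]], dtype=float)
signals = np.zeros((4, 16, 480))  # placeholder B-format signals
print(idw_weights(np.array([1.0, 1.0, 0.0]), grid))    # four equal weights
print(interpolate_hoa([1, 1, 0], grid, signals).shape)  # (16, 480)
```

Summing B-format signals captured at different positions can cause phasing artifacts. This is one reason dedicated 6DoF renderers go well beyond this simple weighting.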

The six degrees of freedom are the three translational movements (along x, y, z) and the three rotational movements (pitch, yaw, roll) that can be made in 3D space. In the context of volumetric audio recording, these movements correspond to the listener's position and orientation in the space, as well as to the position and movement of sound sources. The resulting audio can be played back through surround sound systems or virtual reality technology to create a sense of immersion and realism. Volumetric audio recording and 6DoF rendering are used in a variety of applications, such as virtual reality, gaming, film, television and interactive audio installations, where the listener's movement and orientation play an important role in the experience. Because listeners can move around the space and hear the sound change accordingly, just as they would in the real world, the listening experience becomes more immersive and realistic.
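To show how a listener's position and orientation affect what they hear, the sketch below computes a source's direction and distance for a listener with a full 6DoF pose. It assumes axes with x forward, y to the left and z up, and angles in radians that follow the right-hand rule about each axis. Under this convention, positive yaw turns the listener to the left. The rotation order is also an assumption: yaw, then pitch, then roll. Other tools may use different conventions, and the function names are hypothetical.

```python
import math

def rotation_matrix(yaw, pitch, roll):
    """World-from-listener rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll).

    Axes: x forward, y left, z up. Right-hand rule about each axis, so
    positive yaw turns left and (with y pointing left) positive pitch tilts down.
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def source_direction(listener_pos, yaw, pitch, roll, source_pos):
    """Azimuth and elevation (degrees) and distance of a source relative to the listener."""
    R = rotation_matrix(yaw, pitch, roll)
    d = [s - p for s, p in zip(source_pos, listener_pos)]  # translational part
    # Express the offset in the listener's frame: v = R^T d (rotational part)
    v = [sum(R[r][c] * d[r] for r in range(3)) for c in range(3)]
    dist = math.sqrt(sum(x * x for x in v))
    azimuth = math.degrees(math.atan2(v[1], v[0]))  # positive = to the left
    elevation = math.degrees(math.atan2(v[2], math.hypot(v[0], v[1])))
    return azimuth, elevation, dist

# Example: a source 1 m ahead; then the listener turns 90 degrees to the left.
print(source_direction((0, 0, 0), 0.0, 0.0, 0.0, (1, 0, 0)))          # straight ahead
print(source_direction((0, 0, 0), math.pi / 2, 0.0, 0.0, (1, 0, 0)))  # now on the right
```

A 6DoF renderer has to update this relationship continuously as the listener walks and turns. A 3DoF (head-rotation-only) renderer handles just the rotational part.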

Exploring the Magic of Surround Sound with Zylia Microphone in "Hunting for Witches" Podcast

2/9/2023

Podcasts are usually associated with two people sitting in a soundproofed room, talking into a microphone. But what if we want to add an extra layer of sound that can tell its own captivating story? That's when a regular podcast becomes a mesmerizing radio play that ignites the imagination and emotions of its listeners. One such podcast is "Hunting for Witches" (Polish title "Polowanie na Wiedźmy") by Michał Matus, available on Audioteka. It's a documentary audio series that takes us on a journey to explore the magic of our time. We had the privilege of speaking with the chief reporter, Michał Matus, and Katia Sochaczewska, the audio producer, about the creation of "Hunting for Witches" and the role that surround sound recording played in the production. The ZYLIA ZM-1 microphone was a key tool in capturing the unique sounds that make this podcast truly special. For the past year, Michał has been traveling throughout Europe, visiting places where magic is practiced, meeting people who have dedicated their lives to studying various forms of magic, and documenting the secret rituals performed to change the course of things. The ZYLIA microphone was with him every step of the way, capturing the essence of these magical places.

“You can hear the sounds of nature during a meeting with the Danish witch Vølve in her garden.” “I also used Zylia when I knew a scene was going to be spectacular in terms of sound, with many different sound sources around,” Michał recalls. “So, with Zylia, I recorded the summer solstice celebration at the stone circle of Stonehenge, where thousands of followers of pagan beliefs, druids and ordinary party-goers were drumming, dancing and singing. It was similar when visiting Roma witches who allowed their ritual to be recorded. All these places and events have a spatial sound that was worth preserving so that we could later recall them in the sound story.”

Want to learn more about 3D audio podcasts?

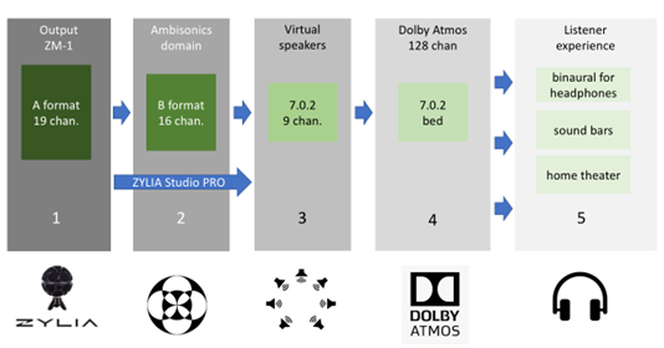

Dolby Atmos and Atmos Music are among the hottest topics in immersive audio these days. With this format, consumers can enjoy immersive audio over various playback solutions, such as multichannel home theatre setups, sound bars, or binaural renderings over headphones, some of which even offer integrated head tracking. Another audio format that plays an important role in creating immersive experiences in VR, AR, and XR is higher-order Ambisonics (HOA). The ZYLIA ZM-1 offers a convenient, practical and affordable way to record sound in HOA with high spatial resolution. The question now is: how can HOA be combined with Dolby Atmos?


Higher-order Ambisonics (HOA) overview

It is important to know that the raw output of an Ambisonics microphone array, the A-format, needs to be converted into what is known as the B-format before it can be used in an Ambisonics production workflow. This is sometimes an obstacle for newcomers. Unlike in a channel-based approach, the audio channels of the B-format are not directly associated with spatial directions or positions, such as the positions of the speakers in the playback system. However, this more abstract representation of the sound scene makes sound field operations such as rotations fairly straightforward.
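Zylia's own converter handles the ZM-1's 19-capsule A-to-B conversion, and that process is not reproduced here. For the simpler case of a classic first-order tetrahedral array, the conversion is, to a first approximation, a fixed sum-and-difference matrix. The sketch below makes several assumptions. It ignores the frequency-dependent correction filters that practical converters apply. It assumes the capsule order FLU, FRD, BLD, BRU (front-left-up, front-right-down, back-left-down, back-right-up). It outputs unscaled W, X, Y, Z signals, because the gain normalization differs between tools.

```python
import numpy as np

# Rows: W, X, Y, Z; columns: capsules FLU, FRD, BLD, BRU (assumed order).
A_TO_B = np.array([
    [1,  1,  1,  1],   # W: omnidirectional sum of all capsules
    [1,  1, -1, -1],   # X: front minus back
    [1, -1,  1, -1],   # Y: left minus right
    [1, -1, -1,  1],   # Z: up minus down
], dtype=float)

def a_to_b(a_format):
    """Convert (4, n_samples) tetrahedral capsule signals to (4, n_samples) W, X, Y, Z."""
    return A_TO_B @ np.asarray(a_format, dtype=float)

# Example: a signal arriving equally at all capsules ends up only in W.
print(a_to_b(np.ones((4, 3))))
```

The resulting channels describe directional patterns (omni and three figure-of-eights) rather than individual capsules. This is why B-format channels no longer map to specific directions or speakers.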

Although it is multichannel audio at its core, the B-format is only an intermediate step and must be decoded before you can listen to a meaningful output. Decoding can yield speaker feeds ranging from classic stereo to multichannel surround setups for a conventional channel-based output, or it can produce a binaural experience over headphones. This flexibility is one of the reasons why one would want to deliver immersive audio recorded in Ambisonics over playback solutions that support Dolby Atmos. For delivering a mobile, immersive listening experience with comparatively small hardware requirements, headphones with integrated head tracking are particularly attractive.

For first-order Ambisonics (FOA) recordings, the A-format and the B-format both have 4 channels. The spatial resolution is limited, and sound sources usually appear as if they were all at the same remote distance. For full-sphere HOA solutions, the A-format typically has more channels than the B-format. For example, the ZYLIA ZM-1 produces 19 channels of raw A-format output and 16 channels after conversion to 3rd-order Ambisonics. Keep these channel counts in mind when planning your workflow around the capabilities of your DAW.
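The B-format channel counts quoted above follow from the standard full-sphere relation: an order-N Ambisonics signal has (N+1)^2 channels. First order therefore gives 4 channels and third order gives 16. The small helper below simply evaluates that formula. The ZM-1's 19-channel A-format count is a property of the hardware and does not follow from it.

```python
def hoa_channels(order: int) -> int:
    """Number of full-sphere Ambisonics (B-format) channels for a given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2

for n in range(4):
    print(f"order {n}: {hoa_channels(n)} channel(s)")
```

Because the count grows quadratically, each extra order adds noticeably more channels. This is why per-track channel limits in a DAW matter for HOA.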

Dolby Atmos overview

To represent rich 360° audio content, Dolby Atmos can internally handle up to 128 channels of audio. The Audio Definition Model (ADM) ensures a proper representation of all the metadata related to these channels. Dolby Atmos files are distributed in the Broadcast Wave Format (BWF) [4]. From a mixing point of view, and also for combining Dolby Atmos with Ambisonics, it is important to be aware of its two main concepts: beds and audio objects.

You can think of beds in two ways:

- Beds are a channel-based representation of audio content that follows surround sound speaker layouts, with up to 4 additional height channels.

- In terms of content, beds can carry, for instance, music that you mix for a specific surround setup. More generally, you can think of them as soundscapes that provide a background or atmosphere for other audio elements. This background sound is spatially resolved.

Think, for instance, of a nature soundscape: trees with rustling leaves and a creek with running water, all sound sources with more or less distinct positions. Or take an urban soundscape with traffic and various moving cars: these sources change their positions, but you would want to use the scene as is rather than adjust the sources individually in your mix. These are all examples of immersive audio content that you would send to beds.

Dolby Atmos also allows sounds to be treated as objects with positions in three dimensions. In a mix, you can control an object's position in x, y, and z independently of the positions of the designated speaker channels. See [5] for more on beds and objects.
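To make the distinction between beds and objects concrete, here is a hypothetical data model. It is not Dolby's ADM schema or any Dolby API. In this model, a bed is a block of channels, an object is a mono source with an x, y, z position, and the total channel count is checked against the 128-channel figure mentioned above. The normalized coordinate range of [-1, 1] is an illustrative assumption.

```python
from dataclasses import dataclass, field

MAX_CHANNELS = 128  # internal Dolby Atmos channel limit quoted in the text

@dataclass
class Bed:
    """Channel-based layer, e.g. a decoded Ambisonics ambience."""
    name: str
    channels: int  # e.g. 9 for a 7.0.2 bed

@dataclass
class AudioObject:
    """Mono source whose position is independent of the speaker channels."""
    name: str
    x: float  # normalized position, assumed range [-1, 1]
    y: float
    z: float

    def __post_init__(self):
        for value in (self.x, self.y, self.z):
            if not -1.0 <= value <= 1.0:
                raise ValueError(f"{self.name}: coordinates must lie in [-1, 1]")

@dataclass
class AtmosSession:
    beds: list = field(default_factory=list)
    objects: list = field(default_factory=list)

    def channel_count(self) -> int:
        # In this simplified model, each object occupies one channel.
        return sum(bed.channels for bed in self.beds) + len(self.objects)

    def fits(self) -> bool:
        return self.channel_count() <= MAX_CHANNELS

# Example: one decoded HOA ambience bed plus two panned objects.
session = AtmosSession(
    beds=[Bed("forest ambience", 9)],
    objects=[AudioObject("voice", 0.0, 1.0, 0.0), AudioObject("bird", -0.5, 0.3, 0.8)],
)
print(session.channel_count(), session.fits())
```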

HOA recordings in Dolby Atmos

While beds are channel-based in their conception, they may be rendered differently depending on the speaker count and layout of your system. Think of beds as your main mix bus, fed by inputs in surround configurations (2.0, 3.0, 5.0, 5.1, 7.0, 7.1, 7.0.2, or 7.1.2). To take advantage of the high spatial resolution of 3rd-order recordings made with the ZYLIA ZM-1, we will pick the 7.0.2 configuration, with 7 horizontal and two elevated frontal speakers, and decode the Ambisonic B-format to a virtual 7.0.2 speaker configuration. This results in a proper input for a Dolby Atmos bed.
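Each surround label above encodes its own channel count. The first number counts the main (ear-level) channels, the second counts the LFE channel, and the optional third counts the height channels. The helper below sums these groups, which shows how the 7.0.2 choice turns 16 third-order B-format channels into a 9-channel bed. It relies only on this naming convention and says nothing about speaker angles. The function name is hypothetical.

```python
CONFIGS = ["2.0", "3.0", "5.0", "5.1", "7.0", "7.1", "7.0.2", "7.1.2"]

def layout_channels(layout: str) -> dict:
    """Split a surround label such as '7.1.2' into its channel groups."""
    parts = [int(p) for p in layout.split(".")]
    if len(parts) not in (2, 3):
        raise ValueError(f"unexpected layout label: {layout!r}")
    main, lfe = parts[0], parts[1]
    height = parts[2] if len(parts) == 3 else 0
    return {"main": main, "lfe": lfe, "height": height, "total": main + lfe + height}

for cfg in CONFIGS:
    info = layout_channels(cfg)
    print(f"{cfg:>6}: {info['total']} channels ({info['height']} height)")
```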

Starting with a raw recording made with the ZYLIA ZM-1, we get the following signal chain:

Step one is the raw output of the microphone array, the A-format. For the ZYLIA ZM-1, this is an audio file with 19 channels. From a post-production and mixing perspective, all that matters here is where you placed the microphone relative to the sound sources. If you want your work environment to include this step of the signal chain, your DAW's tracks need to accommodate 19 channels. This is not strictly necessary, however: you can start with step two.
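Where to start the chain depends on how many channels your DAW can hold per track. The sketch below is a hypothetical planning helper. It lists the stages with the channel counts given in this article: 19 for A-format, 16 for third-order B-format, and 9 for a 7.0.2 bed. It then reports the earliest stage a DAW can host, given its maximum number of channels per track. The example uses the per-track limits quoted later in this article: 64 for Reaper and 16 for Pro Tools.

```python
STAGES = [
    ("A-format (ZM-1 raw)", 19),
    ("B-format, 3rd order", 16),
    ("virtual 7.0.2 bed", 9),
]

def earliest_stage(max_channels_per_track: int):
    """Return the first stage whose channel count fits in one DAW track."""
    for name, channels in STAGES:
        if channels <= max_channels_per_track:
            return name
    return None  # not even the bed fits: premix it as smaller stems

for daw, limit in {"Reaper": 64, "Pro Tools": 16}.items():
    print(f"{daw}: start at {earliest_stage(limit)}")
# Reaper: start at A-format (ZM-1 raw)
# Pro Tools: start at B-format, 3rd order
```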

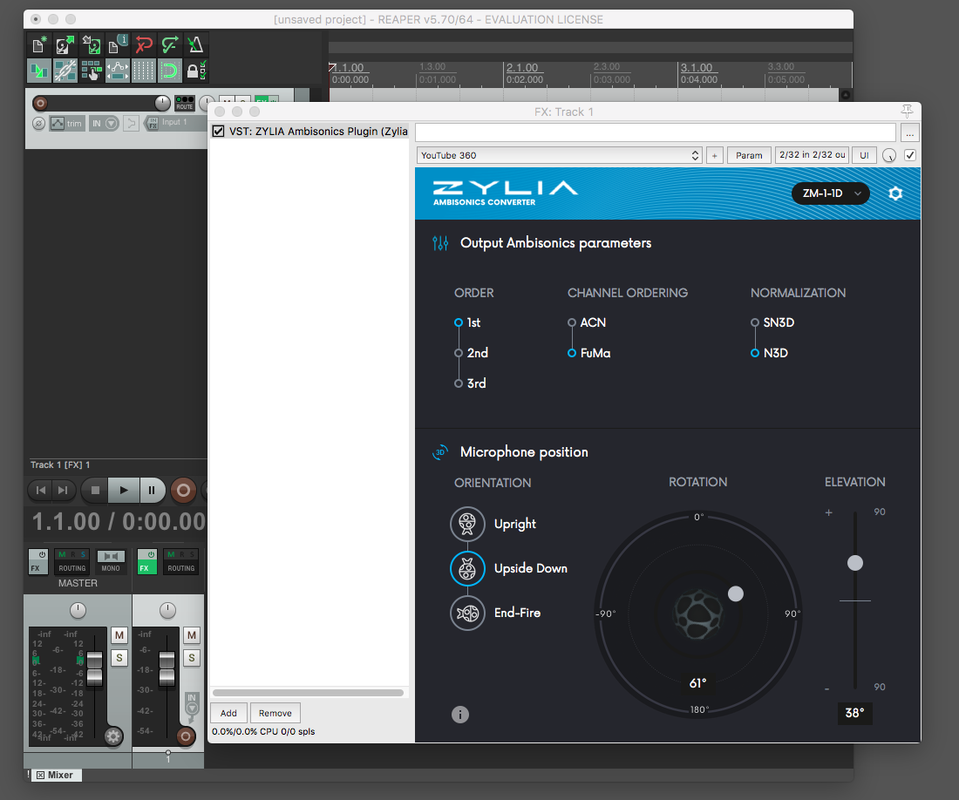

Step two is the 3rd-order Ambisonic B-format, which contains 16 channels. For the conversion from step one to step two, you can use the Ambisonics Converter plugin from Zylia [6]. If your DAW cannot accommodate the 19 channels needed for step one, you can also convert offline with the ZYLIA Ambisonics Converter application, which also offers batch conversion of multiple assets [7]. In many situations, it is advisable to start the signal chain at step two, so that the CPU resources otherwise used by the A-to-B conversion remain available for other effects. From a mixing perspective, the operations you apply here are mostly rotations and global filtering, limiting or compression of the immersive sound scene that you want to send to the Atmos beds. You will choose these operations based on how the immersive bed interacts with the objects that you may add to your scene later. Various well-established free tools are available to manipulate HOA B-format, for instance the IEM [8] and SPARTA [9] plugin suites.
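Rotation is one of the B-format operations mentioned above. At first order, a rotation about the vertical axis reduces to a 2D rotation of the X and Y channels, while W and Z stay unchanged. Higher orders need larger rotation matrices, which tools such as the IEM and SPARTA suites handle for you. The sketch below covers yaw only and assumes ACN channel order (W, Y, Z, X), so adapt it if your B-format uses the traditional W, X, Y, Z order. The function names are hypothetical, and the encoder exists only to test the rotation.

```python
import numpy as np

def rotate_foa_yaw(b_format, angle_rad):
    """Rotate a first-order sound field about the vertical axis.

    b_format: (4, n_samples) array in ACN order (W, Y, Z, X).
    A positive angle moves sources counterclockwise seen from above
    (from the front towards the left).
    """
    w, y, z, x = np.asarray(b_format, dtype=float)
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    x_rot = c * x - s * y
    y_rot = s * x + c * y
    return np.stack([w, y_rot, z, x_rot])

def encode_foa(azimuth_rad, signal):
    """Encode a horizontal plane wave in ACN order (SN3D-style gains) for testing."""
    signal = np.asarray(signal, dtype=float)
    return np.stack([signal, np.sin(azimuth_rad) * signal,
                     np.zeros_like(signal), np.cos(azimuth_rad) * signal])

# Example: rotate a frontal source by 90 degrees so that it ends up on the left.
front = encode_foa(0.0, np.ones(4))
print(np.allclose(rotate_foa_yaw(front, np.pi / 2), encode_foa(np.pi / 2, np.ones(4))))
```

The same operation underlies head tracking. When the listener turns their head by a yaw angle θ, rotating the field by -θ keeps the sources fixed in the room.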

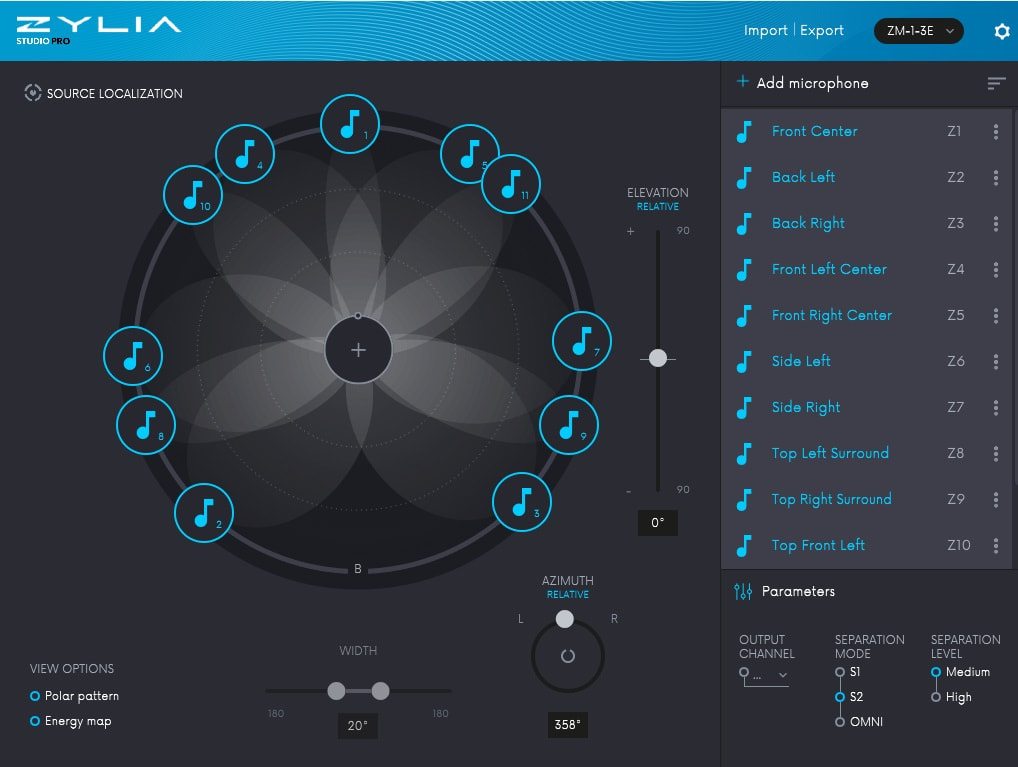

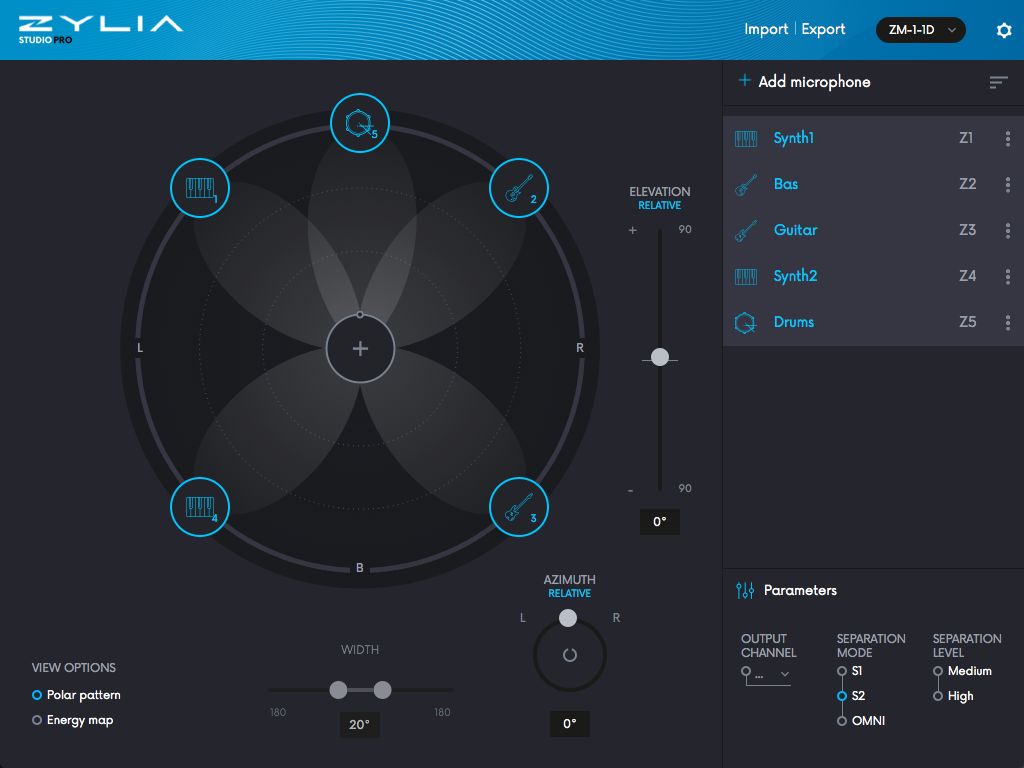

Step three: the Ambisonic B-format then needs to be decoded to a virtual surround speaker configuration. For this conversion, you can use various decoders, again available from plugin suites like IEM and SPARTA. ZYLIA Studio Pro [10] allows you to decode to a virtual surround layout directly from the raw A-format recordings of step one, which means you can bypass step two. For some background audio content, this may be a perfectly suitable choice. Part of the roadmap for ZYLIA Studio Pro is to also offer B-format input, making it a versatile, high-quality decoder. From a mixing perspective, and depending on the content of your bed input, you may want to choose different virtual surround configurations to decode to. Some content might work well on a smaller, more frontal bed, e.g. 3.1, while other content will need to be more enveloping. If your DAW's channel count per track is limited to surround sound setups, you will need to premix these beds as stems.
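The decoders in IEM, SPARTA and ZYLIA Studio Pro use more refined designs whose internals are not reproduced here. As a minimal illustration of what "decoding to a virtual speaker layout" means, the sketch points one virtual first-order cardioid microphone at each loudspeaker direction. It assumes FOA in ACN order with SN3D normalization. It also assumes a 5.0 layout with the common ITU-R BS.775 angles: C at 0°, L/R at ±30°, and Ls/Rs at ±110°. A 7.0.2 layout would only need a longer list of directions, including elevations.

```python
import numpy as np

# Assumed 5.0 layout: (name, azimuth deg, elevation deg); positive azimuth = left.
LAYOUT_5_0 = [("L", 30, 0), ("R", -30, 0), ("C", 0, 0), ("Ls", 110, 0), ("Rs", -110, 0)]

def decode_virtual_cardioids(b_format, layout):
    """Decode FOA (ACN order W, Y, Z, X; SN3D) to one feed per loudspeaker."""
    w, y, z, x = np.asarray(b_format, dtype=float)
    feeds = []
    for _name, az_deg, el_deg in layout:
        az, el = np.radians(az_deg), np.radians(el_deg)
        # Unit vector pointing towards the loudspeaker
        dx, dy, dz = np.cos(az) * np.cos(el), np.sin(az) * np.cos(el), np.sin(el)
        # First-order cardioid: 0.5 * (omni + figure-of-eight aimed at the speaker)
        feeds.append(0.5 * (w + dx * x + dy * y + dz * z))
    return np.stack(feeds)

# Example: a plane wave from straight ahead (W = 1, X = 1, Y = Z = 0).
front = np.array([[1.0], [0.0], [0.0], [1.0]])
for (name, _, _), feed in zip(LAYOUT_5_0, decode_virtual_cardioids(front, LAYOUT_5_0)[:, 0]):
    print(f"{name}: {feed:.3f}")
# L: 0.933, R: 0.933, C: 1.000, Ls: 0.329, Rs: 0.329
```

The strong crosstalk between neighbouring speakers shows why first-order virtual-microphone decoding sounds rather diffuse. It is also why higher orders and better decoder designs are worth the extra channels.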

Step four: the bed then needs to be routed to Dolby Atmos. The details are beyond the scope of this article, and many excellent tutorials describe this process. Here I want to mention that some DAWs have Dolby Atmos renderers built in, so you can learn everything you need in practice within the DAW itself. With other DAWs, you will need to use the external Dolby Bridge [11]. This has a steeper learning curve, but there are also many excellent tutorials that cover it [12]. There are also hardware solutions for Dolby Atmos rendering that interface with your speaker setup, but we will not cover them here. In Dolby Atmos, you will likely also integrate additional sources as objects and control their 3D pan position with the Dolby Atmos Music Panner plug-in in your DAW. From a mixing perspective, the sonic interaction between the bed and the objects will probably make you revisit steps two and three to rebalance, compress or limit your bed and optimize your mix.

You will need to monitor your mix to make sure that the end-user experience is as good as possible. Very few of us have access to a Dolby Atmos studio. If you work in a bedroom studio, you can always listen to your mix over headphones as a binaural rendering, on some recent Macs over their built-in Atmos-capable speakers, and with AirPods even over headphones with built-in head tracking. Which of these options suits you depends on what you are producing for. On the much-debated question of whether you can mix and master over headphones, I found the following article very insightful [13]: it weighs all the pros and cons and points out that the overwhelming majority of end users listen to music over headphones. For an Ambisonic mix, headphones also mean that the listener is always in the sweet spot of the spatial reproduction.

The workflow in selected DAWs

Reaper is among the first choices for higher-order Ambisonics, thanks to its 64 channels per track, so the HOA part of the workflow sketched above has no limitations. However, you will need to familiarize yourself with the Dolby Bridge and the Dolby Atmos Music Panner plug-in.

In regular Pro Tools, you will also use the Dolby Atmos Music Panner plug-in and the Dolby Bridge. Since Pro Tools has a limitation of 16 channels per track, you will need to convert all your Ambisonic assets to B-format before you can start mixing. Upgrading to Pro Tools Studio or Flex [15] adds Dolby Atmos ADM BWF import/export, native immersive panning, I/O set-up integration with the Dolby Atmos Renderer, and a number of other Dolby Atmos workflow features as well as Ambisonics tracks.

In the most recent versions of Logic, Dolby Atmos is completely integrated, so there is no need to use the Dolby Bridge. For monitoring your mix, Logic works well with all the Atmos-ready features of Apple hardware. However, the channel count per track is limited to beds of up to 7.1.4. In theory, this means that you would have to premix all the beds as multichannel stems. While you can import ADM BWF files as ready-to-mix Dolby Atmos projects, it is less obvious how to import a bed input as discussed above. In any case, once you have a premixed bed, the only modifications available to you in the mixing process are multi-mono plugins (e.g., filters), so you can no longer rotate the Ambisonic sound field at this point. To summarize for Logic: while Dolby Atmos is very well integrated, the HOA part of the signal chain is more difficult to realize.

Nuendo also has Dolby Atmos integrated and it also features dedicated Ambisonic tracks up to 3rd order which can be decoded to surround tracks. This means you have a complete environment for the steps of the workflow described above.

While mostly known as a video editing environment, DaVinci Resolve features a native Dolby Atmos renderer that can import and export master files. This allows for a self-contained Dolby Atmos workflow in Resolve without the need for the Dolby Atmos Production or Mastering Suite. In addition, Resolve's tracks can host multichannel audio assets and effects.

Summary

If you liked this article, please let us know in the comments what we should describe in more detail in future articles.

References:

- M. Gerzon, "Periphony: With-Height Sound Reproduction," J. Audio Eng. Soc., vol. 21, no. 1, pp. 2-10 (February 1973).

- J. Daniel, J. Rault, and J. Polack, "Ambisonics Encoding of Other Audio Formats for Multiple Listening Conditions," Paper 4795 (September 1998).

- R. Nicol and M. Emerit, "3D-Sound Reproduction Over an Extensive Listening Area: A Hybrid Method Derived from Holophony and Ambisonic," Paper 16-039 (March 1999).

- J. Daniel, article about HOA from the Ambisonics Symposium 2009: LINK

- F. Zotter and M. Frank, "Ambisonics: A Practical 3D Audio Theory for Recording, Studio Production, Sound Reinforcement, and Virtual Reality," Springer Nature, 2019: LINK

- More information about the Broadcast Wave Format (BWF): LINK

[6] The Zylia Ambisonics Converter plugin: LINK

[7] The Zylia Ambisonics Converter: LINK

[8] The IEM plugin suite: LINK

[9] The SPARTA plugin suite: LINK

[10] Zylia Studio Pro plugin: LINK

[11] A video tutorial for using the Dolby Bridge with Pro Tools: LINK

[12] A video tutorial for using the Dolby Bridge with Reaper: LINK

[13] A blog post by Edgar Rothermich about the limits and possibilities of mixing Dolby Atmos over headphones: LINK

[14] Information about Dolby support for various DAWs: LINK

[15] A comparison of Pro Tools versions and their Dolby and HOA support: LINK

#zylia #dolbyatmos #ambisonics

Since then, various organizations and private companies have been mobilizing their R&D teams to find technologies that could connect virtual reality, social media, and teleconferencing systems. The Metaverse Standards Forum shows the vast potential of what we can develop together.

Zylia started working on tools for making volumetric audio many years before people had even heard of the Metaverse. We wanted to be ready for the moment they would be most needed, and that time has come!

Follow the activities of the Metaverse Standards Forum to stay on top of the technologies that will drive the Metaverse in the next few years.

#metaverse #virtualreality #gamedevelopment #opportunities #zylia #Volumetric #6dofAudio #3Daudio #immersive

QUATRE: an immersive project connecting natural soundscapes and music recorded in multi-point higher-order Ambisonics.

Last year, our creative sound engineer Florian Grond had the privilege of accompanying Christophe Papadimitriou during the production of QUATRE, an immersive audio artwork that connects soundscapes with music and improvisation. The music was recorded with the ZYLIA 6DoF set in the studios of the Orford music centre in Quebec, Canada, and the nature soundscapes were recorded outdoors with a single ZYLIA ZM-1 microphone. The entire composition was mixed and mastered as a binaural album. Christophe kindly gave us an interview and talked about what moves him as an artist and what inspired him to embark on this immersive audio project.

Photo: The three musicians of the QUATRE project recording in multi-point higher-order Ambisonics with the ZYLIA 6DoF system. From left to right: Luzio Altobelli, Christophe Papadimitriou, and Omar-Abou Afach.

Hello Christophe, tell us about yourself and your artistic background.


I came to Quebec from France in 1978 and live in Montreal, where I have worked as a musician since graduating from Concordia University in 1992. There I studied double bass with the great jazz and classical teachers and artists Don Habib and Éric Lagacé. Since then, I have shared the stage with many Montreal artists, mostly in jazz, world music and chanson.

For the past 10 years, I have been lucky enough to play and record my own compositions in theatre, jazz and world music projects.

That is what I like most: I love composing and sharing the fruit of my creativity and imagination with the public.

Photo: Christophe Papadimitriou records a typical Quebec summer soundscape with the ZYLIA ZM-1 microphone.

As a double bassist and musician, who are your favourite audiences?

I am lucky to work a lot and to have the opportunity to perform on stage, about a hundred times a year. This contact with the audience is the most important thing for me, because I get an immediate response, especially from young people, who are very expressive and uncensored. I like it when music provokes strong sensations, and I also love taking risks, especially during improvised moments, when the musicians experience a unique and intense moment together with the audience.

Now, here is a big question: what do music and art mean to you?

For me, music is the most faithful way to express emotions and one's inner life. Transcending words and images, music allows musicians and audiences to connect and to share an experience that is at once collective and individual. When the magic happens and musicians and audience are carried away by this positive energy, we can experience a moment of grace… These moments are rare and therefore very precious.

Photo: Recording the soundscape of a winter night with the ZYLIA ZM-1 mic at the Orford music centre

You created QUATRE. What motivated you to embark on this project?

The Covid pandemic had a significant impact on many aspects of our society and forced us to become aware, if we were not already, of our interconnection at the planetary level. Questioning human activity and our impact on nature are absolutely top priorities. This awareness naturally affects art in general, and for me it became obvious that it would be the subject matter of my next project. That is how the idea was born to create a work based on the cycle of the seasons, with the desire to reconnect with the nature around us. With this work, I wanted to create a dialogue between music and the sounds of nature: a personal, almost meditative moment of respite in which the listener is invited to encounter nature differently and to reconnect with what is precious.

Who are the other musicians of QUATRE?

The essential collaborators on the project are Luzio Altobelli (accordion and marimba), Omar Abou Afach (viola, oud and nai) and Florian Grond (sound design). In addition to their obvious instrumental mastery, Luzio and Omar bring their sensitivity and humanity to every piece of the work. During the making of Quatre, which took more than a year, it was very interesting to see the group dynamic evolve over the seasons: our cultural differences, and also the things that bring us together. All of this can be heard in the recorded music. There are moments of great unity, as well as solos in which each instrumentalist can follow their own vision and feelings of the moment. Quatre is a work of written pieces that always leaves ample room for improvisation. The musicians could thus express themselves in their own language, and the work is rich in diverse sonorities (Middle Eastern, Mediterranean and Québécois).

Photo: To share the project with various communities, QUATRE organizes indoor and outdoor binaural listening sessions. Up to 12 people can connect with headphones for an individual yet collective experience of the work.

QUATRE was produced as a multi-point higher-order Ambisonics recording with the ZYLIA 6DoF set. What is the appeal of immersive audio for you?

During an improvised Covid concert on my terrace, Florian recorded us for the first time, initially with a single ZM-1, and that is when I discovered immersive audio recording technology. A bird was singing along as we played, and I was charmed by the possibility of connecting my music with the sounds of nature and treating nature as a fourth instrument. This technology is what allowed us to truly feel surrounded by the sound world we had created. Quatre is conceived as a journey through Quebec's nature over the seasons. We also added the sound of footsteps to each season (in snow, in sand, in water), which helps listeners imagine themselves walking in the forest or, more generally, in nature. The feedback we received from listeners shows that the immersive effect really works.

You also had it mastered as a binaural piece for headphones. Tell us why.


We are convinced that headphone listening offers the best conditions for the listener to be fully present. The work has many subtleties and a wide dynamic range, from very soft to loud, so headphones are necessary to catch every detail.


Support our work and the artists by downloading Quatre here:

https://christophepapadimitriou.bandcamp.com/album/quatre-sur-le-chemin

Want to learn more about multi-point HOA music and soundscape recordings? Contact our Sales team:

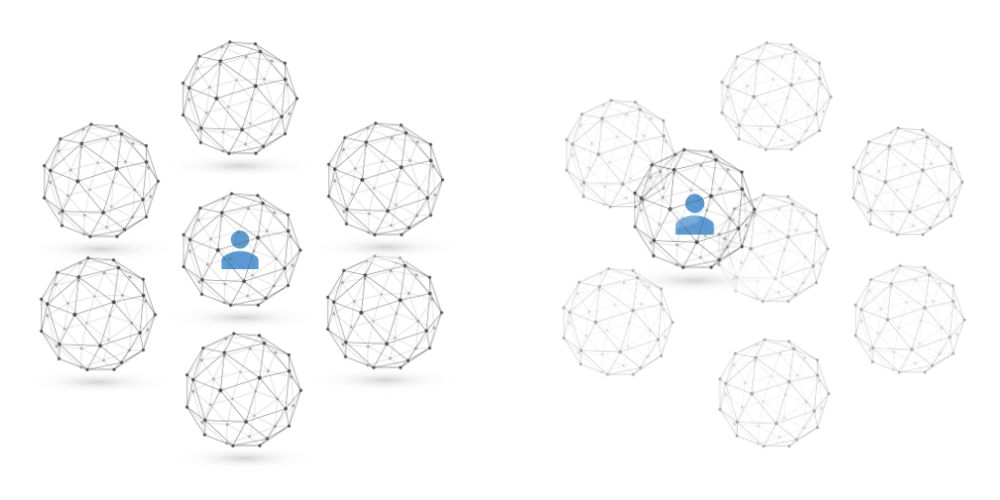

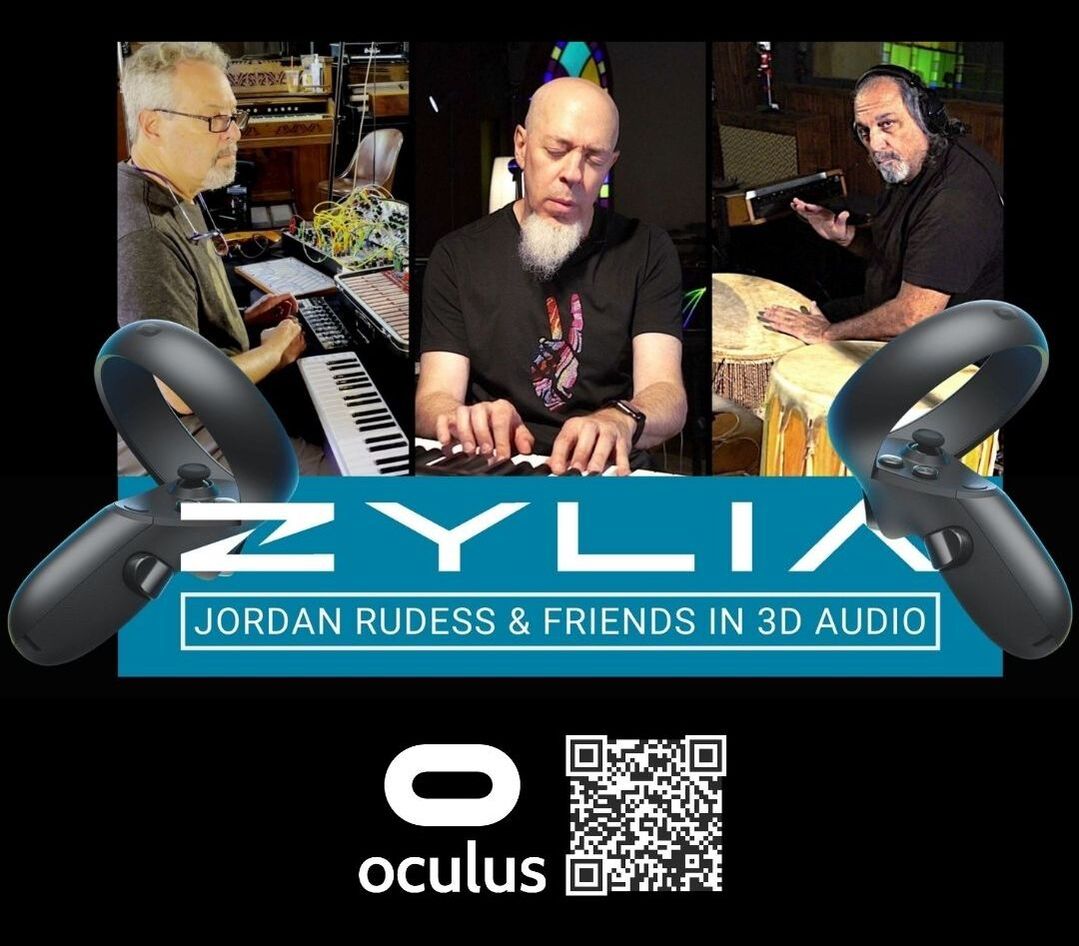

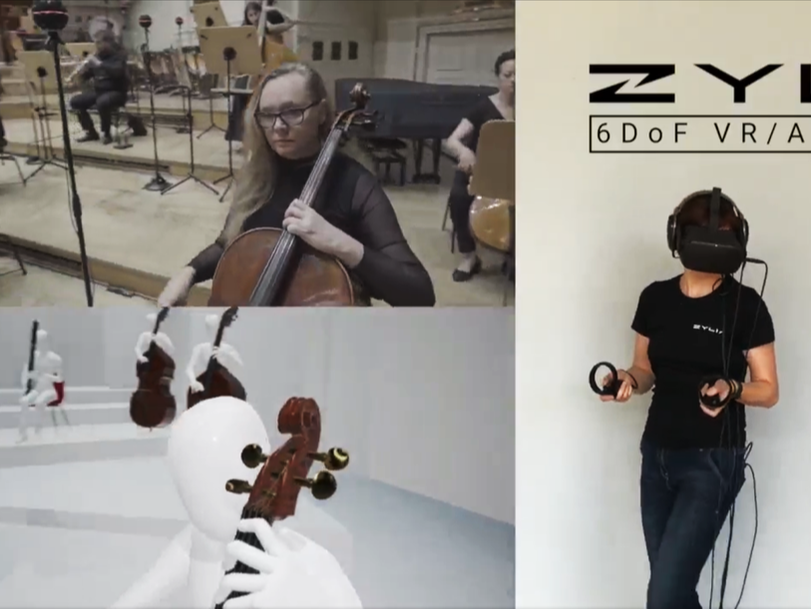

The idea

Zylia’s 3D Audio recording solution gives artists a unique opportunity to connect intimately with the audience. Thanks to the Oculus VR application, viewers at home can experience every element of the performance as vividly as a member of the live audience. Using Oculus VR goggles and headphones, viewers can:

- watch the concert from several different perspectives each with a 360° view,

- listen to the music in 360° spatial audio at every vantage point,

- tilt and turn their head and witness how 3D audio follows their movement perfectly,

- hear the unique acoustics of the venue,

- feel the fantastic atmosphere of the event.
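Head tracking of the kind listed above is commonly achieved by counter-rotating the Ambisonic sound field against the listener's head movement, so that sources stay fixed in the virtual room. The sketch below shows the idea for a yaw (left/right) turn only. It assumes first-order AmbiX signals (ACN channel order W, Y, Z, X; SN3D normalization) with azimuth measured counterclockwise from the front; `rotate_foa_yaw` is a hypothetical helper, and the post does not describe how the Oculus application actually implements this.

```python
import math


def rotate_foa_yaw(w, y, z, x, head_yaw_rad):
    """Counter-rotate one first-order Ambisonics sample (ACN order W, Y, Z, X)
    so the sound field stays fixed in the world when the listener turns
    their head by head_yaw_rad (positive = turning left, counterclockwise)."""
    c, s = math.cos(head_yaw_rad), math.sin(head_yaw_rad)
    # A source at azimuth a now appears at a - yaw relative to the head
    x_rot = x * c + y * s
    y_rot = y * c - x * s
    # W (omnidirectional) and Z (height) are unchanged by a rotation about the vertical axis
    return w, y_rot, z, x_rot


# A source encoded 90 degrees to the left (SN3D: Y = 1, X = 0) should end up
# straight ahead (X = 1, Y = 0) once the listener turns 90 degrees to the left.
print(rotate_foa_yaw(1.0, 1.0, 0.0, 0.0, math.radians(90)))
```

Because the rotation is a small matrix applied to a fixed number of channels, its cost does not depend on how many instruments are in the recording.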

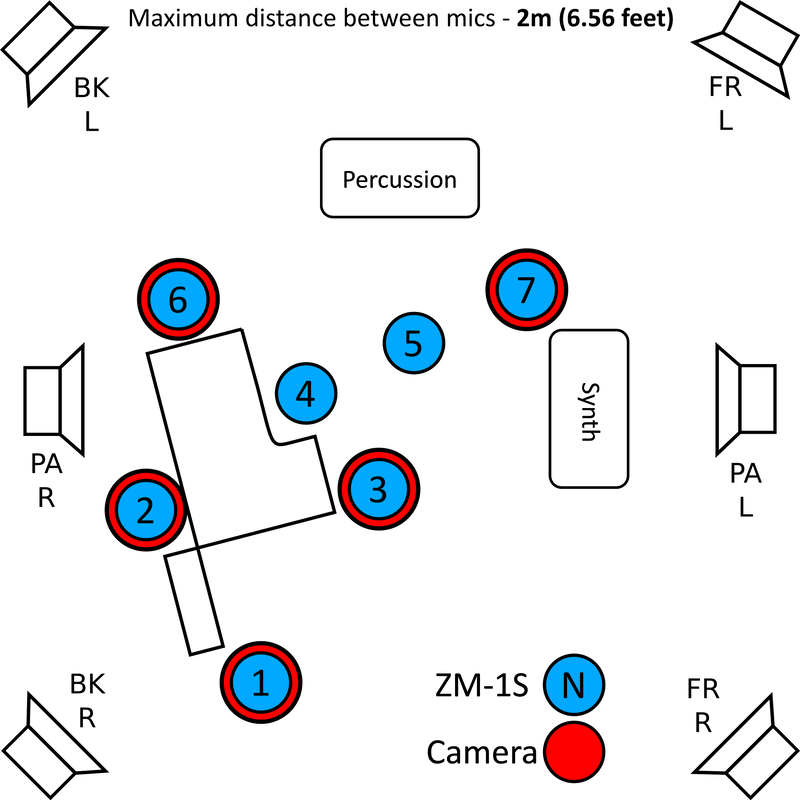

Technical information

The equipment used to record the “Jordan Rudess & Friends” concert:

- One Mac Mini used for recording

- 6 ZM-1S microphones with synchronization

- 5 Qoocam 8K cameras

The 360° videos from the Qoocam 8K were stitched and converted to an equirectangular video format.
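In an equirectangular frame, longitude maps linearly to pixel columns and latitude to pixel rows, which is what lets a VR player look up the picture in any viewing direction. The sketch below shows that mapping. It assumes an axis convention of x forward, y left, z up, with the center of the frame straight ahead, and a 7680 × 3840 (2:1) frame; `direction_to_equirect` is a hypothetical helper, not part of the camera software or the production pipeline described here.

```python
import math


def direction_to_equirect(direction, width, height):
    """Map a direction vector (x forward, y left, z up) to pixel coordinates
    in an equirectangular frame whose center is straight ahead.

    The frame spans 360 degrees horizontally and 180 degrees vertically.
    """
    x, y, z = direction
    azimuth = math.atan2(y, x)                   # radians, positive = left
    elevation = math.atan2(z, math.hypot(x, y))  # radians, positive = up
    u = (0.5 - azimuth / (2 * math.pi)) * width  # left of center -> smaller u
    v = (0.5 - elevation / math.pi) * height     # up -> smaller v (row 0 is the top)
    return u, v


# Assumed 7680 x 3840 frame: straight ahead lands in the middle of the image
print(direction_to_equirect((1.0, 0.0, 0.0), 7680, 3840))  # (3840.0, 1920.0)
```

The same azimuth and elevation convention is what an Ambisonics renderer uses, which makes it straightforward to check that a sound source and its image line up.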

To distribute the concert, a VR application for Oculus Quest VR goggles was built with the Unity3D engine and the Wwise audio engine.

Want to learn more about multi-point 360 audio and video productions? Contact our Sales team:

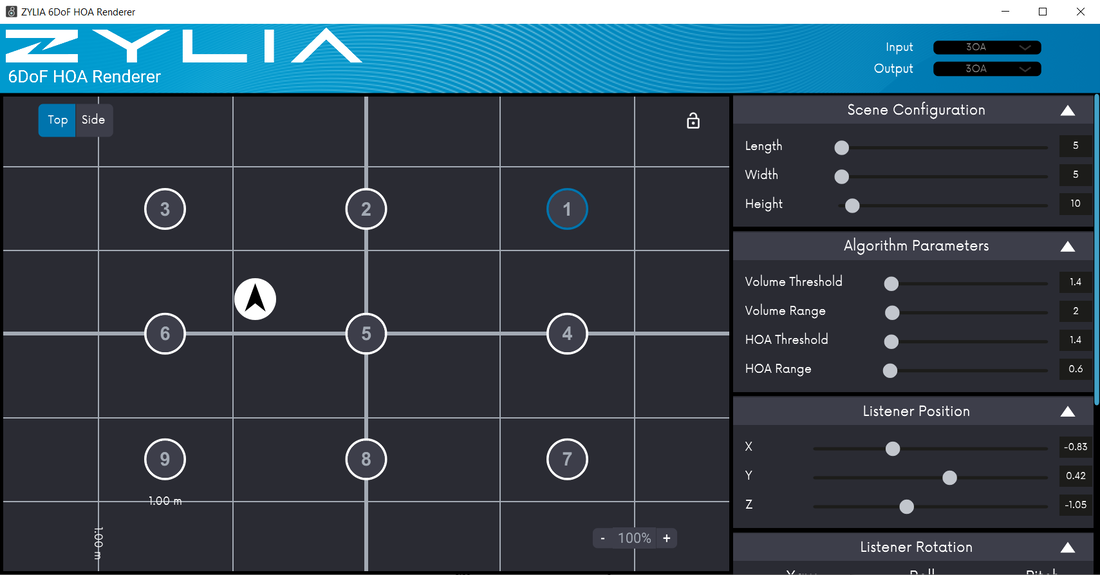

Efficient Volumetric Scene-based audio with ZYLIA 6 Degrees of Freedom solution

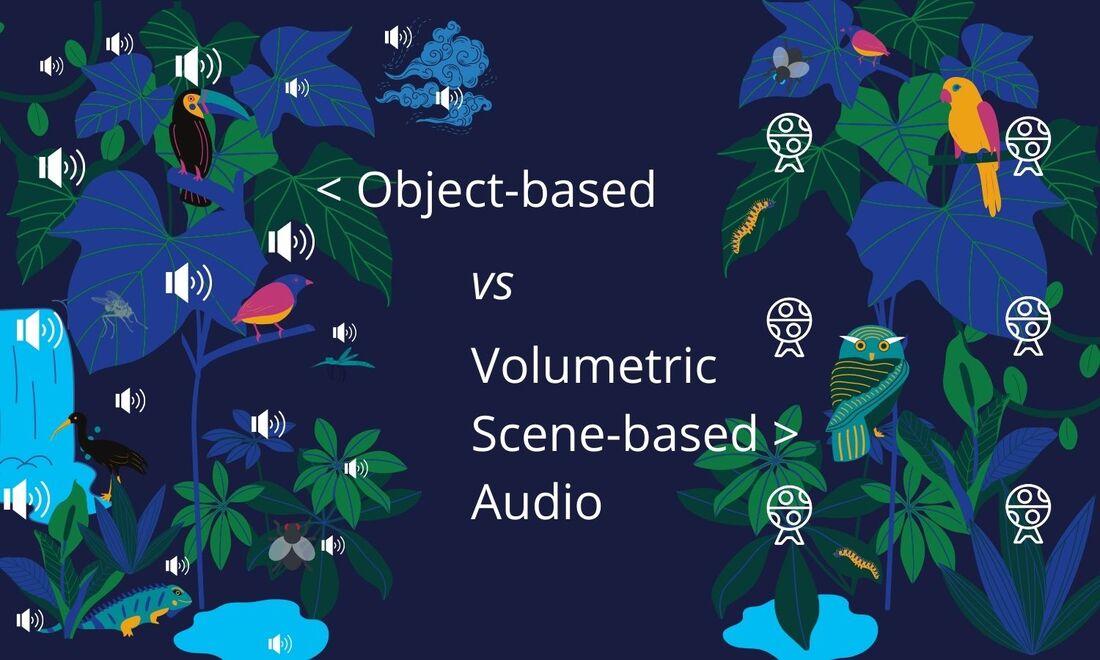

What is the difference between Object-based audio (OBA) and Volumetric Scene-based audio (VSBA)?

The most popular method of producing game soundtracks is known as Object-based audio. In this technique, the entire audio consists of individual sound assets with metadata describing their relationships and associations. Rendering on the user's device means assembling these objects (sound + metadata) into the overall user experience. Object rendering is flexible and responsive to user, environmental, and platform-specific factors [ref.].
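To make the object-based idea concrete, here is a minimal sketch in which each object is a mono asset plus two pieces of metadata (pan position and gain), and the "device" assembles them into a stereo mix. It assumes stereo output and constant-power panning as the rendering rule; real game audio engines do much more (distance attenuation, reverb, HRTF rendering). `SoundObject` and `render_stereo` are hypothetical names used only for illustration.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class SoundObject:
    samples: list[float]  # the sound asset (mono)
    pan: float            # metadata: -1.0 = hard left, 0.0 = center, +1.0 = hard right
    gain: float = 1.0     # metadata: linear gain


def render_stereo(objects: list[SoundObject], num_samples: int) -> tuple[list[float], list[float]]:
    """Assemble objects into a stereo mix using constant-power panning."""
    left = [0.0] * num_samples
    right = [0.0] * num_samples
    for obj in objects:  # the renderer's work grows with the number of objects
        angle = (obj.pan + 1.0) * math.pi / 4  # map [-1, 1] to [0, pi/2]
        g_left = obj.gain * math.cos(angle)
        g_right = obj.gain * math.sin(angle)
        for n in range(min(num_samples, len(obj.samples))):
            left[n] += g_left * obj.samples[n]
            right[n] += g_right * obj.samples[n]
    return left, right


# Two objects: a centered voice and a hard-left guitar
mix = render_stereo([SoundObject([0.5, 0.5], pan=0.0), SoundObject([1.0, 0.0], pan=-1.0)], 2)
```

The outer loop over objects is the point to notice: every additional object adds rendering work on the user's device.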

A complementary approach for games is Volumetric Scene-based audio, especially when the goal is natural sound behavior (reflections, diffraction). VSBA is a set of 3D sound technologies based on Higher-Order Ambisonics (HOA), a format for modeling 3D sound fields on the surface of a sphere. It allows accurate capture, efficient delivery, and compelling reproduction of 3D sound fields on any device (headphones, loudspeakers, etc.). Because VSBA and HOA are so closely interrelated, the two terms are often used interchangeably. Higher-Order Ambisonics is an ideal format for productions that involve large numbers of audio sources, typically held in many stems. While transmitting all these sources plus their metadata may be prohibitive with OBA, the Volumetric Scene-based approach limits the number of PCM (Pulse-Code Modulation) channels transmitted to the end user to a compact set of HOA signals [ref.].

The main advantages of VSBA are:

- Rendering complexity independent of the number of objects in the scene, reducing CPU usage by a factor of 100 to 10,000 compared to OBA

- Possibility of rendering HOA signals to any reproduction device

- Lower number of PCM channels sent to the end user

- Personalized interaction with immersive audio

- Lower production costs for loudspeaker layout
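The channel-count advantage can be sketched in a few lines. An Ambisonics scene of order N carries (N + 1)² channels, so a third-order scene (the order of the ZM-1S arrays mentioned elsewhere on this page) has 16 channels, however many sources were mixed into it. The sketch below encodes point sources into first order for brevity, assuming the AmbiX convention (ACN channel order W, Y, Z, X; SN3D normalization); `encode_foa` and `hoa_channel_count` are hypothetical helpers, not Zylia code. Encoding happens once during production, while the decoder on the user's device only ever sees the fixed set of channels.

```python
import math


def hoa_channel_count(order: int) -> int:
    """A full-sphere Ambisonics signal of order N has (N + 1)^2 channels."""
    return (order + 1) ** 2


def encode_foa(sources, num_samples):
    """Encode mono point sources into first-order Ambisonics.

    Assumes AmbiX: ACN channel order (W, Y, Z, X) and SN3D normalization.
    Each source is (samples, azimuth_rad, elevation_rad), with azimuth
    measured counterclockwise from the front (positive = left).
    """
    channels = [[0.0] * num_samples for _ in range(hoa_channel_count(1))]
    for samples, az, el in sources:
        gains = (
            1.0,                          # W, ACN 0
            math.sin(az) * math.cos(el),  # Y, ACN 1
            math.sin(el),                 # Z, ACN 2
            math.cos(az) * math.cos(el),  # X, ACN 3
        )
        for ch, g in enumerate(gains):
            for n in range(min(num_samples, len(samples))):
                channels[ch][n] += g * samples[n]
    return channels


# Ten sources or a thousand: the encoded scene still has 4 channels.
scene = encode_foa([([0.1] * 8, math.radians(10 * k), 0.0) for k in range(10)], 8)
print(len(scene), hoa_channel_count(3))  # 4 16
```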

Zylia 6 Degrees of Freedom Navigable Audio

- Virtual, Augmented, and Mixed Realities

- Facebook 360, YouTube Spatial Audio, Google Resonance

- 360° videos


The idea

ZYLIA 6 Degrees of Freedom Navigable Audio is a solution based on Ambisonics technology that makes it possible to record an entire sound field around and within any performance imaginable. For listeners, this means they can move freely through the audio space of a live-recorded concert. For instance, they can approach the stage, or even step onto it and stand next to a musician. At every point, the sound they hear is slightly different, just as in real life. At the time of writing, this is the only technology of its kind in the world.
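One simple way to make the sound change as a listener moves between several recording points is to blend the recordings of nearby arrays, weighting each one by inverse distance. The sketch below shows that generic technique, assuming array positions given as (x, y) coordinates in meters on a stage plan. It is not a description of how the ZYLIA 6DoF renderer works internally, which this post does not cover, and `idw_weights` and `blend` are hypothetical helpers. Plain blending like this is only a crude approximation of a navigable sound field.

```python
import math


def idw_weights(listener, mic_positions, power=2.0, eps=1e-9):
    """Inverse-distance weights for blending recordings made at several
    microphone positions. Positions are (x, y) in meters; weights sum to 1."""
    inverse = []
    for i, pos in enumerate(mic_positions):
        d = math.dist(listener, pos)
        if d < eps:
            # Listener stands on a recording point: use that recording alone
            return [1.0 if j == i else 0.0 for j in range(len(mic_positions))]
        inverse.append(1.0 / d ** power)
    total = sum(inverse)
    return [w / total for w in inverse]


def blend(recordings, weights):
    """Weighted sum of equally shaped multichannel recordings
    (each a list of channels, each channel a list of samples)."""
    out = [[0.0] * len(ch) for ch in recordings[0]]
    for rec, w in zip(recordings, weights):
        for c, channel in enumerate(rec):
            for n, sample in enumerate(channel):
                out[c][n] += w * sample
    return out


# Listener halfway between two arrays placed 2 m apart
print(idw_weights((1.0, 0.0), [(0.0, 0.0), (2.0, 0.0)]))  # [0.5, 0.5]
```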

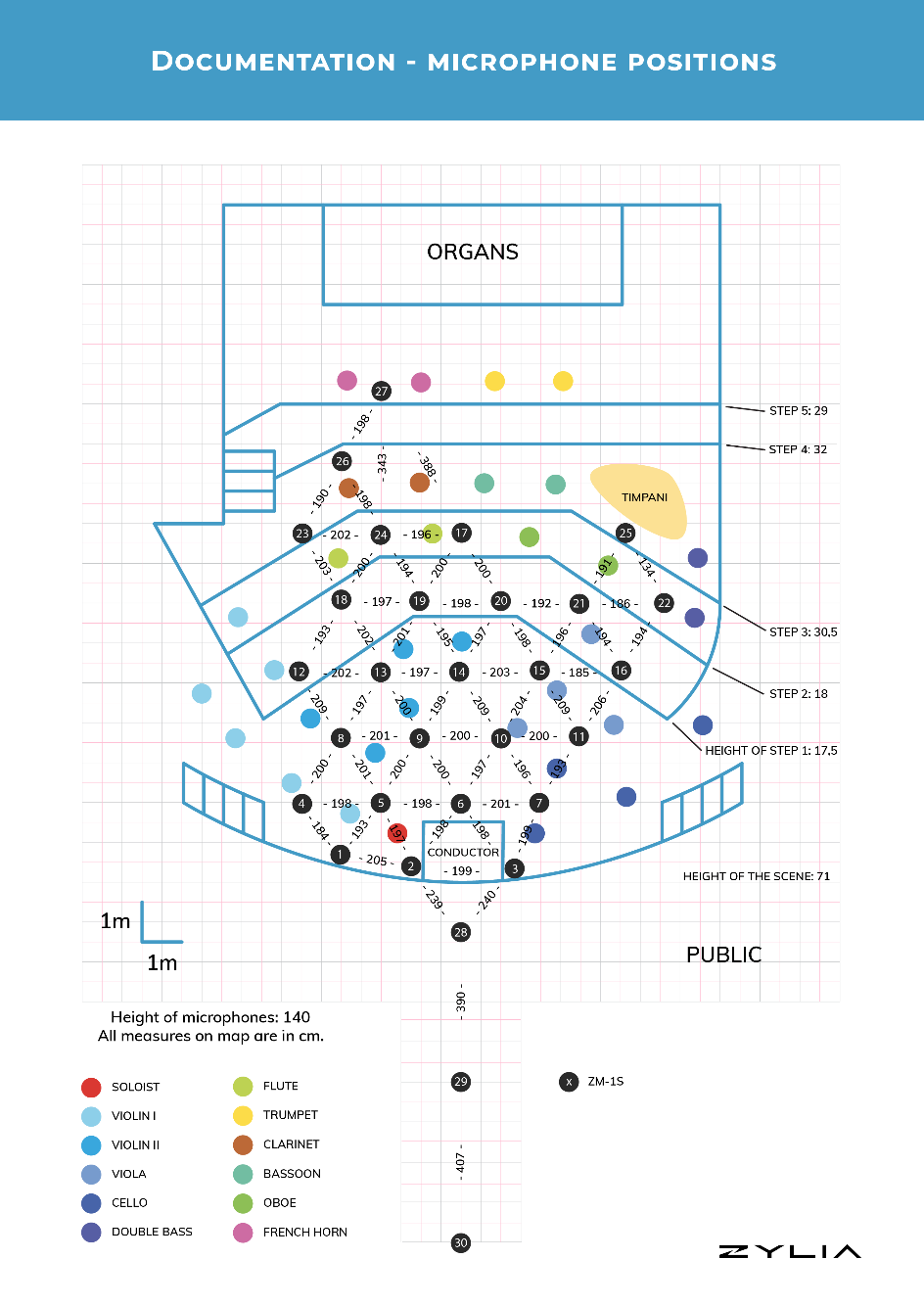

Technical information

How was it recorded?

* Two MacBook Pro laptops for recording

* A single Linux PC workstation serving as a backup recorder

* 30 ZM-1S mics – 3rd order Ambisonics microphones with synchronization

* 600 audio channels – 20 channels from each ZM-1S mic multiplied by 30 units

* 3 hours of recordings, 700 GB of audio data
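A quick back-of-envelope calculation shows where the channel count comes from and how fast data accumulates with this many arrays. The post does not state the sample format, so the sketch assumes uncompressed 48 kHz, 24-bit PCM; those two constants are assumptions, while the 30 arrays and 20 channels per array come from the list above.

```python
# Back-of-envelope data rate for the 30-array recording.
# ASSUMPTION (not stated in the post): 48 kHz sampling, 24-bit uncompressed PCM.
ARRAYS = 30
CHANNELS_PER_ARRAY = 20
SAMPLE_RATE_HZ = 48_000
BYTES_PER_SAMPLE = 3  # 24-bit


def total_channels():
    return ARRAYS * CHANNELS_PER_ARRAY


def bytes_per_second():
    return total_channels() * SAMPLE_RATE_HZ * BYTES_PER_SAMPLE


def gigabytes_per_hour():
    return bytes_per_second() * 3600 / 1e9


print(total_channels())                               # 600
print(bytes_per_second() / 1e6, "MB/s")               # 86.4 MB/s
print(round(gigabytes_per_hour(), 2), "GB per hour")  # 311.04 GB per hour
```

Under these assumptions, 700 GB corresponds to roughly 2.25 hours of all 30 arrays rolling at once; the real figure depends on the recorder's file format and on how long the arrays were actually recording during the session.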

Microphone array placement

One of the main challenges of this project was choosing the most appropriate and effective arrangement of the microphones. Due to the COVID-19 situation, musicians had to keep a minimum distance of 2 meters from one another; with 34 of them on stage and 30 microphones available, we knew we would not be able to cover the whole area. Cable management was also important: for the musicians' comfort, all cable paths had to be as short as possible and their number kept to a minimum. Taking all of these limitations into account, we placed most of the microphones 2 meters apart (the detailed distances between microphones are shown in the picture below, with measurements accurate to 1 cm). This way we were able to cover most of the stage and a small part of the audience area. The results were very satisfying, as you can hear for yourself in our new demo.
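To get a feel for how much area 30 arrays can span at 2 m spacing, the sketch below lays them out on a regular grid and checks the minimum spacing and the overall footprint. The 6 × 5 grid is purely hypothetical: the actual layout was irregular and is the one shown in the picture. `grid_positions`, `min_spacing` and `footprint` are illustrative helpers.

```python
import math
from itertools import combinations


def grid_positions(cols, rows, spacing_m=2.0):
    """Place microphones on a rectangular grid with the given spacing (meters)."""
    return [(c * spacing_m, r * spacing_m) for r in range(rows) for c in range(cols)]


def min_spacing(positions):
    """Smallest distance between any two microphones."""
    return min(math.dist(a, b) for a, b in combinations(positions, 2))


def footprint(positions):
    """Width and depth of the area spanned by the microphones."""
    xs = [p[0] for p in positions]
    ys = [p[1] for p in positions]
    return max(xs) - min(xs), max(ys) - min(ys)


mics = grid_positions(cols=6, rows=5)  # hypothetical 6 x 5 layout
print(len(mics), min_spacing(mics), footprint(mics))  # 30 2.0 (10.0, 8.0)
```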

Recording process

Simultaneously with the audio recording, we captured video to document the event. The film crew placed four static cameras in front of the stage and on the balconies. One camera operator moved along a pre-planned path on the stage. Additionally, we placed two 360-degree cameras among the musicians.

Our chief recording engineer made sure that everything was ready (static cameras, moving camera operator, 360 cameras and recording engineers) and then gave the conductor the signal to begin the performance. When the LED rings on the 30 arrays turned red, everybody knew that the recording had started.

Data

Post-processing and preparing data for the ZYLIA 6DoF renderer

The outcome

Want to learn more about volumetric audio recording? Contact our experts:

There is a tool that lets you take your first easy steps in audio post-processing and brings you closer to the world of professional musicians.

Record and mix with ZYLIA Music!

How does it work?

- Install the ZYLIA Studio driver and software.

- Connect the ZYLIA ZM-1 microphone to your laptop with a USB cable and place it among the musicians. If you play or sing solo, put it about 1 meter in front of you. The software will automatically detect where you are in relation to the microphone.

- Record the song. This will be a 360-degree recording of the entire soundstage. The software will separate your instrument and vocal tracks from it and automatically create a mix. If you recorded a band, you will get a balanced mix of your track, although you can also mix it yourself.

- Once the recording session is over, you will have access to the separated instrument tracks, the raw recordings, and a balanced mix that gives your listeners the feeling of being there with you during the recording.
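Detecting where a player sits relative to a spherical microphone is a direction-of-arrival problem. One textbook approach, sketched below, works on first-order Ambisonics signals: correlating the omnidirectional W channel with the X and Y channels yields a pseudo-intensity vector that points toward the dominant source. This is an illustration under stated assumptions (a single dominant source, SN3D first-order signals already derived from the array, azimuth counterclockwise from the front), not the algorithm ZYLIA Studio uses, which the post does not describe; `estimate_azimuth_deg` is a hypothetical helper.

```python
import math


def estimate_azimuth_deg(w, y, x):
    """Estimate the azimuth of a single dominant source from first-order
    Ambisonics signals (SN3D) using the time-averaged pseudo-intensity vector.

    Returns degrees counterclockwise from the front (positive = left).
    """
    # Correlating the omni channel W with the figure-of-eight channels X and Y
    # gives a vector that points toward the dominant source.
    i_x = sum(wi * xi for wi, xi in zip(w, x))
    i_y = sum(wi * yi for wi, yi in zip(w, y))
    return math.degrees(math.atan2(i_y, i_x))


# Synthetic check: a tone encoded 30 degrees to the left
tone = [math.sin(2 * math.pi * k / 16) for k in range(16)]
az = math.radians(30)
y_ch = [math.sin(az) * s for s in tone]
x_ch = [math.cos(az) * s for s in tone]
print(round(estimate_azimuth_deg(tone, y_ch, x_ch), 3))  # 30.0
```

With several musicians playing at once, this single averaged vector points somewhere between them, so separating a band requires considerably more elaborate analysis.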

Want more?

Don't waste your time scrolling through boring videos on the Internet. Get started making beautiful music!

Good luck!
