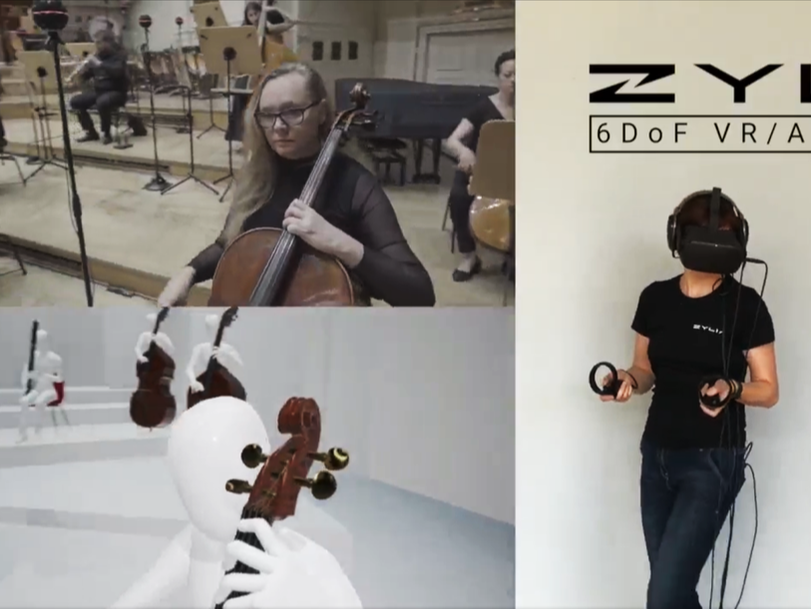

The idea

Zylia, in collaboration with the Poznań Philharmonic Orchestra, presented the world's first navigable audio in a live-recorded performance of a large classical orchestra. With 34 musicians on stage and 30 ZYLIA 3rd-order Ambisonics microphones, we created a virtual concert hall in which each listener can choose their own audio path and get a real being-there sound experience.

ZYLIA 6 Degrees of Freedom Navigable Audio is a solution based on Ambisonics technology that records the entire sound field around and within a performance. For the listener, this means that while listening to a live-recorded concert they can walk freely through the audio space. For instance, they can approach the stage, or even step onto it and stand next to a musician. At every point the sound they hear is slightly different, as in real life. Right now, this is the only technology of its kind in the world.

The "6 Degrees of Freedom" in the name of Zylia's solution refers to six kinds of movement: three translations (up and down, left and right, forward and backward) and three rotations (turning left and right, tilting forward and backward, rolling sideways). In post-production, the exact positions of the microphones placed in the concert hall are mirrored in the virtual space through the ZYLIA software. Once that is done, the listener can create their own audio path, moving in any of the six ways mentioned above, and choose any listening spot they want.

Technical information

6DoF sound can be produced with an object-based approach: pre-recorded mono or stereo files are placed in a virtual space, and the paths and reflections of each wave are then rendered in this synthetic environment. Our approach, by contrast, uses multiple Ambisonics microphones, which lets us capture sound at almost every place in the room simultaneously. The result is 6DoF sound that consists only of real audio recorded in a real acoustic environment.

How was it recorded?
* Two MacBook Pro laptops for recording
* A single Linux PC workstation serving as a backup for recordings
* 30 ZM-1S mics: 3rd-order Ambisonics microphones with synchronization
* 600 audio channels: 20 channels from each ZM-1S mic multiplied by 30 units
* 3 hours of recordings, 700 GB of audio data

Microphone array placement

The placement of 30 ZM-1S microphones on the stage and in front of it.
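The figures in the equipment list can be sanity-checked with a little arithmetic. The sketch below is only a back-of-the-envelope estimate: the sample rate (48 kHz) and bit depth (24-bit PCM) are assumptions, since the article does not state them, and the helper names are made up for illustration. The one general fact used is that an Ambisonics signal of order N has (N + 1)² components.

```python
# Back-of-the-envelope check of the recording figures.
# From the article: 30 microphones, 20 channels each, 700 GB of audio.
# ASSUMED (not stated in the article): 48 kHz sample rate, 24-bit PCM samples.

MICS = 30
CHANNELS_PER_MIC = 20
AMBISONICS_ORDER = 3

SAMPLE_RATE_HZ = 48_000   # assumption
BYTES_PER_SAMPLE = 3      # assumption: 24-bit PCM


def hoa_channel_count(order: int) -> int:
    """Number of components in an Ambisonics signal of the given order."""
    return (order + 1) ** 2


def raw_bytes_per_second(channels: int, sample_rate_hz: int, bytes_per_sample: int) -> int:
    """Uncompressed PCM data rate for the given channel count."""
    return channels * sample_rate_hz * bytes_per_sample


total_channels = MICS * CHANNELS_PER_MIC
rate = raw_bytes_per_second(total_channels, SAMPLE_RATE_HZ, BYTES_PER_SAMPLE)
hours_in_700_gb = 700e9 / rate / 3600

print(f"raw channels recorded: {total_channels}")
print(f"components per 3rd-order signal: {hoa_channel_count(AMBISONICS_ORDER)}")
print(f"data rate: {rate / 1e6:.1f} MB/s")
print(f"700 GB holds about {hours_in_700_gb:.2f} h of audio at that rate")
```

Under these assumptions, 600 channels produce 86.4 MB of raw audio per second, so 700 GB corresponds to roughly 2.25 hours of continuous recording. That is the same order of magnitude as the three hours quoted in the list.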

Recording process

To be able to choose the best versions of the performances, the Orchestra played the Overture nine times and the Aria eight times, with three additional overdubs. Alongside the audio recording, we captured video to document the event. The film crew placed four static cameras in front of the stage and on the balconies. One camera operator moved along a pre-planned path on the stage. Additionally, we put two 360-degree cameras among the musicians. Our chief recording engineer made sure that everything was ready (static cameras, moving camera operator, 360-degree cameras and recording engineers) and then gave the Conductor a sign to begin the performance. When the LED rings on the 30 arrays turned red, everybody knew that the recording had started.

Data

The large amount of data makes it possible to explore the same moment in endless ways. Recording all 19 takes of the two pieces resulted in 700 GB of audio. The entire recording and preparation process was filmed with several cameras, which produced around 650 GB of video. In total, we gathered almost 1.5 TB of data.

Post-processing and preparing data for the ZYLIA 6DoF renderer

First, we had to prepare a 3D model of the stage. The model of the concert hall was reworked to match the real-life dimensions. Then we placed the microphones and musicians in the model according to accurate measurements. When this was done, specific parameters of the interpolation algorithm in the ZYLIA 6DoF HOA Renderer had to be set.

The next task was the most difficult in post-production: matching the real camera sequences with the sequences from the VR environment in Unreal Engine. After this painstaking process of matching the paths of the virtual and real cameras, a connection between Unreal and Wwise was established. This let us render the sound along the defined path in Unreal, just as if someone were walking there in VR.
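To give a feel for what interpolating between microphones can mean, here is a minimal sketch. It is not the algorithm used by the ZYLIA 6DoF HOA Renderer, whose details and parameters are not described here. It swaps in a simple inverse-distance weighting over the nearest microphones, and every name in it (`interpolation_weights`, `mix_frames`, the parameter `k`) is hypothetical.

```python
import math


def interpolation_weights(listener, mic_positions, k=3, eps=1e-6):
    """Return {mic_index: weight} for the k microphones nearest the listener.

    Weights are proportional to 1 / distance and sum to 1.
    Illustrative only: NOT the ZYLIA renderer's actual algorithm.
    """
    distances = sorted(
        (math.dist(listener, pos), index) for index, pos in enumerate(mic_positions)
    )
    nearest = distances[:k]
    # eps avoids division by zero when the listener stands exactly on a microphone.
    inverse = {index: 1.0 / max(dist, eps) for dist, index in nearest}
    total = sum(inverse.values())
    return {index: value / total for index, value in inverse.items()}


def mix_frames(weights, hoa_frames):
    """Weighted sum of one Ambisonics frame (a list of components) per microphone."""
    n_components = len(hoa_frames[0])
    mixed = [0.0] * n_components
    for mic, weight in weights.items():
        for ch in range(n_components):
            mixed[ch] += weight * hoa_frames[mic][ch]
    return mixed


# Hypothetical layout: four microphones (metres), listener between mics 0 and 1.
mics = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (10.0, 10.0, 0.0)]
listener_position = (1.0, 0.0, 0.0)

# One made-up 16-component (3rd-order) frame per microphone.
frames = [[1.0] * 16, [3.0] * 16, [5.0] * 16, [7.0] * 16]

weights = interpolation_weights(listener_position, mics, k=2)
mixed = mix_frames(weights, frames)
print(weights)
print(mixed[:4])
```

This sketch covers only the three translational degrees of freedom. In an Ambisonics workflow, the listener's head orientation (the three rotational degrees) is typically handled separately by rotating the sound field before it is decoded for playback.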
The last step was to synchronize and connect the real and virtual video with the desired audio.

The outcome

The outcome of this project is presented in the movie “The Walk Through The Music”, in which we enter the music spectacle from the audience position and move around the artists on the stage. You can also watch the “Making of” movie for more detail on what the setup looked like.

Want to learn more about volumetric audio recording? Contact our experts.


© Zylia Sp. z o.o., copyright 2018. ALL RIGHTS RESERVED.
